ONIX: an X-ray deep-learning tool for 3D reconstructions from sparse views

Paper and Code

Mar 01, 2022

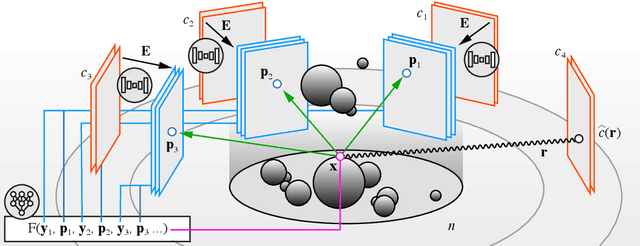

Three-dimensional (3D) X-ray imaging techniques like tomography and confocal microscopy are crucial for academic and industrial applications. These approaches access 3D information by scanning the sample with respect to the X-ray source. However, the scanning process limits the temporal resolution when studying dynamics and is not feasible for some applications, such as surgical guidance in medicine. When scanning is not possible, X-ray stereoscopy and multi-projection imaging offer alternative ways to obtain 3D information. However, these approaches suffer from limited volumetric information, as they acquire only a small number of views or projections compared to traditional 3D scanning techniques. Here, we present ONIX (Optimized Neural Implicit X-ray imaging), a deep-learning algorithm capable of retrieving 3D objects with arbitrarily large resolution from only a sparse set of projections. Although ONIX does not have access to any volumetric information, it outperforms current 3D reconstruction approaches because it includes the physics of image formation with X-rays and generalizes across different experiments over similar samples, which compensates for the limited volumetric information provided by sparse views. We demonstrate the capabilities of ONIX compared to state-of-the-art tomographic reconstruction algorithms by applying it to simulated and experimental datasets in which a maximum of eight projections are acquired. We anticipate that ONIX will become a crucial tool for the X-ray community by i) enabling, when implemented together with X-ray multi-projection imaging, the study of fast dynamics not possible today, and ii) enhancing the volumetric information and capabilities of X-ray stereoscopic imaging in medical applications.
