One-Shot Federated Learning for Model Clustering and Learning in Heterogeneous Environments


Sep 22, 2022

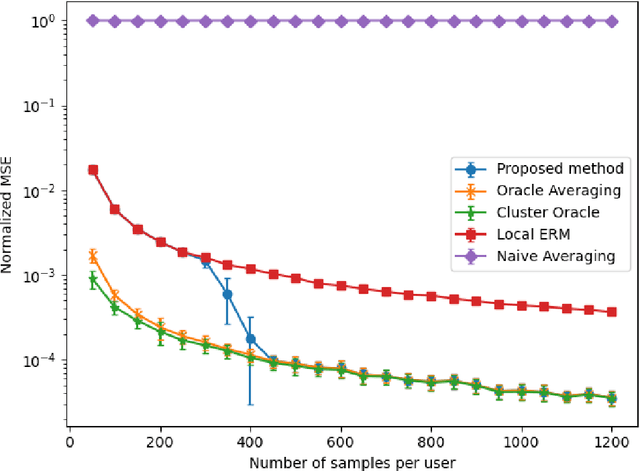

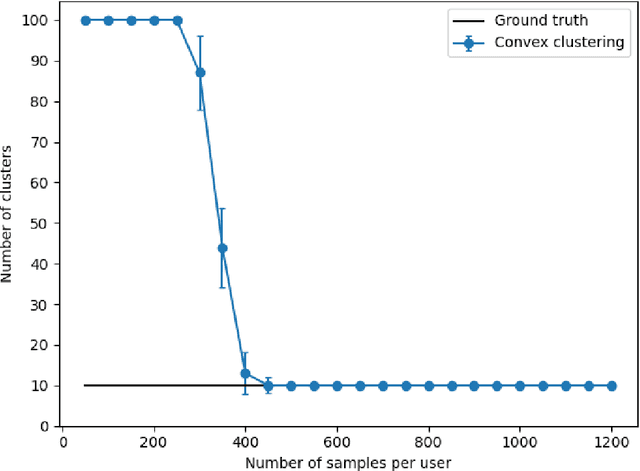

We propose a communication-efficient approach for federated learning in heterogeneous environments. The system heterogeneity is reflected in the presence of $K$ different data distributions, with each user sampling data from only one of the $K$ distributions. The proposed approach requires only one communication round between the users and the server, thus significantly reducing the communication cost. Moreover, the proposed method provides strong learning guarantees in heterogeneous environments: it achieves the optimal mean-squared error (MSE) rates in terms of the sample size, i.e., it matches the MSE guarantees achieved by learning on all data points belonging to users with the same data distribution. This holds provided that the number of data points per user is above a threshold that we explicitly characterize in terms of system parameters. Remarkably, this is achieved without requiring any knowledge of the underlying distributions, or even of the true number of distributions $K$. Numerical experiments illustrate our findings and underline the performance of the proposed method.
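The one-round structure described above can be illustrated with a minimal sketch. It is not the paper's algorithm: the abstract does not name the clustering procedure, so the code assumes linear regression with local least-squares fits, a simple distance-threshold clustering that needs no knowledge of $K$, and per-cluster averaging of the local models. The function names (`local_estimate`, `cluster_by_threshold`, `one_shot_round`), the `radius` parameter, and the synthetic data are all hypothetical.

```python
import numpy as np


def local_estimate(X, y):
    """Each user fits a model on its own data only (least squares here)."""
    theta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return theta


def cluster_by_threshold(models, radius):
    """Group models into connected components of the graph that links
    two models when they are closer than `radius`. The number of
    clusters is not given in advance. (Assumed stand-in clustering.)"""
    n = len(models)
    labels = [-1] * n
    current = 0
    for i in range(n):
        if labels[i] != -1:
            continue
        labels[i] = current
        stack = [i]
        while stack:
            j = stack.pop()
            for k in range(n):
                close = np.linalg.norm(models[j] - models[k]) < radius
                if labels[k] == -1 and close:
                    labels[k] = current
                    stack.append(k)
        current += 1
    return labels


def one_shot_round(user_data, radius):
    """Single communication round: users send local models once,
    the server clusters them and averages within each cluster."""
    models = [local_estimate(X, y) for X, y in user_data]
    labels = cluster_by_threshold(models, radius)
    centers = {
        c: np.mean([m for m, l in zip(models, labels) if l == c], axis=0)
        for c in set(labels)
    }
    return labels, centers


# Synthetic setup (assumed): two hidden distributions, three users each.
rng = np.random.default_rng(0)
true_models = [np.array([2.0, -1.0]), np.array([-3.0, 4.0])]
assignment = [0, 0, 0, 1, 1, 1]

user_data = []
for k in assignment:
    X = rng.normal(size=(200, 2))
    y = X @ true_models[k] + 0.1 * rng.normal(size=200)
    user_data.append((X, y))

labels, centers = one_shot_round(user_data, radius=1.0)
print(labels)
print(centers)
```

The sketch relies on each user holding enough data for its local model to land close to the true model of its distribution, so that models from different distributions stay well separated. This loosely mirrors the abstract's condition that the number of data points per user exceeds a threshold.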
