One-shot distributed ridge regression in high dimensions

Mar 22, 2019

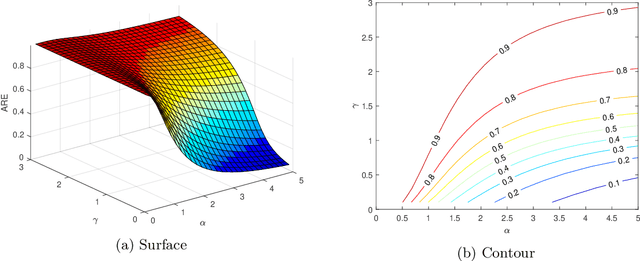

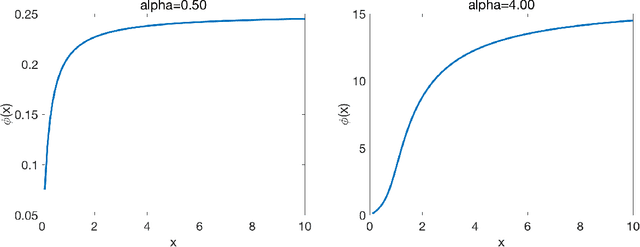

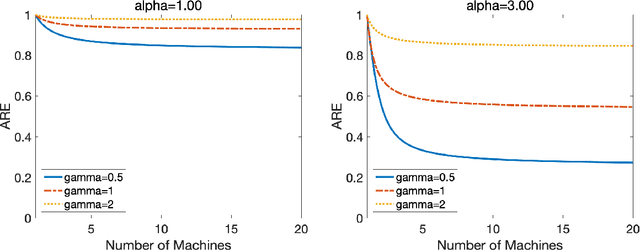

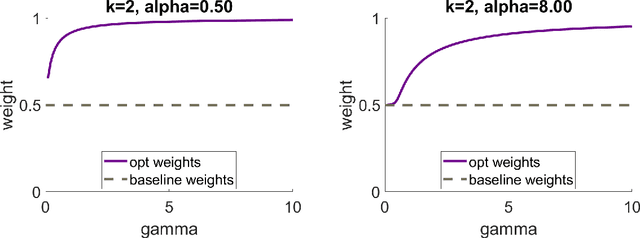

In many areas, practitioners need to analyze datasets that are too large for conventional single-machine computing. To scale up data analysis, distributed and parallel computing approaches are increasingly needed: datasets are spread over several computing units, which do most of the analysis locally and communicate only short messages. Here we study a fundamental problem in this area: how to do ridge regression in a distributed computing environment. Ridge regression is an extremely popular method for supervised learning and has several optimality properties, which makes it important to study. We study one-shot methods that construct weighted combinations of ridge regression estimators computed on each machine. By analyzing the mean squared error in a high-dimensional random-effects model where each predictor has a small effect, we discover several new phenomena. 1. Infinite-worker limit: The distributed estimator works well even for very large numbers of machines, a phenomenon we call the "infinite-worker limit". 2. Optimal weights: The optimal weights for combining local estimators sum to more than unity, due to the downward bias of ridge; thus, all averaging methods are suboptimal. We also propose a new optimally weighted one-shot ridge regression algorithm. We confirm our results in simulation studies and on the Million Song Dataset, where we can save at least 100x in computation time while nearly preserving test accuracy.
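As a rough illustration of the one-shot scheme described in the abstract, the following Python sketch fits a ridge estimator on each data shard and returns a weighted combination of the local estimators. It is a minimal sketch under stated assumptions, not the paper's algorithm: the function names `local_ridge` and `one_shot_ridge`, the penalty scaling `n * lam`, the simulated data, and the weight values are all illustrative choices, and the weights here are picked by hand rather than computed from the optimal-weight formula the paper derives.

```python
import numpy as np


def local_ridge(X, y, lam):
    """Ridge estimator on one machine's data.

    Assumption: the penalty is scaled by the local sample size n,
    i.e. we solve (X^T X + n * lam * I) beta = X^T y.
    """
    n, p = X.shape
    return np.linalg.solve(X.T @ X + n * lam * np.eye(p), X.T @ y)


def one_shot_ridge(X_parts, y_parts, lam, weights=None):
    """Weighted combination of local ridge estimators.

    Each machine sends only its p-dimensional estimate, so there is a
    single round of communication. With weights=None this reduces to
    plain averaging (weights 1/k, summing to one).
    """
    k = len(X_parts)
    if weights is None:
        weights = np.full(k, 1.0 / k)
    weights = np.asarray(weights, dtype=float)
    # Stack local estimates into a (k, p) array, one row per machine.
    local = np.stack([local_ridge(X, y, lam) for X, y in zip(X_parts, y_parts)])
    return weights @ local


# Simulated data (hypothetical sizes): many predictors, each with a small effect.
rng = np.random.default_rng(0)
n, p, k = 2000, 50, 4
beta = rng.normal(scale=1.0 / np.sqrt(p), size=p)
X = rng.normal(size=(n, p))
y = X @ beta + rng.normal(size=n)

# Split the rows across k "machines".
X_parts = np.array_split(X, k)
y_parts = np.array_split(y, k)

# Plain averaging: weights sum to one.
beta_avg = one_shot_ridge(X_parts, y_parts, lam=0.1)

# Hand-picked weights summing to 1.1, to show how weights above unity
# rescale the shrunken local estimates. The value 1.1 is arbitrary.
beta_scaled = one_shot_ridge(X_parts, y_parts, lam=0.1, weights=np.full(k, 1.1 / k))
```

Because ridge shrinks each local estimate toward zero, equal weights that sum to slightly more than one simply rescale the averaged estimator upward; the abstract's claim is that the optimal choice of such weights exceeds unity in total, which this sketch does not attempt to compute.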
