On the Usefulness of the Fit-on-the-Test View on Evaluating Calibration of Classifiers

Paper and Code

Mar 21, 2022

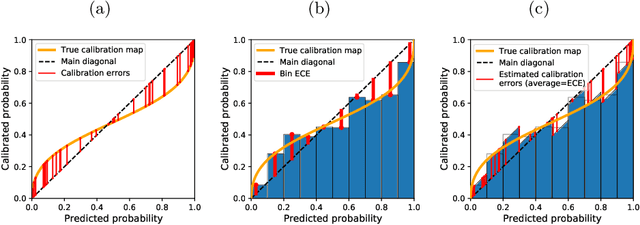

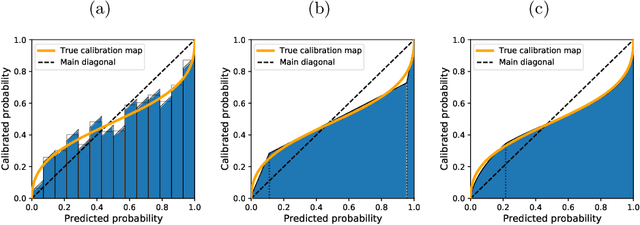

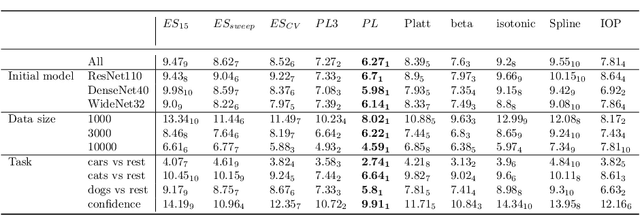

Every uncalibrated classifier has a corresponding true calibration map that calibrates its confidence. Deviations of this idealistic map from the identity map reveal miscalibration. Such calibration errors can be reduced with many post-hoc calibration methods which fit some family of calibration maps on a validation dataset. In contrast, evaluation of calibration with the expected calibration error (ECE) on the test set does not explicitly involve fitting. However, as we demonstrate, ECE can still be viewed as if fitting a family of functions on the test data. This motivates the fit-on-the-test view on evaluation: first, approximate a calibration map on the test data, and second, quantify its distance from the identity. Exploiting this view allows us to unlock missed opportunities: (1) use the plethora of post-hoc calibration methods for evaluating calibration; (2) tune the number of bins in ECE with cross-validation. Furthermore, we introduce: (3) benchmarking on pseudo-real data where the true calibration map can be estimated very precisely; and (4) novel calibration and evaluation methods using new calibration map families PL and PL3.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge