On the Robustness and Generalization of Deep Learning Driven Full Waveform Inversion


Nov 28, 2021

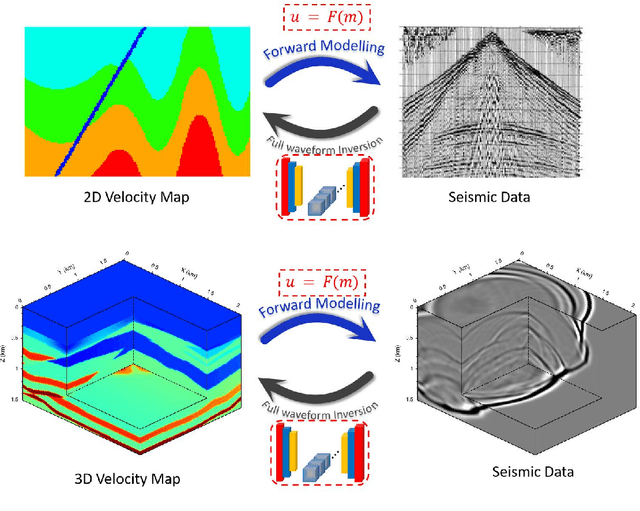

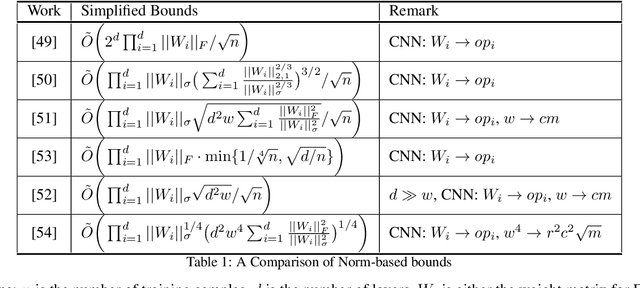

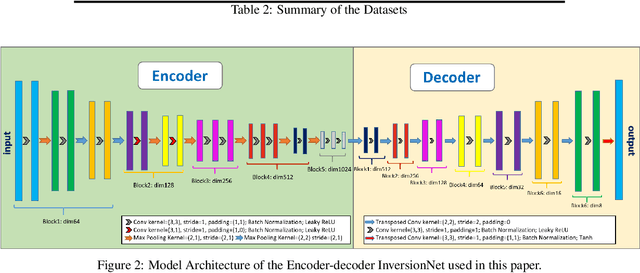

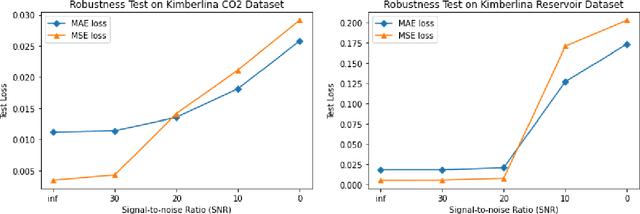

The data-driven approach has been demonstrated as a promising technique for solving complicated scientific problems. Full Waveform Inversion (FWI) is commonly framed as an image-to-image translation task, which motivates the use of deep neural networks as an end-to-end solution. Despite being trained on synthetic data, deep learning-driven FWI is expected to perform well when evaluated on sufficient real-world data. In this paper, we study these properties by asking: how robust are these deep neural networks, and how well do they generalize? For robustness, we prove upper bounds on the deviation between predictions from clean and noisy data. Moreover, we demonstrate an interplay between the noise level and the resulting increase in the loss. For generalization, we prove a norm-based upper bound on the generalization error via a stability-generalization framework. Experimental results on seismic FWI datasets corroborate the theoretical results, shedding light on the use of deep learning for complicated scientific applications.
