On the reproducibility of fully convolutional neural networks for modeling time-space evolving physical systems


May 12, 2021

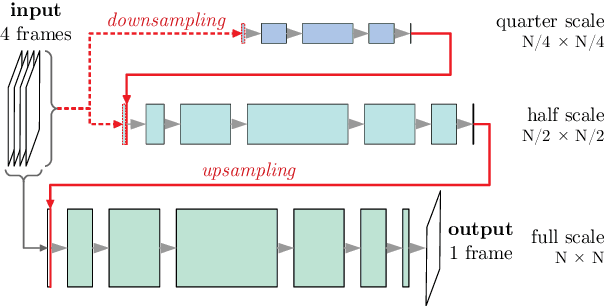

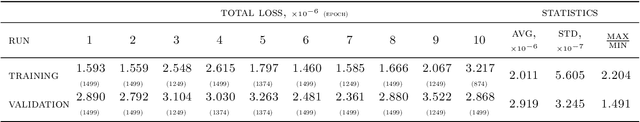

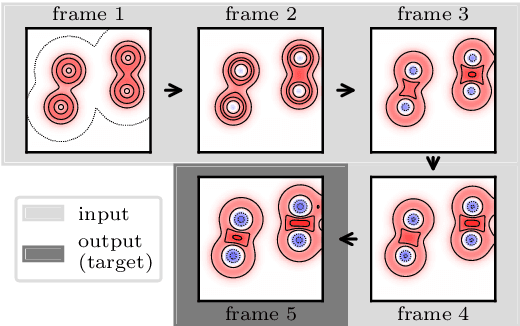

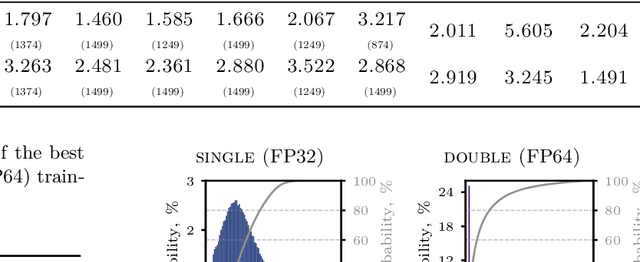

The reproducibility of a deep-learning fully convolutional neural network is evaluated by training the same network several times under identical conditions (database, hyperparameters, hardware) with non-deterministic Graphics Processing Unit (GPU) operations. The propagation of two-dimensional acoustic waves, typical of time-space evolving physical systems, is studied on both recursive and non-recursive tasks. Significant changes in model properties (weights, featured fields) are observed. When tested on various propagation benchmarks, these models systematically return estimations with a high level of deviation, especially for the recurrent analysis, which strongly amplifies the variability caused by non-determinism. Training runs performed in double floating-point precision provide slightly better estimations and significantly reduce the variability of both the network parameters and the testing error range.
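One well-known source of run-to-run variation in non-deterministic GPU operations is that floating-point addition is not associative, so a reduction whose accumulation order changes between runs can return slightly different results. The sketch below is a toy CPU illustration of that effect and is not the authors' code: the helper `order_spread`, the array size, and the number of orderings are all hypothetical choices, and shuffling the input only stands in for an unordered GPU reduction. It sums the same values in several random orders and compares the spread of the totals in single and double precision.

```python
import numpy as np


def order_spread(values, dtype, n_orders=20, seed=0):
    """Spread (max - min) of the total when the same values are summed
    in different orders, accumulating in the given dtype.

    Hypothetical helper: shuffling mimics a reduction whose accumulation
    order is not fixed from one run to the next.
    """
    rng = np.random.default_rng(seed)
    totals = []
    for _ in range(n_orders):
        shuffled = rng.permutation(values).astype(dtype)
        # cumsum accumulates sequentially, so the order of the terms matters
        totals.append(np.cumsum(shuffled, dtype=dtype)[-1])
    totals = np.asarray(totals, dtype=np.float64)
    return float(totals.max() - totals.min())


# Same data for both precisions (assumed size: 10,000 samples)
values = np.random.default_rng(42).standard_normal(10_000).astype(np.float32)

spread32 = order_spread(values, np.float32)
spread64 = order_spread(values, np.float64)

print(f"float32 spread across orderings: {spread32:.3e}")
print(f"float64 spread across orderings: {spread64:.3e}")
```

In this toy setting the double-precision totals vary far less across orderings than the single-precision ones, which is consistent with the reduced variability reported above for double-precision training. It does not reproduce the training experiments themselves.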
