On the price of explainability for some clustering problems


Jan 05, 2021

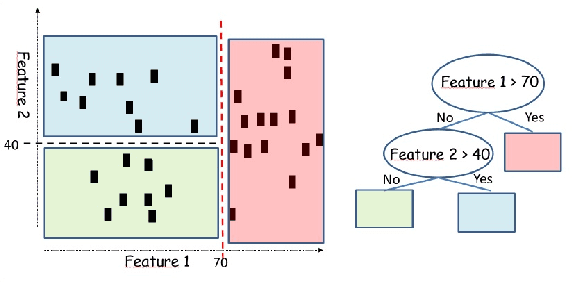

Machine learning models and algorithms are used in many systems that affect our daily lives. In some settings, therefore, methods that are easy to explain or interpret may be highly desirable. The price of explainability can be thought of as the unavoidable loss in quality incurred when these systems are restricted to explainable methods. We study the price of explainability, from a theoretical perspective, for clustering tasks. We provide upper and lower bounds on this price, as well as efficient algorithms for building explainable clusterings, for the $k$-means, $k$-medians, $k$-center and maximum-spacing problems in a natural model in which explainability is achieved via decision trees.
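To make the notion of a price concrete, the following is a minimal sketch for the $k$-means objective with $k = 2$. It is not one of the paper's algorithms. It assumes that an explainable clustering with two clusters is one induced by a single axis-aligned threshold cut (a decision tree with one internal node and two leaves), and it compares the best such cut with the best unrestricted bipartition, found by brute force. The function names and the four-point instance are hypothetical and chosen only for illustration.

```python
from itertools import combinations


def kmeans_cost(cluster):
    """Sum of squared distances from each point to the cluster centroid."""
    if not cluster:
        return 0.0
    dim = len(cluster[0])
    centroid = [sum(p[d] for p in cluster) / len(cluster) for d in range(dim)]
    return sum(sum((p[d] - centroid[d]) ** 2 for d in range(dim)) for p in cluster)


def best_unrestricted_cost(points):
    """Brute-force the optimal 2-means cost over all nonempty bipartitions."""
    n = len(points)
    best = float("inf")
    for size in range(1, n // 2 + 1):
        for idx in combinations(range(n), size):
            chosen = set(idx)
            left = [points[i] for i in chosen]
            right = [points[i] for i in range(n) if i not in chosen]
            best = min(best, kmeans_cost(left) + kmeans_cost(right))
    return best


def best_threshold_cost(points):
    """Best 2-means cost among clusterings induced by one axis-aligned cut.

    Assumption: this single cut plays the role of a two-leaf decision tree.
    """
    best = float("inf")
    dim = len(points[0])
    for d in range(dim):
        values = sorted({p[d] for p in points})
        # Candidate thresholds: midpoints between consecutive coordinate values.
        for lo, hi in zip(values, values[1:]):
            theta = (lo + hi) / 2
            left = [p for p in points if p[d] <= theta]
            right = [p for p in points if p[d] > theta]
            best = min(best, kmeans_cost(left) + kmeans_cost(right))
    return best


# Hypothetical instance: the two natural clusters are split by the diagonal
# x + y = 2, which no single axis-aligned cut can reproduce.
points = [(0.0, 1.0), (1.0, 0.0), (1.0, 2.0), (2.0, 1.0)]

optimal = best_unrestricted_cost(points)
explainable = best_threshold_cost(points)
ratio = explainable / optimal
print(f"optimal={optimal:.3f} explainable={explainable:.3f} ratio={ratio:.3f}")
```

On this instance every axis-aligned cut isolates a single point, so the explainable cost is 8/3 against an unrestricted optimum of 2, a ratio of 4/3. The price of explainability in the abstract is the worst case of such a ratio over instances; this sketch only evaluates one instance.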

* 19 pages, 1 figure
