On the Interpretability of Deep Learning Based Models for Knowledge Tracing


Jan 27, 2021

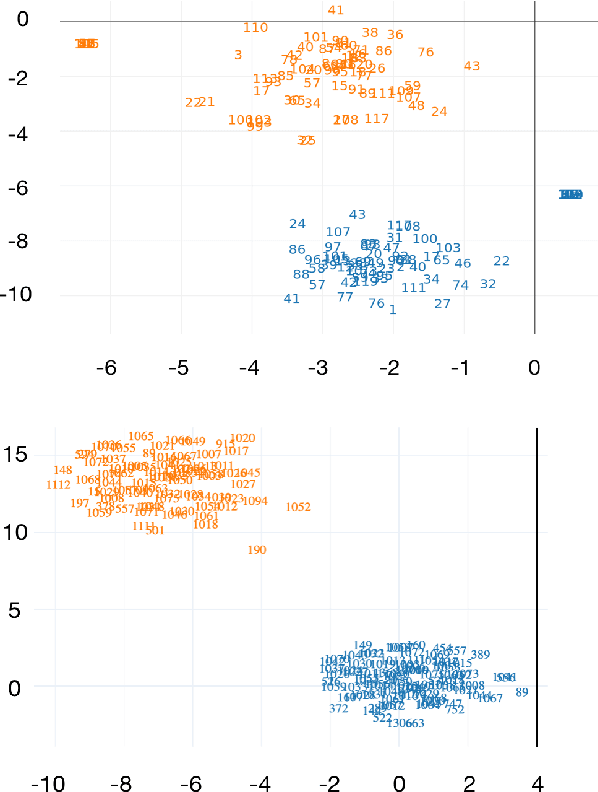

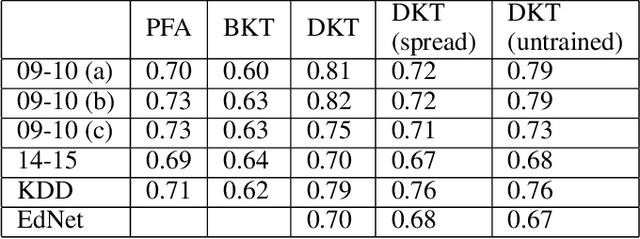

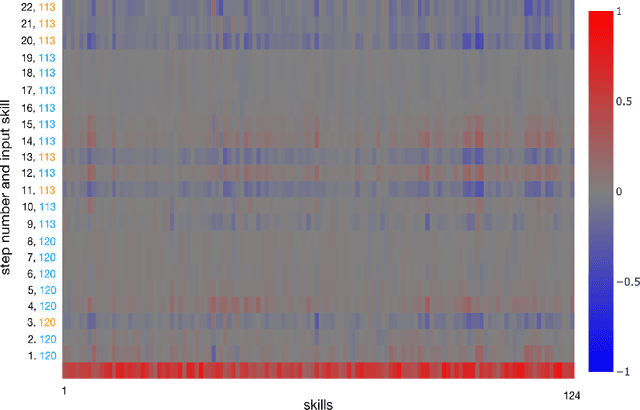

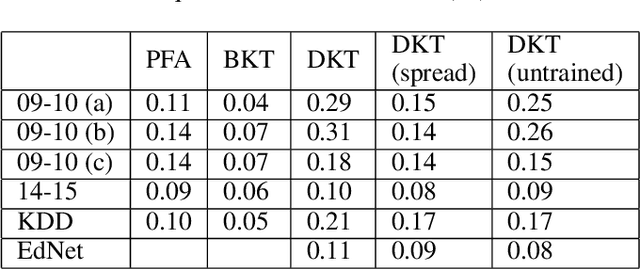

Knowledge tracing allows Intelligent Tutoring Systems to infer which topics or skills a student has mastered and to adjust the curriculum accordingly. Deep learning based models such as Deep Knowledge Tracing (DKT) and Dynamic Key-Value Memory Network (DKVMN) have achieved significant improvements over models such as Bayesian Knowledge Tracing (BKT) and Performance Factors Analysis (PFA). However, these deep learning based models are less interpretable than the others because the decision-making process learned by deep neural networks is not wholly understood by the research community. In previous work, we critically examined the DKT model, visualizing and analyzing its behavior in high dimensional space. In this work, we extend our original analyses with a much larger dataset and add a discussion of the memory states of the DKVMN model. We find that Deep Knowledge Tracing has some critical pitfalls: 1) instead of tracking each skill through time, DKT is more likely to learn an "ability" model; 2) the recurrent nature of DKT reinforces irrelevant information that it uses during the tracking task; 3) an untrained recurrent network can achieve results similar to those of a trained DKT model, supporting the conclusion that recurrence relations are not properly learned and that the improvements are instead a benefit of projection into a high dimensional, sparse vector space. Based on these observations, we propose improvements and future directions for knowledge tracing research using deep neural network models.
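To make the third observation more concrete, the following is a minimal sketch of what "an untrained recurrent network" projecting student interactions into a high dimensional space could look like. It is an illustration under stated assumptions, not the setup used in the paper: the one-hot encoding of (skill, correctness) pairs, the plain tanh recurrence, the weight scaling, and the names `encode_interactions` and `frozen_rnn_states` are all hypothetical choices made for this example.

```python
import numpy as np


def encode_interactions(skills, correct, num_skills):
    """One-hot encode (skill, correctness) pairs.

    Assumed layout: index = skill + num_skills * correct, so each step
    is a sparse vector of length 2 * num_skills with a single 1.
    """
    skills = np.asarray(skills)
    correct = np.asarray(correct)
    x = np.zeros((len(skills), 2 * num_skills))
    x[np.arange(len(skills)), skills + num_skills * correct] = 1.0
    return x


def frozen_rnn_states(x, hidden_size, seed=0):
    """Run a sequence through a randomly initialised tanh RNN.

    The weights are drawn once and never updated, so the hidden states
    are a fixed random projection of the interaction history.
    """
    rng = np.random.default_rng(seed)
    input_size = x.shape[1]
    w_in = rng.normal(scale=1.0 / np.sqrt(input_size),
                      size=(input_size, hidden_size))
    w_rec = rng.normal(scale=1.0 / np.sqrt(hidden_size),
                       size=(hidden_size, hidden_size))
    h = np.zeros(hidden_size)
    states = []
    for x_t in x:
        h = np.tanh(x_t @ w_in + h @ w_rec)
        states.append(h)
    return np.stack(states)


# Hypothetical toy sequence: four interactions over three skills.
x = encode_interactions(skills=[0, 2, 1, 2], correct=[1, 0, 1, 1], num_skills=3)
states = frozen_rnn_states(x, hidden_size=8)
```

In a comparison of this kind, only a readout layer on top of `states` would be trained to predict the next response, while the recurrent weights stay at their random initial values.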
