On the Implicit Bias Towards Minimal Depth of Deep Neural Networks

Mar 12, 2022

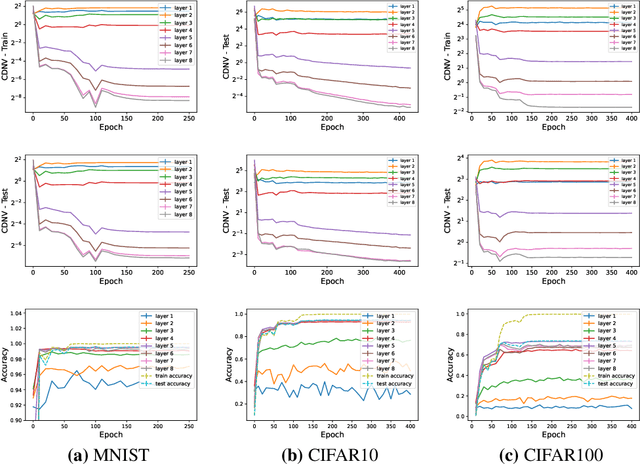

We study the implicit bias of gradient-based training methods toward low-depth solutions when training deep neural networks. Recent results in the literature suggest that the penultimate-layer representations learned by a multi-class classifier exhibit a clustering property called neural collapse. We demonstrate empirically that neural collapse extends beyond the penultimate layer and tends to emerge in intermediate layers as well. In this regard, we hypothesize that gradient-based methods are implicitly biased toward selecting neural networks of minimal depth for achieving this clustering property.
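To make the clustering property concrete, here is a minimal sketch of one possible way to quantify how tightly a layer's representations cluster by class. It compares within-class variance to the squared distance between class means, averaged over class pairs. The abstract does not state which measure the authors use, so this ratio and the function names (`clustering_score` and its helpers) are illustrative assumptions. A value near zero means the features of each class have nearly collapsed onto their class mean.

```python
from itertools import combinations


def class_mean(points):
    # Coordinate-wise mean of a list of equal-length feature vectors.
    dim = len(points[0])
    return [sum(p[k] for p in points) / len(points) for k in range(dim)]


def squared_distance(u, v):
    # Squared Euclidean distance between two vectors.
    return sum((a - b) ** 2 for a, b in zip(u, v))


def class_variance(points, mean):
    # Mean squared distance of the samples to their class mean.
    return sum(squared_distance(p, mean) for p in points) / len(points)


def clustering_score(features, labels):
    """Hypothetical collapse score: average over class pairs (a, b) of
    (var_a + var_b) / (2 * ||mean_a - mean_b||^2).

    Assumes at least two classes whose means are distinct.
    """
    by_class = {}
    for x, y in zip(features, labels):
        by_class.setdefault(y, []).append(x)
    means = {c: class_mean(pts) for c, pts in by_class.items()}
    variances = {c: class_variance(pts, means[c]) for c, pts in by_class.items()}
    ratios = [
        (variances[a] + variances[b]) / (2.0 * squared_distance(means[a], means[b]))
        for a, b in combinations(sorted(by_class), 2)
    ]
    return sum(ratios) / len(ratios)


# Toy features standing in for one layer's activations (made-up data).
labels = [0, 0, 1, 1]
spread = [[-1.0, 0.0], [1.0, 0.0], [9.0, 0.0], [11.0, 0.0]]
collapsed = [[0.0, 0.0], [0.0, 0.0], [10.0, 0.0], [10.0, 0.0]]

spread_score = clustering_score(spread, labels)
collapsed_score = clustering_score(collapsed, labels)
```

Under these assumptions, evaluating such a score on the activations of each layer in turn would show the depth at which the representations start to cluster, which is the kind of per-layer behavior the abstract describes.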
