On the Effectiveness of Neural Ensembles for Image Classification with Small Datasets


Nov 29, 2021

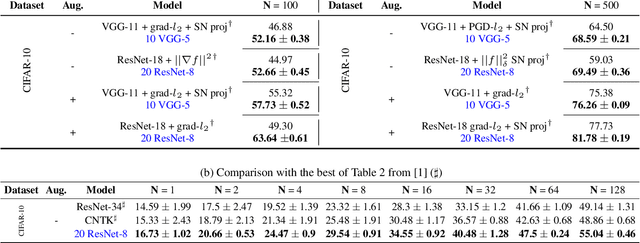

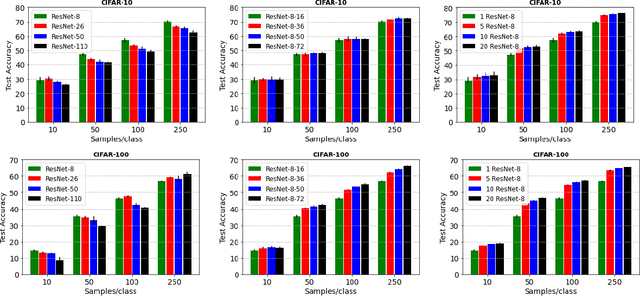

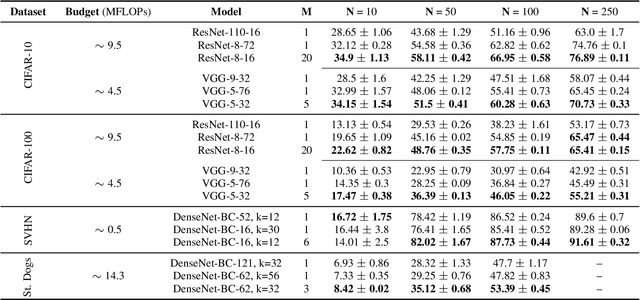

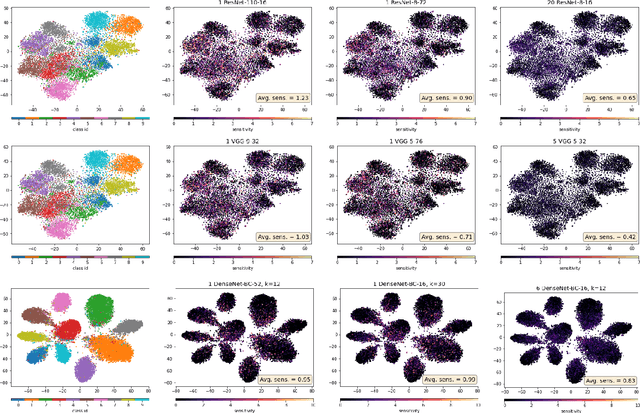

Deep neural networks are the gold standard for image classification, but they usually need large amounts of data to perform well. In this work, we focus on image classification problems with only a few labeled examples per class and improve data efficiency by using an ensemble of relatively small networks. Ours is the first broad study of the established concept of neural ensembling in small-data domains, with extensive validation on popular datasets and architectures. We compare ensembles of networks to their deeper or wider single-network competitors under a fixed total computational budget. We show that ensembling relatively shallow networks is a simple yet effective technique that generally outperforms current state-of-the-art approaches for learning from small datasets. Finally, we offer an interpretation of this result: neural ensembles are more sample efficient because they learn simpler functions.
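To make the comparison concrete, here is a minimal sketch of the general idea of ensembling under a fixed budget. It assumes that the members' softmax outputs are averaged, which is a common combination rule; the abstract does not specify the rule actually used. The function names and the FLOP figures are hypothetical and serve only as illustration.

```python
import math


def softmax(logits):
    """Turn one network's raw class scores into probabilities."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_predict(member_logits):
    """Average the members' softmax outputs for a single image.

    member_logits: one list of class logits per ensemble member.
    Returns (predicted_class_index, averaged_probabilities).
    Assumption: probability averaging; other combination rules exist.
    """
    probs = [softmax(logits) for logits in member_logits]
    n_members = len(probs)
    n_classes = len(probs[0])
    avg = [sum(p[c] for p in probs) / n_members for c in range(n_classes)]
    predicted = max(range(n_classes), key=lambda c: avg[c])
    return predicted, avg


def members_within_budget(single_net_cost, member_cost):
    """How many small networks fit in the cost of one large network.

    Both costs are in the same hypothetical unit (e.g. FLOPs per image).
    """
    return single_net_cost // member_cost


# Three small networks vote on one image with three classes.
logits_per_member = [
    [2.0, 0.0, 0.0],  # member 1 favors class 0
    [0.0, 1.0, 0.0],  # member 2 favors class 1
    [0.0, 1.5, 0.0],  # member 3 favors class 1
]
label, avg_probs = ensemble_predict(logits_per_member)
n_members = members_within_budget(1_000_000, 250_000)
```

In this toy example the averaged probabilities favor class 1, even though the first member is individually the most confident and votes for class 0. The budget helper reflects the kind of comparison described in the abstract: a single deeper or wider network against as many smaller networks as the same total cost allows.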
