On the Convergence and generalization of Physics Informed Neural Networks

Paper and Code

Apr 03, 2020

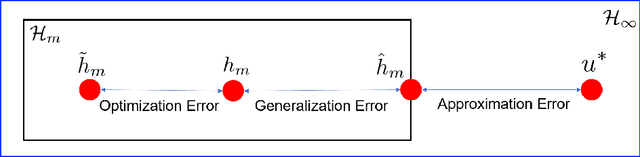

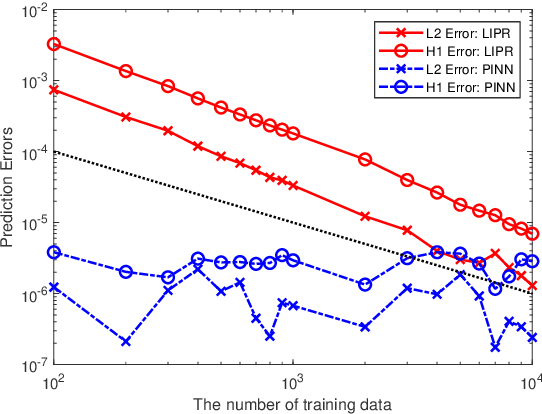

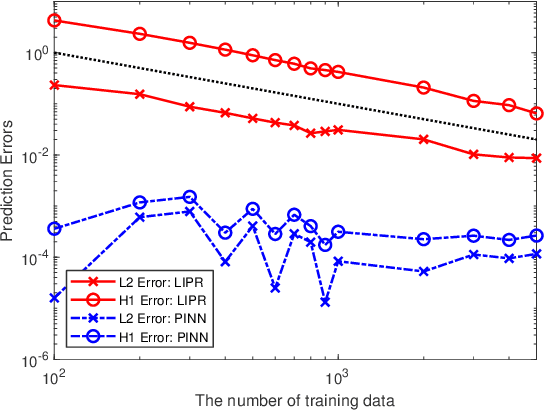

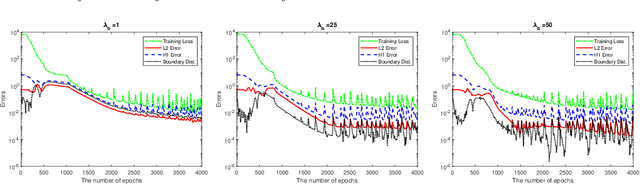

Physics informed neural networks (PINNs) are deep learning based techniques for solving partial differential equations (PDEs). Guided by data and physical laws, PINNs find a neural network that approximates the solution to a system of PDEs. Such a neural network is obtained by minimizing a loss function in which any prior knowledge of PDEs and data are encoded. Despite its remarkable empirical success, there is little theoretical justification for PINNs. In this paper, we establish a mathematical foundation of the PINNs methodology. As the number of data grows, PINNs generate a sequence of minimizers which correspond to a sequence of neural networks. We want to answer the question: Does the sequence of minimizers converge to the solution to the PDE? This question is also related to the generalization of PINNs. We consider two classes of PDEs: elliptic and parabolic. By adapting the Schuader approach, we show that the sequence of minimizers strongly converges to the PDE solution in $L^2$. Furthermore, we show that if each minimizer satisfies the initial/boundary conditions, the convergence mode can be improved to $H^1$. Computational examples are provided to illustrate our theoretical findings. To the best of our knowledge, this is the first theoretical work that shows the consistency of the PINNs methodology.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge