On the Arithmetic and Geometric Fusion of Beliefs for Distributed Inference


Apr 28, 2022

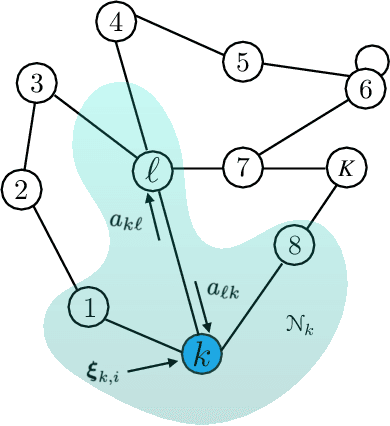

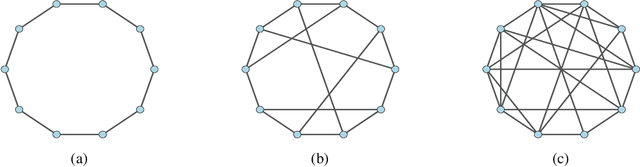

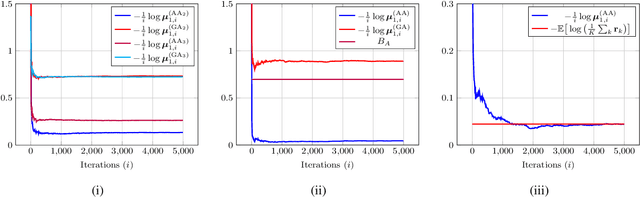

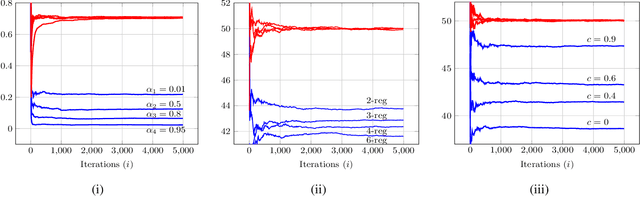

We study the asymptotic learning rates under linear and log-linear combination rules of belief vectors in a distributed hypothesis testing problem. We show that under both combination strategies, agents are able to learn the truth exponentially fast, with a faster rate under log-linear fusion. We examine the gap between the rates in terms of network connectivity and information diversity. We also provide closed-form expressions for special cases involving federated architectures and exchangeable networks.
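To make the two combination rules concrete, the following minimal sketch pools a few belief vectors once with a linear (arithmetic) rule and once with a log-linear (geometric) rule. It is an illustration only, not the paper's algorithm or experiments: the function names, the example beliefs, and the uniform combination weights are all assumptions chosen for this example, and the log-linear rule as written assumes strictly positive beliefs.

```python
import math

def arithmetic_fusion(beliefs, weights):
    """Linear pooling: weighted arithmetic mean of belief vectors.

    Assumes the weights are nonnegative and sum to 1, so the result
    is already a valid probability vector.
    """
    n_hyp = len(beliefs[0])
    return [sum(w * b[h] for w, b in zip(weights, beliefs))
            for h in range(n_hyp)]

def geometric_fusion(beliefs, weights):
    """Log-linear pooling: normalized weighted geometric mean.

    Assumes every belief entry is strictly positive (log is taken).
    """
    n_hyp = len(beliefs[0])
    # Weighted average of log-beliefs for each hypothesis.
    log_pool = [sum(w * math.log(b[h]) for w, b in zip(weights, beliefs))
                for h in range(n_hyp)]
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(log_pool)
    unnorm = [math.exp(v - m) for v in log_pool]
    total = sum(unnorm)
    return [u / total for u in unnorm]

# Hypothetical example: three agents, two hypotheses, uniform weights.
beliefs = [[0.9, 0.1], [0.6, 0.4], [0.5, 0.5]]
weights = [1 / 3, 1 / 3, 1 / 3]

linear = arithmetic_fusion(beliefs, weights)
loglinear = geometric_fusion(beliefs, weights)
print("linear pooling:    ", linear)
print("log-linear pooling:", loglinear)
```

In this particular example the log-linear rule places more mass on the first hypothesis than the linear rule does. That is a property of these example numbers and should not be read as a demonstration of the asymptotic rates studied in the paper.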

* Submitted for publication
