On the Adversarial Robustness of Causal Algorithmic Recourse

Paper and Code

Dec 21, 2021

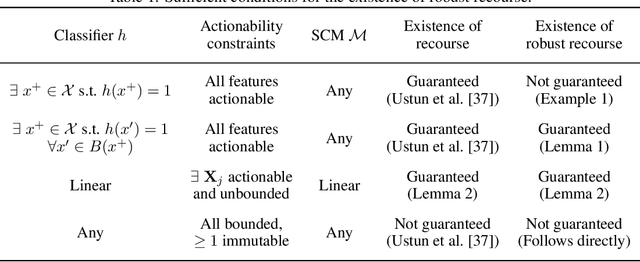

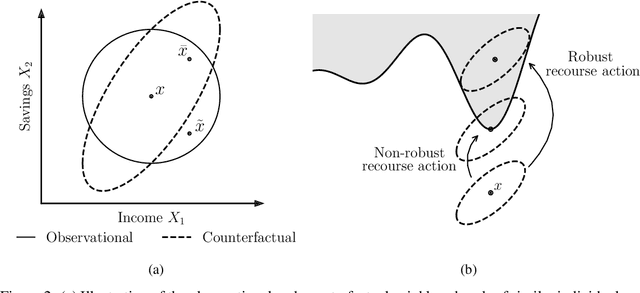

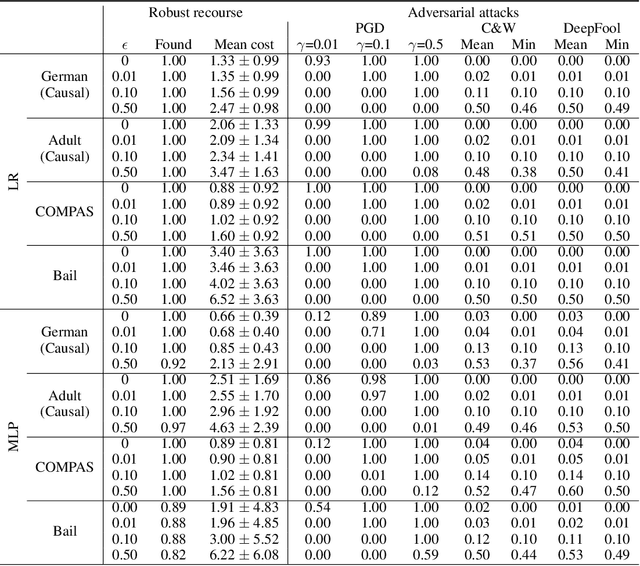

Algorithmic recourse seeks to provide actionable recommendations that help individuals overcome unfavorable outcomes produced by automated decision-making systems. Recourse recommendations should ideally be robust to reasonably small uncertainty in the features of the individual seeking recourse. In this work, we formulate the adversarially robust recourse problem and show that recourse methods offering minimally costly recourse fail to be robust. We then present methods for generating adversarially robust recourse in the linear and in the differentiable case. Ensuring that recourse is robust requires individuals to make more effort than they otherwise would have. To shift part of the burden of robustness from the decision-subject to the decision-maker, we propose a model regularizer that encourages the additional cost of seeking robust recourse to be low. We show that classifiers trained with our proposed model regularizer, which penalizes relying on unactionable features for prediction, offer potentially less effortful recourse.
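The claim that minimally costly recourse fails to be robust can be illustrated with a small sketch. This is not the paper's method: it assumes a linear classifier that is favorable when `w @ x + b >= 0`, an L2 action cost, features that can be changed independently (no causal model), and an L2 ball of radius `eps` as the uncertainty set. The function names and the numbers are hypothetical, chosen only for illustration.

```python
import numpy as np


def min_cost_recourse(x, w, b):
    """Smallest L2 action delta such that w @ (x + delta) + b >= 0."""
    score = w @ x + b
    if score >= 0:
        return np.zeros_like(x)
    # Project onto the decision boundary along the direction of w.
    return (-score / (w @ w)) * w


def robust_recourse(x, w, b, eps):
    """Smallest L2 action that stays favorable for every ||eta||_2 <= eps.

    The worst-case perturbation lowers the score by eps * ||w||_2,
    so the post-action score must be at least that margin.
    """
    margin = eps * np.linalg.norm(w)
    score = w @ x + b
    if score >= margin:
        return np.zeros_like(x)
    return ((margin - score) / (w @ w)) * w


def worst_case_score(x, w, b, eps):
    """Lowest score reachable by perturbing x within an L2 ball of radius eps."""
    return w @ x + b - eps * np.linalg.norm(w)


# Hypothetical individual and classifier (illustrative values only).
x = np.array([0.0, 0.0])
w = np.array([3.0, 4.0])
b = -5.0
eps = 0.5

delta_min = min_cost_recourse(x, w, b)
delta_rob = robust_recourse(x, w, b, eps)

print("min-cost action:", delta_min, "cost:", np.linalg.norm(delta_min))
print("robust action:  ", delta_rob, "cost:", np.linalg.norm(delta_rob))
print("worst case after min-cost action:", worst_case_score(x + delta_min, w, b, eps))
print("worst case after robust action:  ", worst_case_score(x + delta_rob, w, b, eps))
```

Under these assumptions the minimal-cost action lands exactly on the decision boundary, so any adverse perturbation within the ball flips the outcome, while the robust action pays a higher cost (an L2 norm of about 1.5 instead of 1.0 here) to keep the worst-case score non-negative. That extra cost is the additional effort the abstract refers to.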
