On Synchronization of Wireless Acoustic Sensor Networks in the Presence of Time-varying Sampling Rate Offsets and Speaker Changes


Oct 25, 2021

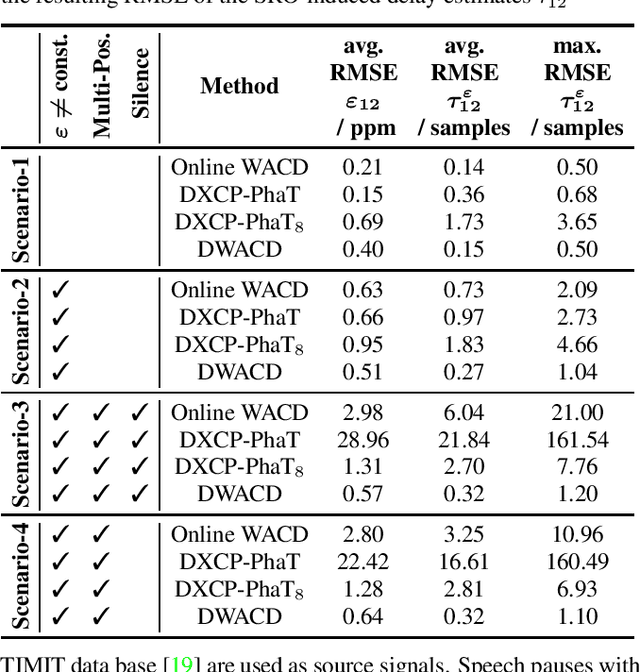

A wireless acoustic sensor network records audio signals with sampling time and sampling rate offsets between the audio streams if the analog-to-digital converters (ADCs) of the network devices are not synchronized. Here, we introduce a new sampling rate offset model to simulate time-varying sampling frequencies caused, for example, by temperature changes of ADC crystal oscillators, and propose an estimation algorithm that handles this dynamic aspect in combination with changing acoustic source positions. Furthermore, we show how deep-neural-network-based estimates of the distances between microphones and human speakers can be used to determine the sampling time offsets. This enables a synchronization of the audio streams that reflects the physical time differences of flight.
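To make the two offsets concrete, the following is a minimal Python sketch. It is not the estimation algorithm from the paper. It only illustrates the underlying arithmetic: a time-varying sampling rate offset (SRO), given in parts per million per sample, accumulates into a drift between two streams, and a sampling time offset (STO) is what remains of an observed inter-stream delay once the physical time difference of flight (TDOF) implied by two source-microphone distances is removed. The function names, the sign convention, and the speed of sound of 343 m/s are assumptions made for illustration. The distances stand in for the neural-network distance estimates mentioned above.

```python
from itertools import accumulate

SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at roughly 20 degrees Celsius


def accumulated_drift_samples(sro_ppm):
    """Cumulative drift (in samples) caused by a per-sample SRO trajectory.

    sro_ppm[k] is the SRO in parts per million at sample k. Each sample
    contributes sro_ppm[k] * 1e-6 samples of drift, so the drift after
    n samples is the running sum of those contributions.
    """
    return list(accumulate(eps * 1e-6 for eps in sro_ppm))


def tdof_samples(d_ref_m, d_other_m, fs_hz, c=SPEED_OF_SOUND_M_S):
    """Physical time difference of flight, in samples.

    Positive if the source is farther from the other microphone than
    from the reference microphone (assumed sign convention).
    """
    return (d_other_m - d_ref_m) / c * fs_hz


def sampling_time_offset_samples(observed_delay, d_ref_m, d_other_m, fs_hz,
                                 c=SPEED_OF_SOUND_M_S):
    """STO in samples: observed inter-stream delay minus the physical TDOF."""
    return observed_delay - tdof_samples(d_ref_m, d_other_m, fs_hz, c)


if __name__ == "__main__":
    fs = 16000  # sampling rate in Hz (illustrative)

    # A constant 100 ppm SRO over one second of audio.
    drift = accumulated_drift_samples([100.0] * fs)
    print(f"drift after 1 s: {drift[-1]:.3f} samples")

    # Source at 1.0 m from the reference and 2.715 m from the other microphone,
    # with an observed delay of 580 samples between the two streams.
    sto = sampling_time_offset_samples(580.0, 1.0, 2.715, fs)
    print(f"sampling time offset: {sto:.3f} samples")
```

Once the STO is removed from the observed delay, the remaining delay between the streams is the TDOF alone, which is the sense in which the synchronized streams reflect the physical time differences of flight.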
