On Representational Dissociation of Language and Arithmetic in Large Language Models

Feb 17, 2025

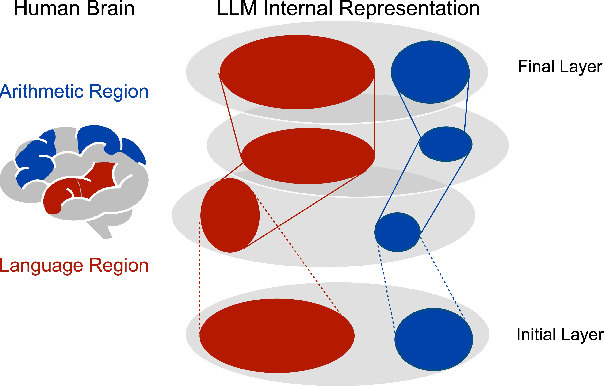

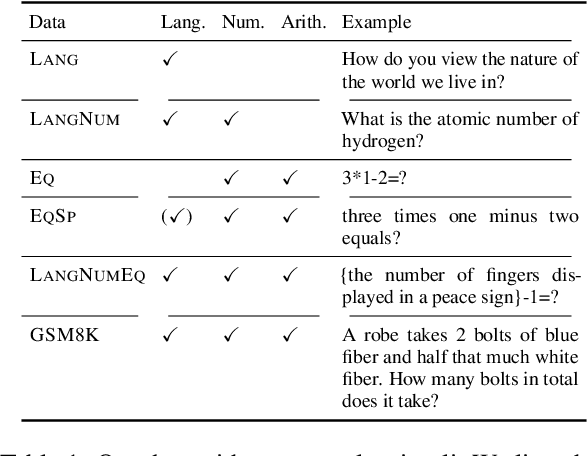

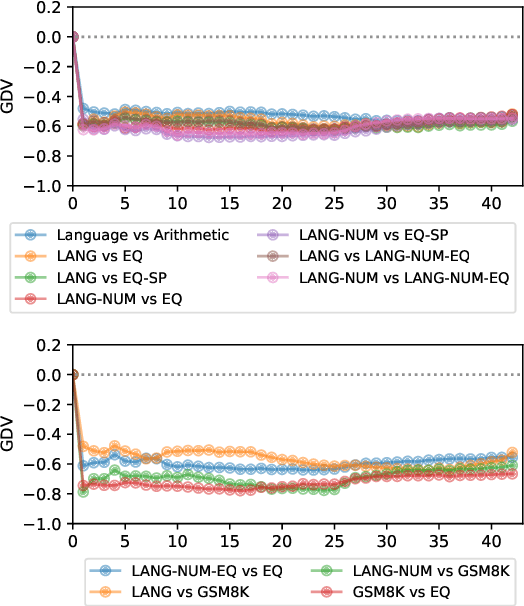

The relationship between language and (non-linguistic) thinking ability in humans has long been debated, and neuroscientific evidence from brain activity patterns has recently been brought to bear on it. This context naturally raises an interdisciplinary question: do large language models (LLMs) exhibit a similar language-thought dissociation? In this paper, as an initial foray, we explore this question by treating simple arithmetic skills (e.g., $1+2=$ ?) as a thinking ability and analyzing the geometry of their encoding in LLMs' representation space. Our experiments with linear classifiers and cluster separability tests demonstrate that simple arithmetic equations and general language input are encoded in completely separated regions of LLMs' internal representation space across all layers, a finding that also holds with more controlled stimuli (e.g., spelled-out equations). These results tentatively suggest that arithmetic reasoning is mapped to a region distinct from general language input, in line with neuroscientific observations of human brain activations, although we also point out that the geometric properties of these representations are somewhat cognitively implausible.
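To make the idea of a linear-classifier test concrete, here is a minimal sketch of a linear probe. It is not the paper's implementation. It makes three assumptions: synthetic Gaussian vectors stand in for LLM hidden states, a plain perceptron stands in for whatever linear classifier the authors used, and the dimensionality, cluster centers, and noise level are arbitrary illustrative choices. All function and variable names are hypothetical.

```python
import random

def make_cluster(center, n, noise, rng):
    """Sample n points around `center` with isotropic Gaussian noise."""
    return [[c + rng.gauss(0.0, noise) for c in center] for _ in range(n)]

def score(w, b, x):
    """Signed distance (up to scale) of x from the hyperplane w.x + b = 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train_perceptron(points, labels, epochs=50):
    """Fit a linear separator; labels must be +1 or -1."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(points, labels):
            if y * score(w, b, x) <= 0:  # misclassified: nudge the hyperplane
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:  # every training point is on the correct side
            break
    return w, b

def accuracy(w, b, points, labels):
    correct = sum(1 for x, y in zip(points, labels) if y * score(w, b, x) > 0)
    return correct / len(points)

rng = random.Random(0)
dim = 8  # assumed toy dimensionality; real hidden states are far larger

# Synthetic stand-ins: +1 = "arithmetic" inputs, -1 = "general language" inputs.
train_x = make_cluster([2.0] * dim, 100, 0.3, rng) + make_cluster([-2.0] * dim, 100, 0.3, rng)
train_y = [1] * 100 + [-1] * 100
test_x = make_cluster([2.0] * dim, 50, 0.3, rng) + make_cluster([-2.0] * dim, 50, 0.3, rng)
test_y = [1] * 50 + [-1] * 50

w, b = train_perceptron(train_x, train_y)
probe_accuracy = accuracy(w, b, test_x, test_y)
print(f"held-out probe accuracy: {probe_accuracy:.2f}")
```

In an actual analysis, the synthetic clusters would be replaced by hidden states extracted from one layer of the model, and the probe would be fitted separately for each layer. Held-out accuracy near 1.0 at every layer would correspond to the "separated regions" finding described above.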
