On Memorization in Probabilistic Deep Generative Models

Paper and Code

Jun 06, 2021

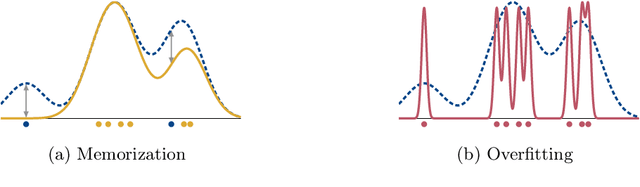

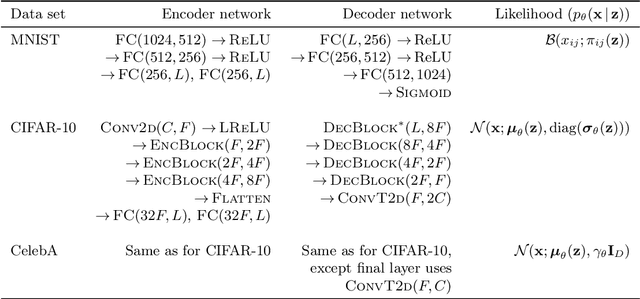

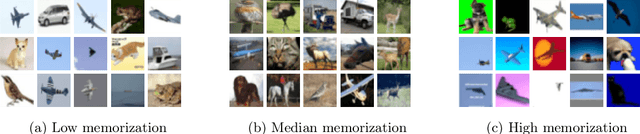

Recent advances in deep generative models have led to impressive results in a variety of application domains. Motivated by the possibility that deep learning models might memorize part of the input data, there have been increased efforts to understand how memorization can occur. In this work, we extend a recently proposed measure of memorization for supervised learning (Feldman, 2019) to the unsupervised density estimation problem and simplify the accompanying estimator. Next, we present an exploratory study that demonstrates how memorization can arise in probabilistic deep generative models, such as variational autoencoders. This reveals that the form of memorization to which these models are susceptible differs fundamentally from mode collapse and overfitting. Finally, we discuss several strategies that can be used to limit memorization in practice.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge