On Linear Convergence in Smooth Convex-Concave Bilinearly-Coupled Saddle-Point Optimization: Lower Bounds and Optimal Algorithms


Nov 21, 2024

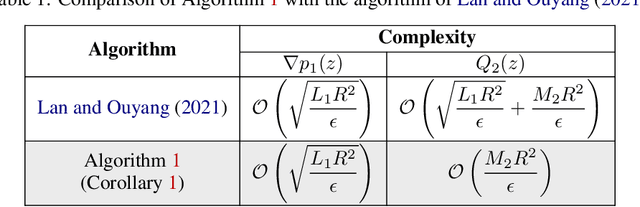

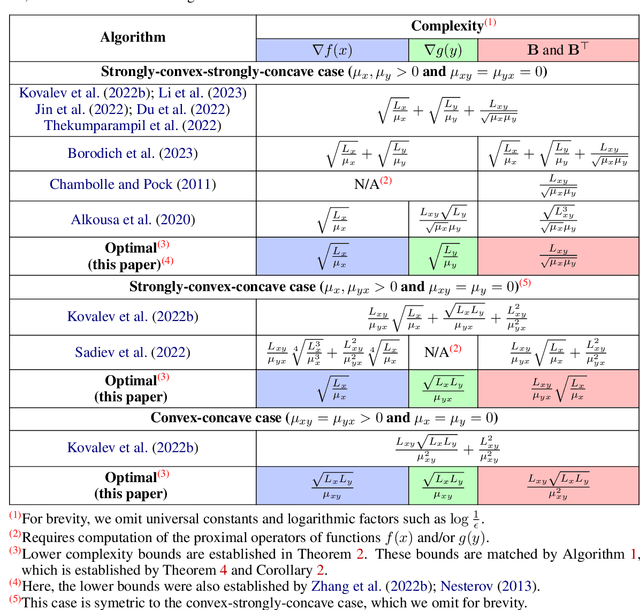

We revisit the smooth convex-concave bilinearly-coupled saddle-point problem of the form $\min_x\max_y f(x) + \langle y,\mathbf{B} x\rangle - g(y)$. In the highly specific case where each of the functions $f(x)$ and $g(y)$ is either affine or strongly convex, there exist lower bounds on the number of gradient evaluations and matrix-vector multiplications required to solve the problem, as well as matching optimal algorithms. A notable aspect of these algorithms is that they attain linear convergence, i.e., the number of iterations required to reach accuracy $\epsilon$ is proportional to $\log(1/\epsilon)$. However, the class of bilinearly-coupled saddle-point problems for which linear convergence is possible is much wider and can involve smooth non-strongly convex functions $f(x)$ and $g(y)$. Therefore, we develop the first lower complexity bounds and matching optimal linearly converging algorithms for this problem class. Our lower complexity bounds are much more general than the existing results in the literature, which they cover and unify. Moreover, our algorithm implements the separation of complexities, which, for the first time, enables the simultaneous achievement of both optimal gradient evaluation and matrix-vector multiplication complexities, resulting in the best theoretical performance to date.
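To make the problem form and the notion of linear convergence concrete, here is a minimal sketch on a toy instance. It does not implement the paper's algorithm: it runs the classical extragradient method as a simple baseline. The quadratic choices $f(x) = \frac{\mu_x}{2}\|x\|^2$ and $g(y) = \frac{\mu_y}{2}\|y\|^2$, the matrix `B`, the step size `eta`, and all function names are assumptions made for illustration. For this instance the unique saddle point is $(0, 0)$, so the distance to the solution is just the norm of the iterate.

```python
import math

# Illustrative toy instance (assumed, not from the paper):
# f(x) = (MU_X / 2) * ||x||^2, g(y) = (MU_Y / 2) * ||y||^2, coupling matrix B.
MU_X = 1.0
MU_Y = 1.0
B = [[2.0, 1.0], [0.0, 1.0]]


def matvec(m, v):
    """Compute m @ v for a list-of-rows matrix m."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


def matvec_t(m, v):
    """Compute m.T @ v for a list-of-rows matrix m."""
    return [sum(m[i][j] * v[i] for i in range(len(m))) for j in range(len(m[0]))]


def operator(x, y):
    """Return (grad_x L, -grad_y L) for L(x, y) = f(x) + <y, Bx> - g(y)."""
    bty = matvec_t(B, y)
    bx = matvec(B, x)
    gx = [MU_X * xi + bi for xi, bi in zip(x, bty)]  # grad f(x) + B^T y
    gy = [MU_Y * yi - bi for yi, bi in zip(y, bx)]   # grad g(y) - B x
    return gx, gy


def step(x, y, gx, gy, eta):
    """Move (x, y) by -eta along the operator direction (gx, gy)."""
    return ([xi - eta * g for xi, g in zip(x, gx)],
            [yi - eta * g for yi, g in zip(y, gy)])


def extragradient(x, y, eta=0.2, iters=100):
    """Run the extragradient method; return the final point and distances to (0, 0)."""
    distances = [math.sqrt(sum(v * v for v in x + y))]
    for _ in range(iters):
        gx, gy = operator(x, y)
        xh, yh = step(x, y, gx, gy, eta)   # extrapolation (look-ahead) point
        gx, gy = operator(xh, yh)
        x, y = step(x, y, gx, gy, eta)     # update using the look-ahead operator
        distances.append(math.sqrt(sum(v * v for v in x + y)))
    return x, y, distances


if __name__ == "__main__":
    _, _, dist = extragradient([1.0, -1.0], [0.5, 2.0])
    for k in (0, 25, 50, 75, 100):
        print(f"iter {k:3d}  distance to saddle point = {dist[k]:.3e}")
```

On this instance the distance shrinks by at least a fixed factor per iteration, which is what linear convergence means: reaching accuracy $\epsilon$ takes a number of iterations proportional to $\log(1/\epsilon)$. Each extragradient iteration spends two gradient evaluations of $f$ and $g$ together with four matrix-vector products with $\mathbf{B}$ or its transpose, so the two costs are tied together. The separation of complexities described above is about decoupling them.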
