On Exploiting Hitting Sets for Model Reconciliation

Dec 16, 2020

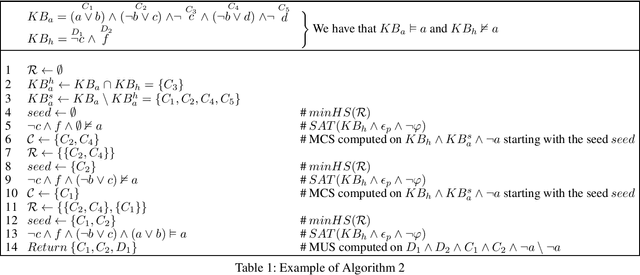

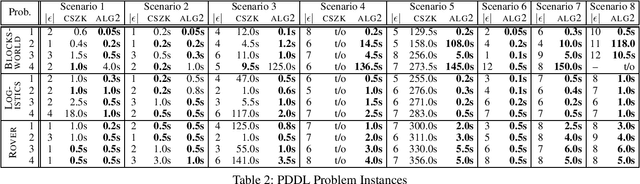

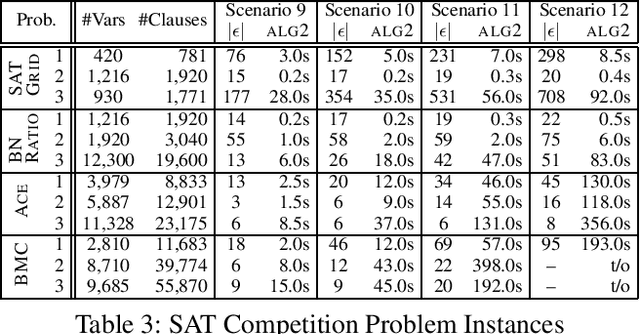

In human-aware planning, a planning agent may need to explain to a human user why its plan is optimal. A popular approach is model reconciliation, where the agent tries to reconcile the differences between its model and the human's model so that the plan is also optimal in the human's model. In this paper, we present a logic-based framework for model reconciliation that extends beyond the realm of planning. More specifically, given a knowledge base $KB_1$ that entails a formula $\varphi$ and a second knowledge base $KB_2$ that does not entail it, model reconciliation seeks an explanation, in the form of a cardinality-minimal subset of $KB_1$, whose integration into $KB_2$ makes $KB_2$ entail $\varphi$. Our approach, based on ideas originating in the analysis of inconsistencies, exploits the existing hitting set duality between minimal correction sets (MCSes) and minimal unsatisfiable sets (MUSes) to identify an appropriate explanation. However, unlike prior work targeting inconsistent formulas, which assumes a single knowledge base, our approach computes MCSes and MUSes over two distinct knowledge bases. We conclude with an empirical evaluation of the newly introduced approach on two kinds of benchmarks: planning instances, where it outperforms an existing state-of-the-art solver, and generic non-planning instances from recent SAT competitions, for which no other solver exists.
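To make the problem statement concrete, the following is a minimal brute-force sketch of the task defined above: finding a cardinality-minimal subset of $KB_1$ whose addition to $KB_2$ makes $\varphi$ entailed. It is not the hitting-set algorithm of the paper. The clause encoding (tuples of signed integers), the restriction of $\varphi$ to a single clause, and the function names `satisfiable`, `entails`, and `minimal_explanation` are all assumptions made for illustration, and the exhaustive search is only practical for tiny inputs.

```python
from itertools import combinations, product


def satisfiable(clauses, n_vars):
    """Brute-force SAT check over all assignments to variables 1..n_vars."""
    for bits in product([False, True], repeat=n_vars):
        # Literal v holds when bits[v-1] is True; literal -v holds when it is False.
        if all(any(bits[abs(lit) - 1] == (lit > 0) for lit in clause)
               for clause in clauses):
            return True
    return False


def entails(kb, phi, n_vars):
    """kb entails clause phi iff kb plus the negation of phi is unsatisfiable."""
    negated_phi = [(-lit,) for lit in phi]  # negated clause = one unit clause per literal
    return not satisfiable(list(kb) + negated_phi, n_vars)


def minimal_explanation(kb1, kb2, phi, n_vars):
    """Return a smallest subset E of kb1 such that kb2 + E entails phi.

    Subsets are tried in order of increasing size, so the first hit is
    cardinality-minimal. Returns None if kb1 itself does not entail phi
    together with kb2 (illustrative brute force, exponential in len(kb1)).
    """
    for size in range(len(kb1) + 1):
        for subset in combinations(kb1, size):
            if entails(list(kb2) + list(subset), phi, n_vars):
                return list(subset)
    return None


# Hypothetical example: variables 1=a, 2=b, 3=c.
kb1 = [(1,), (-1, 2), (-2, 3)]   # a, a -> b, b -> c
kb2 = [(1,)]                     # the second knowledge base only knows a
phi = (3,)                       # formula to explain: c

explanation = minimal_explanation(kb1, kb2, phi, n_vars=3)
```

In this example $KB_2$ already contains `a`, so the smallest explanation consists of the two implications `a -> b` and `b -> c`; no single clause of $KB_1$ suffices. A practical solver would replace the subset enumeration with SAT-based MCS and MUS computation, as the abstract describes.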
