On confidence intervals for precision matrices and the eigendecomposition of covariance matrices

Paper and Code

Aug 25, 2022

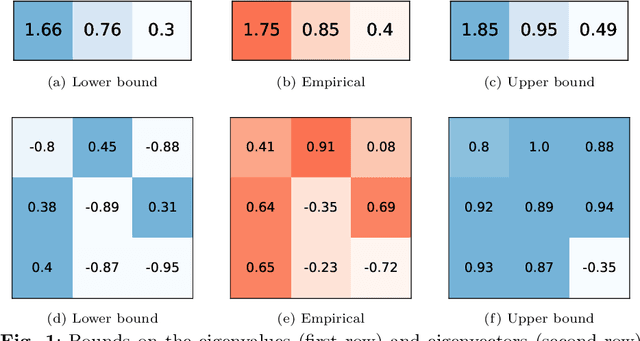

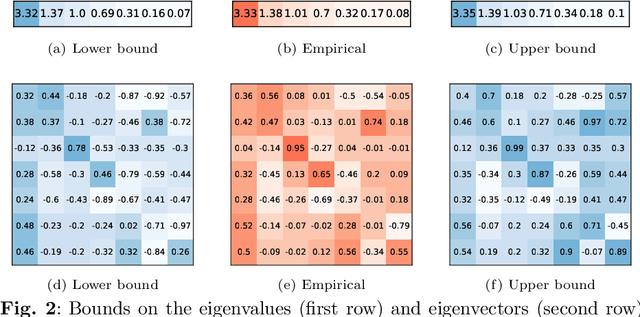

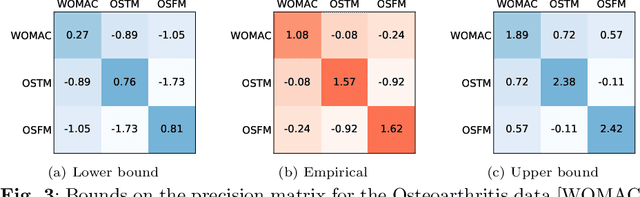

The eigendecomposition of a matrix is the central procedure in probabilistic models based on matrix factorization, such as principal component analysis and topic models. Quantifying the uncertainty of such a decomposition when it is computed from a finite-sample estimate is essential to reasoning under uncertainty with these models. This paper tackles the challenge of computing confidence bounds on the individual entries of the eigenvectors of a covariance matrix of fixed dimension. Moreover, we derive a method to bound the entries of the inverse covariance matrix, the so-called precision matrix. The assumptions behind our method are minimal: we require only that the covariance matrix exists and that its empirical estimator converges to the true covariance. We use the theory of U-statistics to bound the $L_2$ perturbation of the empirical covariance matrix. From this result, we obtain bounds on the eigenvectors using Weyl's theorem and the eigenvalue-eigenvector identity, and we derive confidence intervals on the entries of the precision matrix using matrix inversion perturbation bounds. As an application of these results, we demonstrate a new statistical test for non-zero entries of the precision matrix. We compare this test to the well-known Fisher-z test for partial correlations and demonstrate the soundness and scalability of the proposed test, as well as its application to real-world data from the medical and physics domains.
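
The two classical results named in the abstract can be checked numerically. The sketch below is not the paper's method and does not reproduce its U-statistic bounds; it only illustrates, on simulated Gaussian data, (a) Weyl's theorem, which bounds each eigenvalue shift by the spectral norm of the perturbation, and (b) the eigenvalue-eigenvector identity, which recovers squared eigenvector entries from the eigenvalues of a matrix and of its principal minors. The function names `weyl_gap` and `eigvec_sq_from_eigvals`, the example covariance `sigma`, and the sample size are all assumptions made for illustration.

```python
import numpy as np


def weyl_gap(sigma, sigma_hat):
    """Return (largest eigenvalue deviation, spectral norm of the perturbation).

    Weyl's theorem for symmetric matrices states that the first value
    never exceeds the second when eigenvalues are sorted consistently.
    """
    lam = np.linalg.eigvalsh(sigma)          # ascending order
    lam_hat = np.linalg.eigvalsh(sigma_hat)  # ascending order
    max_dev = np.max(np.abs(lam_hat - lam))
    spec_norm = np.linalg.norm(sigma_hat - sigma, ord=2)
    return max_dev, spec_norm


def eigvec_sq_from_eigvals(a):
    """Squared eigenvector entries from eigenvalues only.

    out[i, j] is the squared j-th entry of the i-th unit eigenvector of
    the symmetric matrix `a`, computed as
        prod_k (lam_i(a) - lam_k(M_j)) / prod_{k != i} (lam_i(a) - lam_k(a)),
    where M_j is `a` with row and column j removed.
    Assumes the eigenvalues of `a` are distinct.
    """
    n = a.shape[0]
    lam = np.linalg.eigvalsh(a)
    out = np.empty((n, n))
    for j in range(n):
        keep = [k for k in range(n) if k != j]
        mu = np.linalg.eigvalsh(a[np.ix_(keep, keep)])  # eigenvalues of minor M_j
        for i in range(n):
            num = np.prod(lam[i] - mu)
            den = np.prod(np.delete(lam[i] - lam, i))   # drop the k == i term
            out[i, j] = num / den
    return out


# Hypothetical example: a known covariance and its empirical estimate.
rng = np.random.default_rng(0)
sigma = np.array([[4.0, 1.0, 0.5],
                  [1.0, 2.0, 0.3],
                  [0.5, 0.3, 1.0]])
x = rng.multivariate_normal(np.zeros(3), sigma, size=2000)
sigma_hat = np.cov(x, rowvar=False)

max_dev, spec_norm = weyl_gap(sigma, sigma_hat)
sq_entries = eigvec_sq_from_eigvals(sigma_hat)
```

Here `max_dev` stays below `spec_norm`, and `sq_entries` agrees with the squared columns of the eigenvector matrix returned by `np.linalg.eigh(sigma_hat)`. Together these show how a bound on the covariance perturbation can propagate first to eigenvalues and then to individual eigenvector entries.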
