Offline Reinforcement Learning with Soft Behavior Regularization

Oct 14, 2021

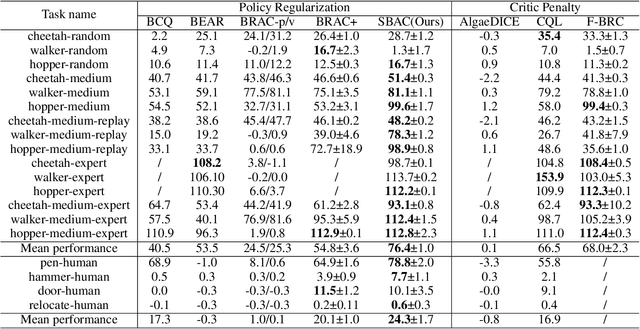

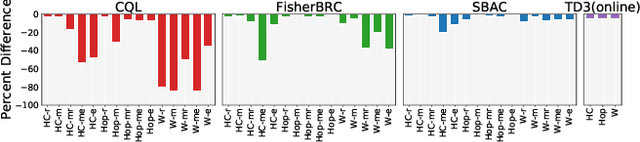

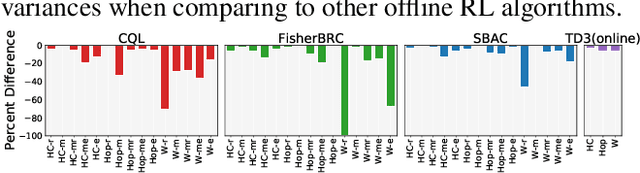

Most prior approaches to offline reinforcement learning (RL) use *behavior regularization*, typically augmenting existing off-policy actor-critic algorithms with a penalty that measures the divergence between the learned policy and the offline data. However, these approaches lack a guarantee of performance improvement over the behavior policy. In this work, starting from the performance difference between the learned policy and the behavior policy, we derive a new policy learning objective that can be used in the offline setting: the advantage function of the behavior policy multiplied by a state-marginal density ratio. We propose a practical way to compute the density ratio and demonstrate its equivalence to a state-dependent behavior regularization. Unlike the state-independent regularization used in prior approaches, this *soft* regularization allows more freedom of policy deviation at high-confidence states, leading to better performance and stability. We therefore call the resulting algorithm Soft Behavior-regularized Actor Critic (SBAC). Our experimental results show that SBAC matches or outperforms the state of the art on a set of continuous control locomotion and manipulation tasks.
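The objective described in the abstract can be illustrated with the standard performance difference identity, J(π) − J(π_b) = 1/(1−γ) · E_{s∼d^{π_b}}[ (d^π(s)/d^{π_b}(s)) · E_{a∼π}[A^{π_b}(s,a)] ]. The sketch below checks this identity numerically on a small random tabular MDP. It is not the SBAC algorithm: the MDP, the helper names `policy_eval` and `state_marginal`, and the exact computation of the density ratio are assumptions made for illustration only, whereas the abstract states that the paper proposes a practical way to compute that ratio.

```python
import numpy as np

def policy_eval(P, R, pi, gamma):
    """Exact V and Q for policy pi in a tabular MDP (hypothetical helper).
    P: (S, A, S) transition probs, R: (S, A) rewards, pi: (S, A) action probs."""
    S = P.shape[0]
    P_pi = np.einsum("sa,sat->st", pi, P)      # state-to-state kernel under pi
    r_pi = np.sum(pi * R, axis=1)              # expected one-step reward under pi
    V = np.linalg.solve(np.eye(S) - gamma * P_pi, r_pi)
    Q = R + gamma * (P @ V)                    # (S, A, S) @ (S,) -> (S, A)
    return V, Q

def state_marginal(P, pi, mu0, gamma):
    """Normalized discounted state occupancy d^pi (hypothetical helper)."""
    S = P.shape[0]
    P_pi = np.einsum("sa,sat->st", pi, P)
    # d^T = (1 - gamma) * mu0^T (I - gamma * P_pi)^{-1}
    return (1 - gamma) * np.linalg.solve((np.eye(S) - gamma * P_pi).T, mu0)

# Illustrative random MDP and policies (all values are assumptions).
rng = np.random.default_rng(0)
S, A, gamma = 4, 3, 0.9
P = rng.dirichlet(np.ones(S), size=(S, A))     # shape (S, A, S)
R = rng.normal(size=(S, A))
mu0 = np.full(S, 1.0 / S)                      # uniform initial state distribution
pi_b = rng.dirichlet(np.ones(A), size=S)       # behavior policy
pi = rng.dirichlet(np.ones(A), size=S)         # learned policy

V_b, Q_b = policy_eval(P, R, pi_b, gamma)
V_pi, _ = policy_eval(P, R, pi, gamma)
A_b = Q_b - V_b[:, None]                       # advantage of the behavior policy

d_b = state_marginal(P, pi_b, mu0, gamma)
d_pi = state_marginal(P, pi, mu0, gamma)
ratio = d_pi / d_b                             # state-marginal density ratio

# Left side: true performance difference J(pi) - J(pi_b).
lhs = mu0 @ V_pi - mu0 @ V_b
# Right side: ratio-weighted behavior advantage, averaged over behavior states.
rhs = np.sum(d_b * ratio * np.sum(pi * A_b, axis=1)) / (1 - gamma)

print(np.isclose(lhs, rhs))
```

In the offline setting only samples from the behavior policy's state distribution are available, which is why the expectation is written over d^{π_b} with the ratio as a per-state weight.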
