Offline Decentralized Multi-Agent Reinforcement Learning

Paper and Code

Aug 04, 2021

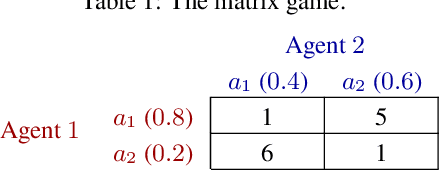

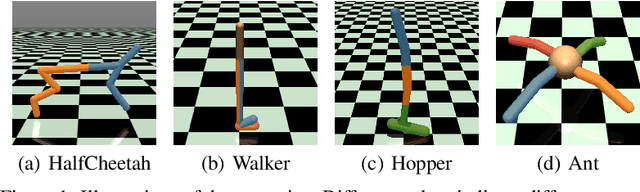

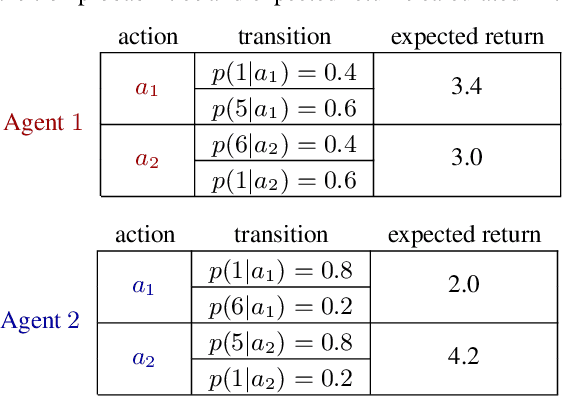

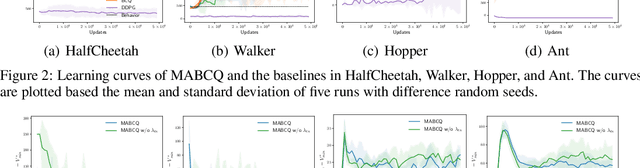

In many real-world cooperative multi-agent tasks, high cost and risk prevent agents from interacting with the environment to collect experience during learning, so they must learn from offline datasets. However, the transition probabilities computed from a dataset can differ substantially from those induced by the learned policies of the other agents, which creates large errors in value estimates. Moreover, the experience distributions of the agents' datasets may vary widely because of diverse behavior policies, causing large differences in value estimates between agents. Consequently, agents learn uncoordinated, suboptimal policies. In this paper, we propose MABCQ, which uses value deviation and transition normalization to modify the transition probabilities. Value deviation optimistically increases the transition probabilities of high-value next states, and transition normalization normalizes the biased transition probabilities of next states. Together, they encourage agents to discover potentially optimal, coordinated policies. Mathematically, we prove the convergence of Q-learning under the modified, non-stationary transition probabilities. Empirically, we show that MABCQ greatly outperforms baselines and reduces the difference in value estimates between agents.
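To make the two modifications concrete, here is a minimal tabular sketch in Python. It is not the paper's implementation: the function name `modified_transition_probs`, the inverse-probability form of transition normalization, the clipped relative form of value deviation, and the clipping bounds are all assumptions chosen for illustration. It only shows the general idea the abstract describes: flatten the dataset's biased next-state distribution, then shift probability mass toward high-value next states.

```python
import numpy as np

def modified_transition_probs(counts, next_values, eps=1e-8):
    """Reweight empirical next-state probabilities for one (state, action) pair.

    counts      : how often each next state follows (s, a) in the offline dataset
    next_values : current value estimate V(s') for each next state
    Both weighting formulas below are illustrative assumptions.
    """
    counts = np.asarray(counts, dtype=float)
    next_values = np.asarray(next_values, dtype=float)
    seen = counts > 0

    # Empirical transition probabilities from the dataset.
    p_hat = counts / counts.sum()

    # Transition normalization (assumed form): weight each observed next
    # state by 1 / p_hat so that observed next states become equally likely.
    tn = np.zeros_like(p_hat)
    tn[seen] = 1.0 / p_hat[seen]

    # Value deviation (assumed form): optimistic weight that grows with the
    # relative deviation of V(s') from the mean value of observed next states.
    mean_v = next_values[seen].mean()
    deviation = (next_values - mean_v) / (abs(mean_v) + eps)
    vd = 1.0 + np.clip(deviation, -0.9, 1.0)  # clipping keeps weights positive

    # Combine, zero out next states never seen in the dataset, renormalize.
    weights = p_hat * tn * vd
    weights[~seen] = 0.0
    return weights / weights.sum()

if __name__ == "__main__":
    # The first next state dominates the data, but the second has a higher value.
    print(modified_transition_probs([8, 1, 1, 0], [1.0, 3.0, 2.0, 5.0]))
```

In this toy case, the frequently observed but low-value next state ends up with the smallest modified probability among observed states, and the unobserved next state keeps probability zero, so the reweighting never moves mass outside the dataset's support.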
