Observability Analysis of Aided INS with Heterogeneous Features of Points, Lines and Planes


May 12, 2018

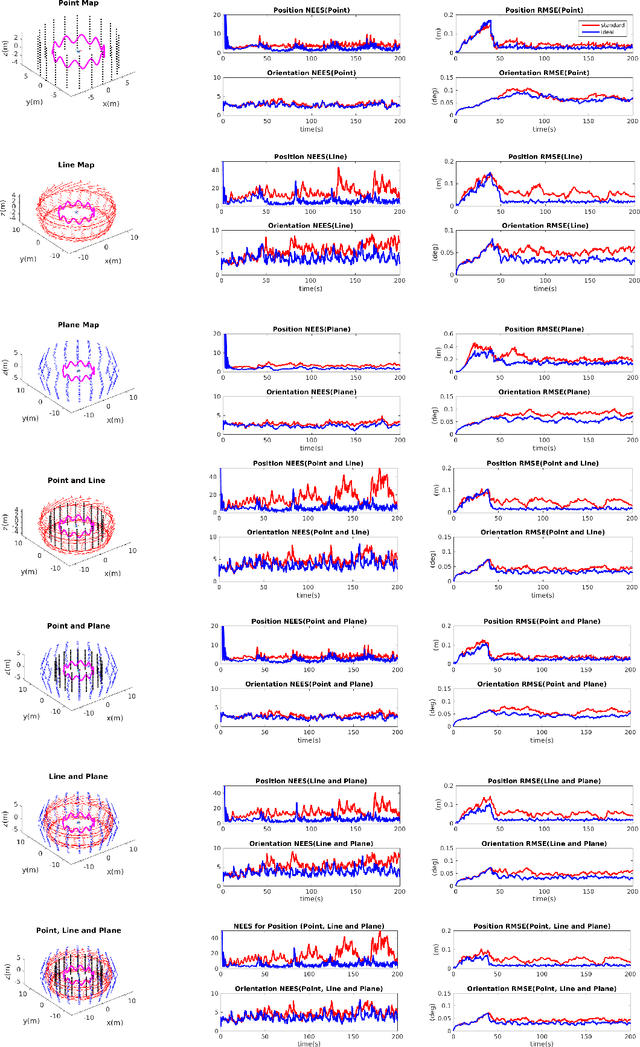

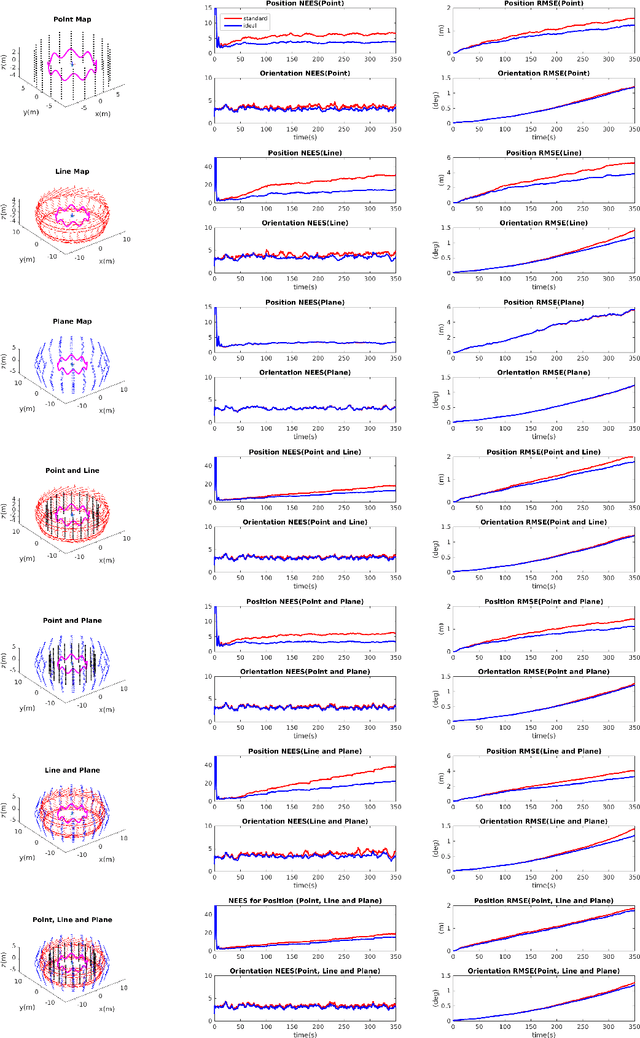

In this paper, we perform a thorough observability analysis of linearized inertial navigation systems (INS) aided by exteroceptive range and/or bearing sensors (such as cameras, LiDAR and sonar) with different geometric features (points, lines and planes). While the observability of vision-aided INS (VINS) with point features has been extensively studied in the literature, we analytically show that the general aided INS with point features preserves the same observability property: it has 4 unobservable directions, corresponding to the global yaw and the global position of the sensor platform. We further prove that the linearized aided INS has at least 5 unobservable directions with a single line feature and at least 7 with a single plane feature, and, for the first time, analytically derive the unobservable subspace for the case of multiple lines or planes. Building on this, we examine the observability of the linearized aided INS with different combinations of points, lines and planes, and show that, in general, the system preserves at least 4 unobservable directions; if global measurements are available, as expected, some of these directions become observable. For plane features, we propose a minimal closest point (CP) representation, and we also study in depth the effects on observability of the 5 degenerate motions we identify. To numerically validate our analysis, we develop and evaluate both EKF-based visual-inertial SLAM and visual-inertial odometry (VIO) using heterogeneous geometric features in Monte Carlo simulations.
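To make the closest point (CP) plane representation mentioned above concrete, the following is a minimal sketch, not the authors' implementation. It assumes a plane is given by a normal vector and a signed distance from the origin, and it encodes the plane as the single 3D point on the plane nearest the origin. The function names `plane_to_cp` and `cp_to_plane` are hypothetical and chosen only for illustration.

```python
import math


def plane_to_cp(normal, distance):
    """Encode a plane as its closest point to the origin.

    Assumption: `normal` is a nonzero 3-vector (normalized here) and
    `distance` is the signed distance from the origin to the plane
    along that normal, so the plane is {x : n_unit . x = distance}.
    """
    norm = math.sqrt(sum(c * c for c in normal))
    if norm == 0.0:
        raise ValueError("normal must be nonzero")
    # The closest point lies along the unit normal at the given distance.
    return tuple(distance * c / norm for c in normal)


def cp_to_plane(cp):
    """Recover (unit normal, non-negative distance) from a closest point."""
    dist = math.sqrt(sum(c * c for c in cp))
    if dist == 0.0:
        # A plane through the origin has CP = 0, which loses the normal.
        raise ValueError("CP is singular for a plane through the origin")
    return tuple(c / dist for c in cp), dist


cp = plane_to_cp((0.0, 0.0, 2.0), 3.0)
unit_normal, dist = cp_to_plane(cp)
```

The encoding uses three numbers for the three degrees of freedom of a plane, which is why it is minimal. Two caveats follow directly from the sketch: the representation degenerates when the plane passes through the origin, and a negative input distance is recovered as the same plane with a flipped normal and a positive distance.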
