Not All are Made Equal: Consistency of Weighted Averaging Estimators Under Active Learning


Oct 11, 2019

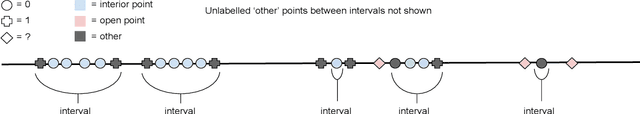

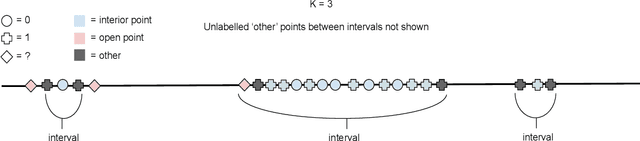

Active learning seeks to build the best possible model under a budget of labelled data by sequentially selecting the next point to label. However, the training set is then no longer *iid*, violating the conditions required by existing consistency results. Inspired by the success of Stone's Theorem, we aim to regain consistency for weighted averaging estimators under active learning. Based on ideas in Dasgupta (2012), our approach is to enforce a small amount of random sampling by running an augmented version of the underlying active learning algorithm. We generalize Stone's Theorem in the noise-free setting, proving consistency for well-known classifiers such as $k$-NN, histogram and kernel estimators under conditions which mirror classical results. However, in the presence of noise we can no longer deal with these estimators in a unified manner: for some, satisfying this condition is also sufficient for consistency in the noisy case, while for others we can achieve near-perfect inconsistency while this condition holds. Finally, we provide conditions for consistency in the presence of noise, which give insight into why these estimators can behave so differently under the combination of noise and active learning.
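As a rough illustration of the two ingredients named in the abstract, the sketch below mixes uniform random sampling into an arbitrary selection rule and evaluates a $k$-NN estimate as a weighted average of labels. It is not the paper's algorithm: the names `augmented_select`, `knn_estimate`, `base_select` and `eps`, the one-dimensional inputs, and the fixed mixing probability are all assumptions made for the example.

```python
import random


def augmented_select(pool, base_select, eps, rng):
    """Pick the next point to label (hypothetical helper).

    With probability eps, draw uniformly at random from the pool;
    otherwise defer to the underlying active learning rule base_select.
    """
    if rng.random() < eps:
        return rng.choice(pool)
    return base_select(pool)


def knn_estimate(x, labelled, k):
    """k-NN written as a weighted averaging estimator on 1-D inputs.

    Each of the k nearest labelled points gets weight 1/k and all
    others get weight 0, so the estimate is sum_i W_i(x) * y_i.
    """
    if not labelled:
        raise ValueError("need at least one labelled point")
    nearest = sorted(labelled, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


if __name__ == "__main__":
    rng = random.Random(0)
    pool = [0.1, 0.4, 0.9]
    # Assumed base rule for the demo: always query the smallest point.
    print(augmented_select(pool, lambda p: min(p), eps=0.1, rng=rng))
    print(knn_estimate(0.0, [(0.1, 1), (0.2, 0), (5.0, 1)], k=2))
```

Setting `eps` to 0 recovers the underlying selection rule unchanged, while `eps` equal to 1 reduces to purely random sampling of the pool.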
