Nonprehensile Riemannian Motion Predictive Control


Nov 15, 2021

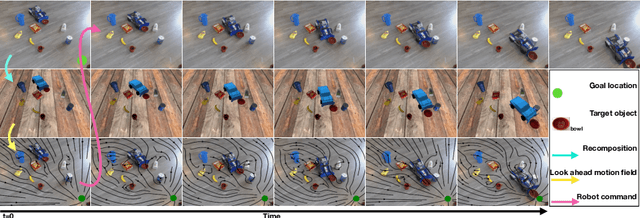

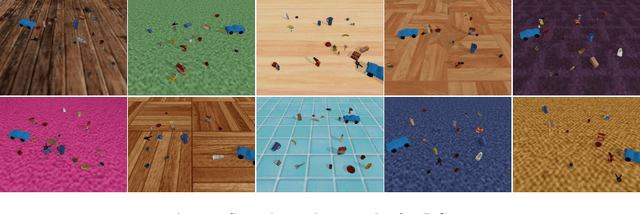

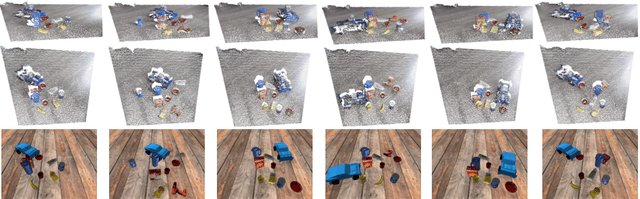

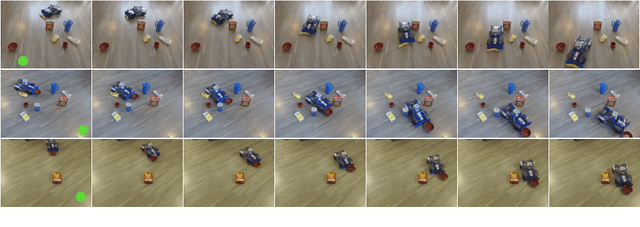

Nonprehensile manipulation involves long-horizon, underactuated object interactions and physical contact with different objects, both of which inherently introduce a high degree of uncertainty. In this work, we introduce a novel Real-to-Sim reward analysis technique, called Riemannian Motion Predictive Control (RMPC), to reliably imagine and predict the outcome of candidate actions for a real robotic platform. RMPC uses a Riemannian motion policy and a second-order dynamic model to compute the acceleration command and control the robot at every location on the surface. Our approach builds a 3D object-level recomposed model of the real scene, in which we can simulate the effect of different trajectories. The result is a closed-loop controller that reactively pushes objects in a continuous action space. We evaluate RMPC in experiments on a real robot platform as well as in simulation, and compare it against several baselines. We observe that RMPC is robust in cluttered and occluded environments and outperforms the baselines.
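To make the predictive-control idea concrete, the following is a minimal sketch and not the authors' implementation. It assumes a toy 2D point mass with a second-order (double-integrator) model, and it substitutes plain random shooting for the Riemannian motion policy and the 3D scene simulation described above. All names (`rollout`, `reward`, `plan_acceleration`) and parameter values are hypothetical.

```python
import random


def rollout(pos, vel, accels, dt):
    """Integrate a 2D double integrator over a sequence of acceleration commands."""
    x, y = pos
    vx, vy = vel
    for ax, ay in accels:
        # Second-order model: acceleration updates velocity, velocity updates position.
        vx += ax * dt
        vy += ay * dt
        x += vx * dt
        y += vy * dt
    return (x, y), (vx, vy)


def reward(pos, goal):
    """Assumed reward: negative squared distance to the goal."""
    return -((pos[0] - goal[0]) ** 2 + (pos[1] - goal[1]) ** 2)


def plan_acceleration(pos, vel, goal, horizon=10, samples=200,
                      dt=0.1, a_max=1.0, rng=None):
    """Simulate sampled action sequences and return the first action of the best one."""
    rng = rng or random.Random(0)
    best_reward, best_seq = float("-inf"), None
    for _ in range(samples):
        seq = [(rng.uniform(-a_max, a_max), rng.uniform(-a_max, a_max))
               for _ in range(horizon)]
        final_pos, _ = rollout(pos, vel, seq, dt)
        r = reward(final_pos, goal)
        if r > best_reward:
            best_reward, best_seq = r, seq
    return best_seq[0]


if __name__ == "__main__":
    # Closed loop: replan at every step and apply only the first acceleration.
    rng = random.Random(0)
    pos, vel, goal = (0.0, 0.0), (0.0, 0.0), (1.0, 0.5)
    for _ in range(20):
        a = plan_acceleration(pos, vel, goal, rng=rng)
        pos, vel = rollout(pos, vel, [a], 0.1)
    print(pos)
```

Replanning at every step is what makes the loop reactive: each command is chosen from the current state, so disturbances are absorbed at the next step rather than accumulating over an open-loop plan.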
