Nonparametric Variable Screening with Optimal Decision Stumps

Nov 05, 2020

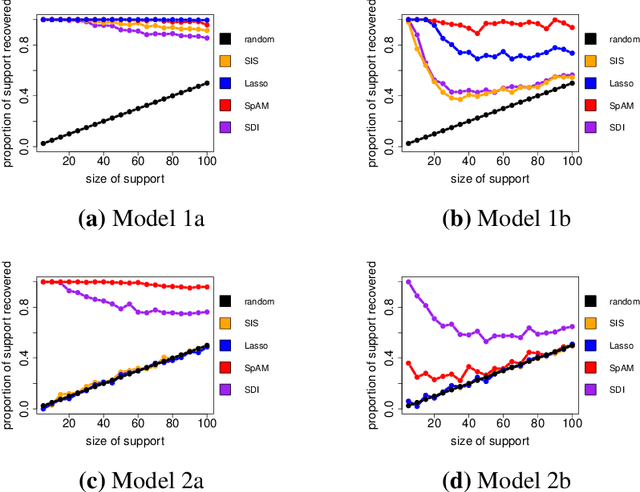

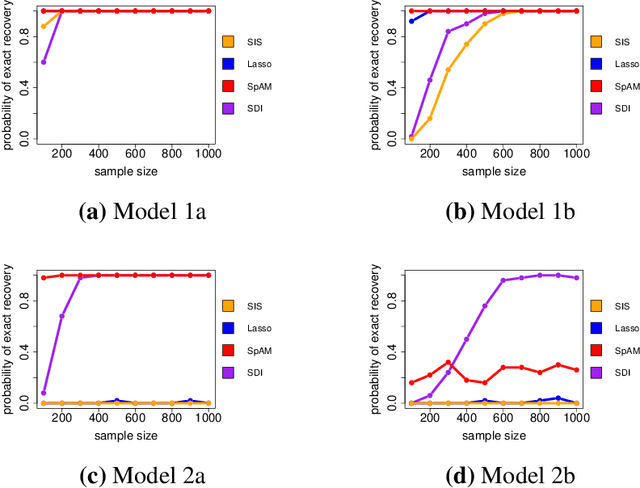

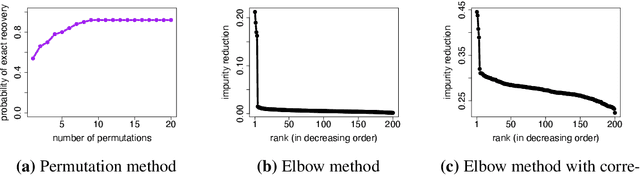

Decision trees and their ensembles are endowed with a rich set of diagnostic tools for ranking and screening input variables in a predictive model. One of the most commonly used in practice is the Mean Decrease in Impurity (MDI), which calculates an importance score for a variable by summing the weighted impurity reductions over all non-terminal nodes split with that variable. Despite the widespread use of tree-based variable importance measures such as MDI, their theoretical properties have been challenging to pin down and therefore remain largely unexplored. To address this gap between theory and practice, we derive rigorous finite-sample performance guarantees for variable ranking and selection in nonparametric models with MDI for a single-level CART decision tree (decision stump). We find that the marginal signal strength of each variable can be considerably weaker, and the ambient dimensionality considerably higher, than what state-of-the-art nonparametric variable selection methods require or allow. Furthermore, unlike previous marginal screening methods that attempt to directly estimate each marginal projection via a truncated basis expansion, the fitted model used here is a simple, parsimonious decision stump, thereby eliminating the need to tune the number of basis terms. Thus, surprisingly, even though decision stumps are highly inaccurate for estimation purposes, they can still be used to perform consistent model selection.
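To make the screening idea concrete, here is a minimal sketch of ranking variables by the impurity reduction of their best single split. It is not the authors' code: the function names `stump_impurity_reduction` and `screen_variables`, the squared-error (variance) impurity, the `top_k` cutoff, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np


def stump_impurity_reduction(x, y):
    """Largest decrease in variance impurity from a single split of y on x."""
    n = len(y)
    order = np.argsort(x, kind="stable")
    xs, ys = x[order], y[order]

    # Prefix sums give the left-child sum of y for every split position.
    csum = np.cumsum(ys)
    n_left = np.arange(1, n)
    n_right = n - n_left
    sum_left = csum[:-1]
    sum_right = csum[-1] - sum_left

    # For squared-error impurity, the decrease from a split equals
    # (n_L * n_R / n^2) * (mean_L - mean_R)^2.
    mean_gap = sum_left / n_left - sum_right / n_right
    gain = (n_left * n_right / n**2) * mean_gap**2

    # A split is only valid between two distinct values of x.
    valid = xs[1:] > xs[:-1]
    if not valid.any():
        return 0.0
    return float(gain[valid].max())


def screen_variables(X, y, top_k):
    """Rank the columns of X by stump impurity reduction; keep the top_k (assumed cutoff)."""
    scores = np.array(
        [stump_impurity_reduction(X[:, j], y) for j in range(X.shape[1])]
    )
    ranking = np.argsort(-scores, kind="stable")
    return ranking[:top_k], scores


# Illustrative synthetic data (an assumption): only columns 0 and 3 carry signal.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(500, 50))
y = 2.0 * np.sin(np.pi * X[:, 0]) + 3.0 * X[:, 3] + 0.5 * rng.standard_normal(500)

selected, scores = screen_variables(X, y, top_k=2)
print("selected columns:", sorted(selected.tolist()))
```

Each variable is scored independently, so the cost is one sort per column and no basis size needs tuning. How to choose the cutoff in practice (here a fixed `top_k`) is left open in this sketch.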