Nonconvex Demixing From Bilinear Measurements


Sep 19, 2018

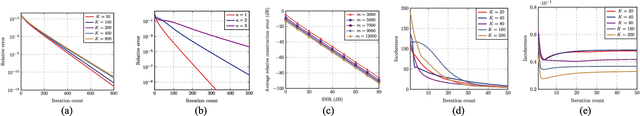

We consider the problem of demixing a sequence of source signals from the sum of noisy bilinear measurements. This is a generalized mathematical model for blind demixing with blind deconvolution, which arises across dictionary learning, image processing, and communications. However, state-of-the-art convex methods for blind demixing via semidefinite programming are computationally infeasible for large-scale problems. Although existing nonconvex algorithms address the scaling issue, they normally require proper regularization to establish optimality guarantees. This additional regularization introduces tedious algorithmic parameters and yields pessimistic convergence rates with conservative step sizes. To address these limitations, we develop a provable nonconvex demixing procedure via Wirtinger flow, much like vanilla gradient descent, that achieves a regularization-free fast convergence rate with an aggressive step size, together with computational optimality guarantees. This is achieved by exploiting the benign geometry of the blind demixing problem, which reveals that Wirtinger flow keeps the regularization-free iterates within a region of strong convexity and qualified level of smoothness, where the step size can be chosen aggressively.
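To make the idea of regularization-free Wirtinger flow concrete, here is a minimal NumPy sketch of plain gradient descent on a least-squares loss over bilinear measurements. The abstract does not state the measurement model, so the form used here, y_j = sum_i (b_j . h_i)(a_ij . conj(x_i)), is an assumption, as are all names (`residual`, `loss`, `wirtinger_step`), the problem sizes, and the step size. The starting point is a perturbed copy of the ground truth, used only as a stand-in for whatever initialization the paper employs.

```python
import numpy as np

def residual(h, x, B, A, y):
    """Residual of the assumed model y_j = sum_i (B[j] . h[i]) * (A[i, j] . conj(x[i])).

    Shapes: h (s, K), x (s, N), B (m, K), A (s, m, N), y (m,).
    """
    u = h @ B.T                                   # u[i, j] = B[j] . h[i]
    v = np.einsum("imn,in->im", A, x.conj())      # v[i, j] = A[i, j] . conj(x[i])
    return (u * v).sum(axis=0) - y, u, v

def loss(h, x, B, A, y):
    """Sum of squared residual magnitudes (no regularization term)."""
    r, _, _ = residual(h, x, B, A, y)
    return float(np.sum(np.abs(r) ** 2))

def wirtinger_step(h, x, B, A, y, step):
    """One gradient step using the Wirtinger derivatives w.r.t. conj(h) and conj(x)."""
    r, u, v = residual(h, x, B, A, y)
    grad_h = (r * v.conj()) @ B.conj()                  # shape (s, K)
    grad_x = np.einsum("im,imn->in", r.conj() * u, A)   # shape (s, N)
    return h - step * grad_h, x - step * grad_x

rng = np.random.default_rng(0)
s, K, N, m = 2, 4, 4, 200   # hypothetical sizes: sources, dims of h and x, measurements

def cgauss(*shape):
    """Standard complex Gaussian samples."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

B = cgauss(m, K) / np.sqrt(m)    # assumed random design, scaled so B^H B is near identity
A = cgauss(s, m, N)

h_true = cgauss(s, K)
h_true /= np.linalg.norm(h_true, axis=1, keepdims=True)
x_true = cgauss(s, N)
x_true /= np.linalg.norm(x_true, axis=1, keepdims=True)

# Noiseless measurements generated from the ground truth.
y, _, _ = residual(h_true, x_true, B, A, np.zeros(m))

# Stand-in initialization: ground truth plus a small perturbation (an assumption).
h = h_true + 0.1 * cgauss(s, K)
x = x_true + 0.1 * cgauss(s, N)

losses = [loss(h, x, B, A, y)]
for _ in range(300):
    h, x = wirtinger_step(h, x, B, A, y, step=0.05)   # fixed, hand-picked step size
    losses.append(loss(h, x, B, A, y))

print(f"initial loss {losses[0]:.3e}, final loss {losses[-1]:.3e}")
```

The update contains no regularizer or projection: each iteration is a plain gradient step on the loss, which is the property the abstract emphasizes. The fixed step of 0.05 is chosen conservatively for this toy problem and says nothing about the step sizes analyzed in the paper.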
