Noisy Softplus: an activation function that enables SNNs to be trained as ANNs


Mar 31, 2017

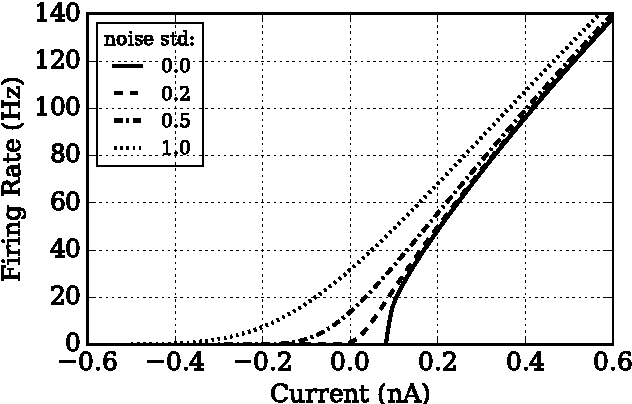

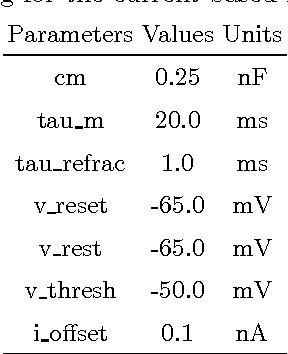

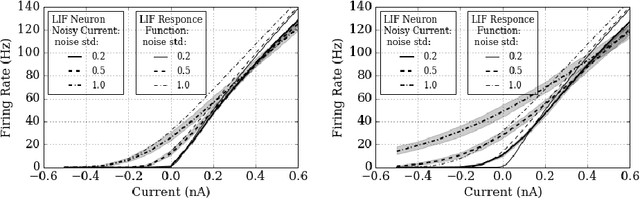

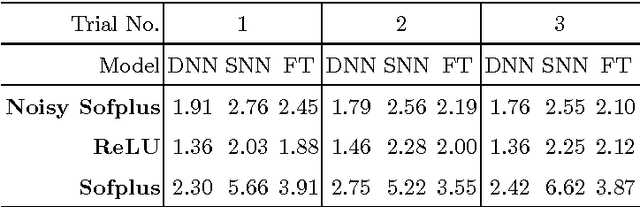

We extend the previously proposed activation function, Noisy Softplus, so that it fits the training of multi-layer spiking neural networks (SNNs). As a result, any ANN employing Noisy Softplus neurons, even one with a deep architecture, can be trained with a conventional algorithm such as backpropagation (BP), and the trained weights can be used directly in the spiking version of the same network without any conversion. Furthermore, the training method generalises to other activation units, for instance Rectified Linear Units (ReLU), to train deep SNNs off-line. This research provides an effective approach to SNN training, with the aim of increasing the classification accuracy of SNNs with biological characteristics and closing the performance gap between SNNs and ANNs.
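The abstract does not state the activation function itself. As a minimal sketch, assuming the form usually given for Noisy Softplus, y = k·σ·log(1 + exp(x / (k·σ))), where x is the mean input, σ is the input noise level and k is a shape parameter, the unit and the gradient that backpropagation would use might look like this. The function names and the default `k=0.2` are hypothetical choices for illustration, not values taken from this page.

```python
import math


def noisy_softplus(x, sigma, k=0.2):
    """Assumed Noisy Softplus form: k*sigma*log(1 + exp(x / (k*sigma))).

    x     -- mean input to the unit
    sigma -- noise level of the input (must be > 0)
    k     -- shape parameter (hypothetical default, not from the source)
    """
    scale = k * sigma
    z = x / scale
    # Numerically stable softplus: log(1 + e^z) = max(z, 0) + log1p(e^-|z|)
    return scale * (max(z, 0.0) + math.log1p(math.exp(-abs(z))))


def noisy_softplus_grad(x, sigma, k=0.2):
    """Derivative with respect to x: the logistic function of x / (k*sigma)."""
    z = x / (k * sigma)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0, so this cannot overflow
    return ez / (1.0 + ez)


if __name__ == "__main__":
    # Compare a low-noise and a high-noise setting over a few inputs.
    for x in (-1.0, 0.0, 1.0):
        print(x, noisy_softplus(x, sigma=0.1), noisy_softplus(x, sigma=1.0))
```

Under this assumed form, the curve approaches ReLU as σ shrinks and becomes smoother as σ grows, which is consistent with the abstract's remark that the training method generalises to ReLU units.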
