Noise Injection as a Probe of Deep Learning Dynamics

Paper and Code

Oct 24, 2022

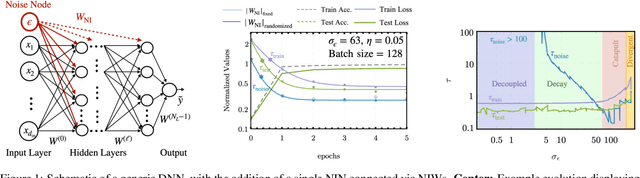

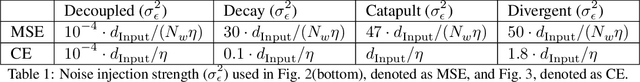

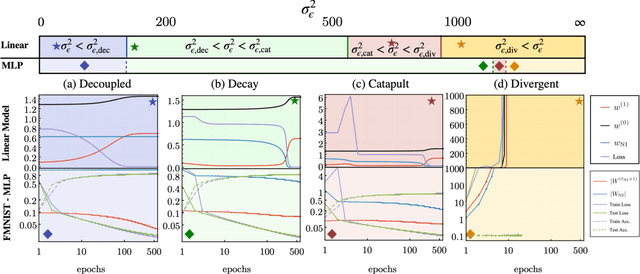

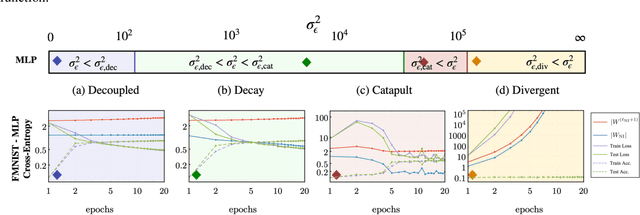

We propose a new method to probe the learning mechanism of Deep Neural Networks (DNN) by perturbing the system using Noise Injection Nodes (NINs). These nodes inject uncorrelated noise via additional optimizable weights to existing feed-forward network architectures, without changing the optimization algorithm. We find that the system displays distinct phases during training, dictated by the scale of injected noise. We first derive expressions for the dynamics of the network and utilize a simple linear model as a test case. We find that in some cases, the evolution of the noise nodes is similar to that of the unperturbed loss, thus indicating the possibility of using NINs to learn more about the full system in the future.

* 11 pages, 3 figures

View paper on

OpenReview

OpenReview

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge