Nipping in the Bud: Detection, Diffusion and Mitigation of Hate Speech on Social Media

Jan 04, 2022

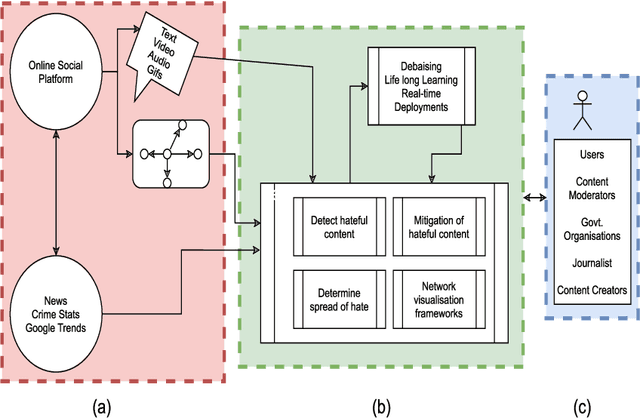

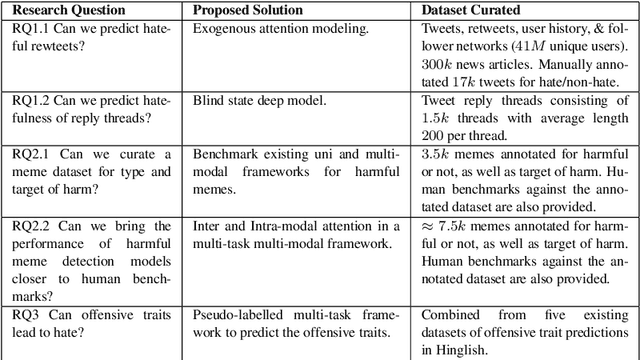

With the proliferation of social media, hate speech has become a major crisis. Hateful content can spread quickly and create an environment of distress and hostility. Further, what is considered hateful is contextual and varies with time. While online hate speech reduces the ability of already marginalised groups to participate freely in discussion, offline hate speech leads to hate crimes and violence against individuals and communities. The multifaceted nature of hate speech and its real-world impact have already piqued the interest of the data mining and machine learning communities. Despite the community's best efforts, hate speech remains an elusive problem for researchers and practitioners alike. This article presents the methodological challenges that hinder building automated hate mitigation systems. These challenges inspired our work in the broader area of combating hateful content on the web. We discuss a series of our proposed solutions to limit the spread of hate speech on social media.
