New Steps on the Exact Learning of CNF

Paper and Code

Sep 10, 2016

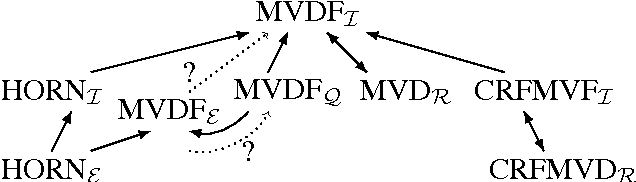

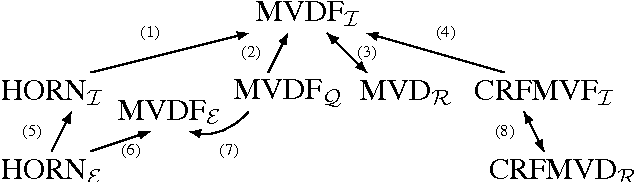

A major problem in computational learning theory is whether the class of formulas in conjunctive normal form (CNF) is efficiently learnable. Although it is known that this class cannot be polynomially learned using either membership or equivalence queries alone, it is open whether CNF can be polynomially learned using both types of queries. One of the most important results concerning a restriction of the class CNF is that propositional Horn formulas are polynomial time learnable in Angluin's exact learning model with membership and equivalence queries. In this work we push this boundary and show that the class of multivalued dependency formulas (MVDF) is polynomially learnable from interpretations. We then provide a notion of reduction between learning problems in Angluin's model, showing that a transformation of the algorithm suffices to efficiently learn multivalued database dependencies from data relations. We also show via reductions that our main result extends well known previous results and allows us to find alternative solutions for them.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge