Never a Dull Moment: Distributional Properties as a Baseline for Time-Series Classification

Paper and Code

Mar 31, 2023

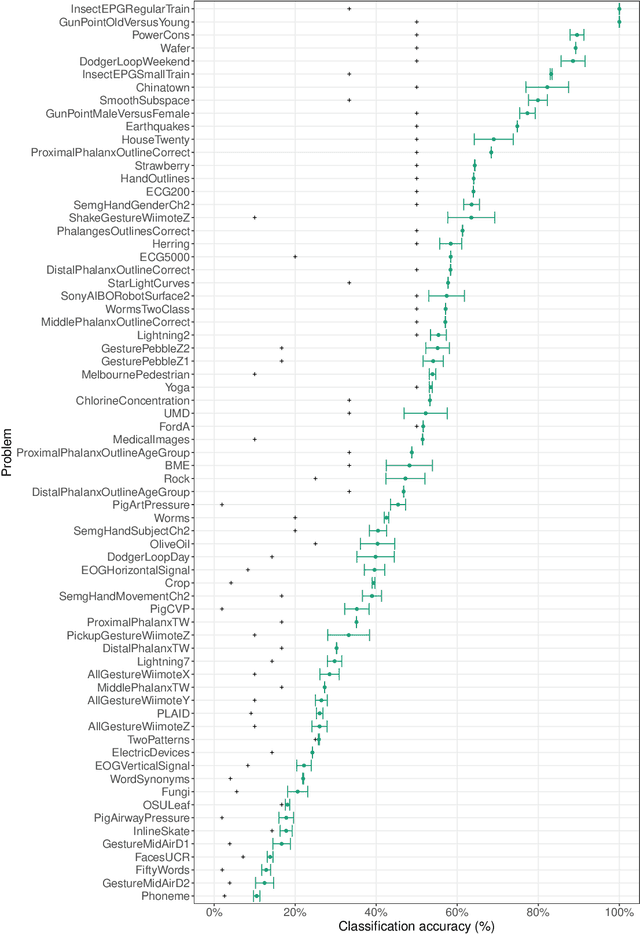

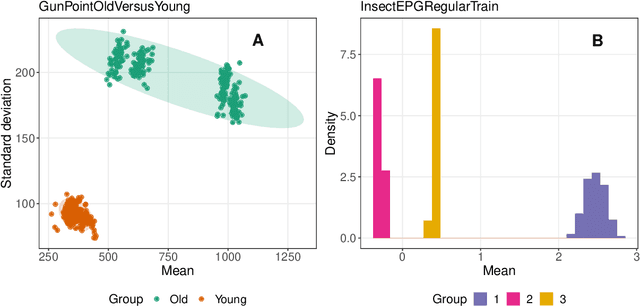

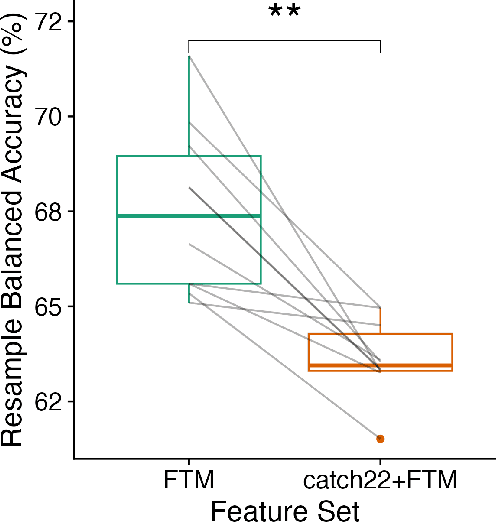

The variety of complex algorithmic approaches for tackling time-series classification problems has grown considerably over the past decades, including the development of sophisticated but challenging-to-interpret deep-learning-based methods. But without comparison to simpler methods it can be difficult to determine when such complexity is required to obtain strong performance on a given problem. Here we evaluate the performance of an extremely simple classification approach -- a linear classifier in the space of two simple features that ignore the sequential ordering of the data: the mean and standard deviation of time-series values. Across a large repository of 128 univariate time-series classification problems, this simple distributional moment-based approach outperformed chance on 69 problems, and reached 100% accuracy on two problems. With a neuroimaging time-series case study, we find that a simple linear model based on the mean and standard deviation performs better at classifying individuals with schizophrenia than a model that additionally includes features of the time-series dynamics. Comparing the performance of simple distributional features of a time series provides important context for interpreting the performance of complex time-series classification models, which may not always be required to obtain high accuracy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge