Neurosymbolic Graph Enrichment for Grounded World Models


Nov 19, 2024

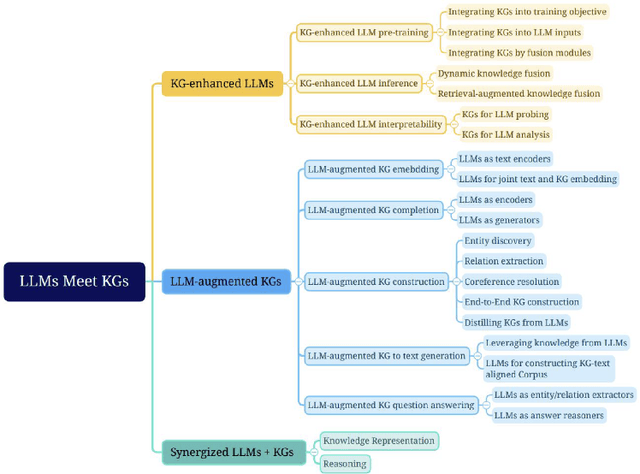

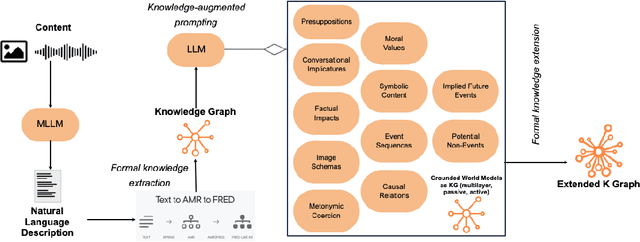

Developing artificial intelligence systems that can understand and reason about complex real-world scenarios remains a significant challenge. In this work, we present a novel approach that enhances and exploits the reactive capability of large language models (LLMs) to address complex problems and interpret deeply contextual real-world meaning. We introduce a method and a tool for creating a multimodal, knowledge-augmented formal representation of meaning that combines the strengths of LLMs with structured semantic representations. Our method takes an image as input and uses state-of-the-art LLMs to generate a natural language description. This description is then transformed into an Abstract Meaning Representation (AMR) graph, which is formalized and enriched with logical design patterns and layered semantics derived from linguistic and factual knowledge bases. The resulting graph is fed back into the LLM to be extended with implicit knowledge activated by complex heuristic learning, including semantic implicatures, moral values, embodied cognition, and metaphorical representations. By bridging the gap between unstructured language models and formal semantic structures, our method opens new avenues for tackling intricate problems in natural language understanding and reasoning.
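To make the data flow in the abstract concrete, the following is a minimal sketch of the four stages (image description, AMR parsing, knowledge-base enrichment, LLM extension) as a chain of functions over a graph of triples. It is not the authors' tool. Every name here (`MeaningGraph`, `run_pipeline`, the stage callables, the `ex:` relations) is hypothetical, and the stages are stubs returning toy data rather than calls to a real LLM, AMR parser, or knowledge base.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Tuple

# A graph edge as (source, relation, target). Illustrative encoding only.
Triple = Tuple[str, str, str]


@dataclass
class MeaningGraph:
    """Hypothetical container for the progressively enriched graph."""
    triples: List[Triple] = field(default_factory=list)

    def extend(self, new_triples: Iterable[Triple]) -> "MeaningGraph":
        # Append only unseen triples, preserving insertion order.
        for triple in new_triples:
            if triple not in self.triples:
                self.triples.append(triple)
        return self


def run_pipeline(
    image: bytes,
    describe: Callable[[bytes], str],
    parse_amr: Callable[[str], List[Triple]],
    enrich: Callable[[MeaningGraph], List[Triple]],
    extend_with_llm: Callable[[MeaningGraph], List[Triple]],
) -> MeaningGraph:
    """Chain the stages named in the abstract; each stage is injected."""
    description = describe(image)                          # image -> text
    graph = MeaningGraph().extend(parse_amr(description))  # text -> AMR-style graph
    graph.extend(enrich(graph))                            # add knowledge-base layers
    graph.extend(extend_with_llm(graph))                   # add implicit knowledge
    return graph


# Stub stages with toy data (assumptions, not real model or parser output).
def describe(image: bytes) -> str:
    return "A dog sits on a bench."


def parse_amr(description: str) -> List[Triple]:
    return [("s", ":instance", "sit-01"),
            ("s", ":ARG1", "d"),
            ("d", ":instance", "dog")]


def enrich(graph: MeaningGraph) -> List[Triple]:
    return [("d", "ex:type", "ex:Animal")]


def extend_with_llm(graph: MeaningGraph) -> List[Triple]:
    return [("s", "ex:evokes", "ex:Rest")]


graph = run_pipeline(b"", describe, parse_amr, enrich, extend_with_llm)
for triple in graph.triples:
    print(triple)
```

Passing the stages in as callables keeps the sketch independent of any particular model or parser, so each step could be swapped for a real component without changing the control flow.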
