NeuralTailor: Reconstructing Sewing Pattern Structures from 3D Point Clouds of Garments

Jan 31, 2022

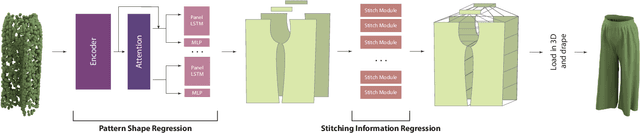

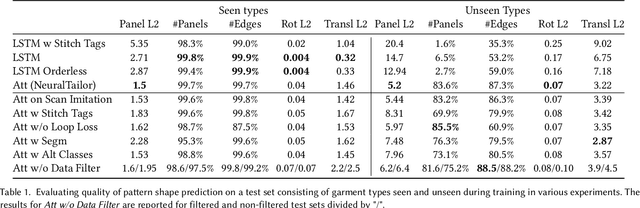

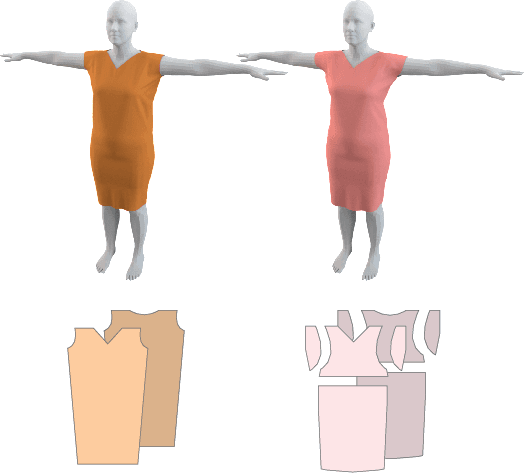

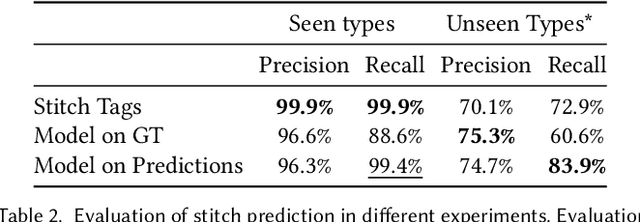

The fields of social VR, performance capture, and virtual try-on often need to faithfully reproduce real garments in the virtual world. One critical task is disentangling the intrinsic garment shape from deformations due to fabric properties, physical forces, and contact with the body. We propose to use a garment sewing pattern, a realistic and compact garment descriptor, to facilitate estimation of the intrinsic garment shape. Another major challenge is the high diversity of shapes and designs in the domain. The most common approach to deep learning on 3D garments is to build specialized models for individual garments or garment types. We argue that a unified model for various garment designs has the benefit of generalizing to novel garment types, and hence covers a larger design domain than individual models would. We introduce NeuralTailor, a novel architecture based on point-level attention for set regression with variable cardinality, and apply it to the task of reconstructing 2D garment sewing patterns from 3D point cloud garment models. Our experiments show that NeuralTailor successfully reconstructs sewing patterns and generalizes to garment types with pattern topologies unseen during training.
