Neurally Plausible Model of Robot Reaching Inspired by Infant Motor Babbling


Dec 31, 2017

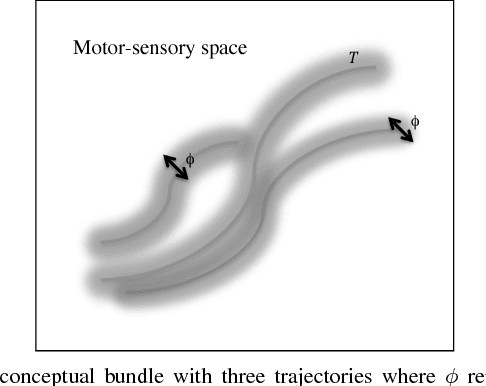

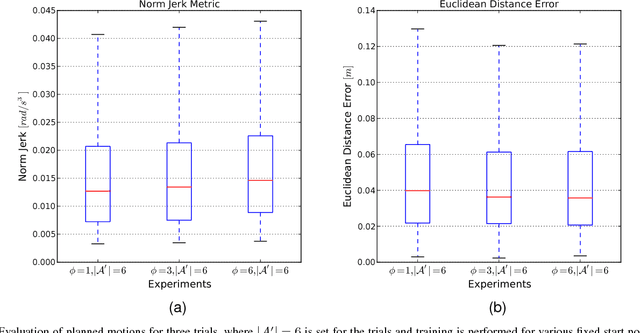

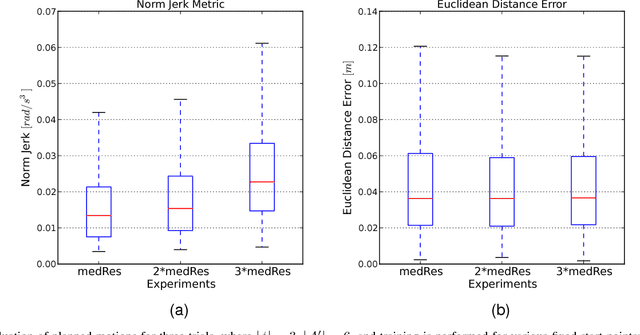

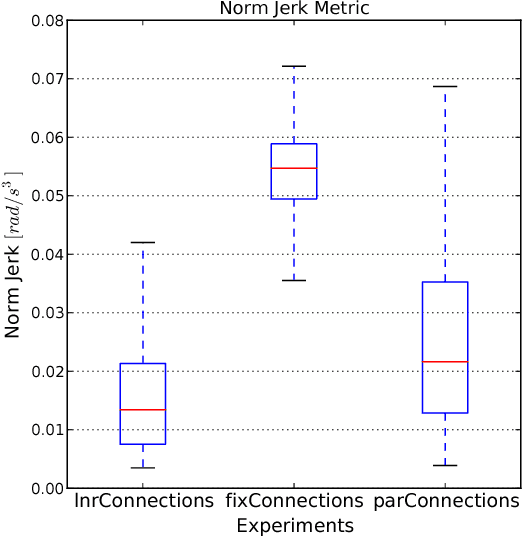

In this paper we present a neurally plausible model of robot reaching inspired by human infant reaching. The model is grounded in embodied artificial intelligence, which emphasizes the importance of the sensory-motor interaction between an agent and the world. It covers both learning sensory-motor correlations through motor babbling and planning arm motions using spreading activation. The model is organized into three layers of neural maps with parallel structures that represent the same sensory-motor space. The motor babbling period shapes the structure of the three neural maps as well as the connections within and between them. We describe an implementation of this model and investigate it using a simple reaching task on a humanoid robot. The robot successfully learned to plan the reaching motions in a test set with high accuracy and smoothness.
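To make the two phases concrete (babbling to learn sensory-motor correlations, then planning by spreading activation), here is a minimal sketch under loudly stated assumptions. It is not the authors' implementation. It assumes a hypothetical planar two-joint arm with unit-length links, collapses the three parallel neural maps into a single graph whose nodes each pair a babbled joint configuration with the hand position it produced, links nodes that are close in joint space, and models spreading activation as activation that starts at 1.0 on the node nearest the target and decays by a hypothetical factor `decay` per hop. The plan is then read out by climbing the activation gradient from the node nearest the current posture. All function names and parameters (`motor_babble`, `build_links`, `radius`, `decay`, and so on) are illustrative.

```python
import math
import random
from collections import deque

L1, L2 = 1.0, 1.0  # hypothetical link lengths of a planar two-joint arm


def forward_kinematics(q):
    """Hand (x, y) position for joint angles q = (shoulder, elbow)."""
    s, e = q
    return (L1 * math.cos(s) + L2 * math.cos(s + e),
            L1 * math.sin(s) + L2 * math.sin(s + e))


def motor_babble(n_samples, seed=0):
    """Babbling phase: random joint configurations paired with the hand positions they produce."""
    rng = random.Random(seed)
    nodes = []
    for _ in range(n_samples):
        q = (rng.uniform(0.0, math.pi), rng.uniform(0.0, math.pi))
        nodes.append((q, forward_kinematics(q)))
    return nodes


def build_links(nodes, radius):
    """Connect nodes whose joint configurations lie within `radius` of each other."""
    links = {i: [] for i in range(len(nodes))}
    for i in range(len(nodes)):
        for j in range(i + 1, len(nodes)):
            if math.dist(nodes[i][0], nodes[j][0]) < radius:
                links[i].append(j)
                links[j].append(i)
    return links


def spread_activation(links, goal, decay=0.9):
    """Breadth-first spread: 1.0 at the goal, multiplied by `decay` (0 < decay < 1) per hop."""
    activation = {goal: 1.0}
    queue = deque([goal])
    while queue:
        i = queue.popleft()
        for j in links[i]:
            if j not in activation:
                activation[j] = activation[i] * decay
                queue.append(j)
    return activation


def nearest(nodes, point, index):
    """Index of the node whose joints (index=0) or hand position (index=1) is closest to point."""
    return min(range(len(nodes)), key=lambda i: math.dist(nodes[i][index], point))


def plan_reach(nodes, links, start_q, target_xy, decay=0.9):
    """Return a list of joint configurations leading toward target_xy, or None if unreachable."""
    goal = nearest(nodes, target_xy, 1)    # node whose hand position best matches the target
    current = nearest(nodes, start_q, 0)   # node whose joints best match the current posture
    activation = spread_activation(links, goal, decay)
    if current not in activation:
        return None  # no learned connection path to the target
    path = [current]
    while current != goal:
        # Step to the most active neighbor; each step moves one hop closer to the goal.
        current = max(links[current], key=lambda j: activation[j])
        path.append(current)
    return [nodes[i][0] for i in path]


if __name__ == "__main__":
    nodes = motor_babble(600, seed=0)
    links = build_links(nodes, radius=0.4)
    path = plan_reach(nodes, links, start_q=(0.5, 0.5), target_xy=(0.0, 1.5))
    if path is not None:
        print(len(path), "waypoints; final hand position:", forward_kinematics(path[-1]))
```

Because links only join nearby joint configurations, consecutive waypoints differ by less than `radius`, which is one simple way such a scheme can yield smooth motions; accuracy is bounded by how densely babbling covered the workspace near the target.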
