Neural Transfer Learning for Cry-based Diagnosis of Perinatal Asphyxia

Jul 02, 2019

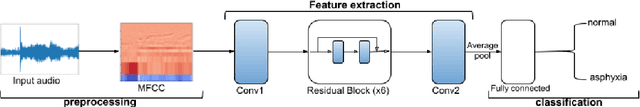

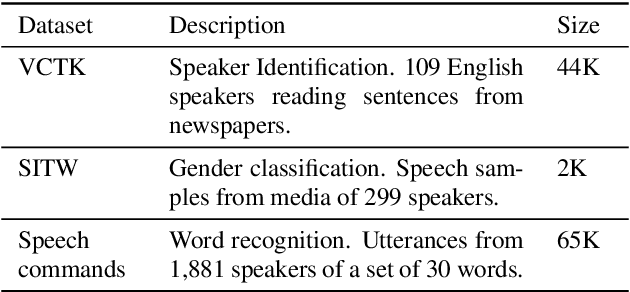

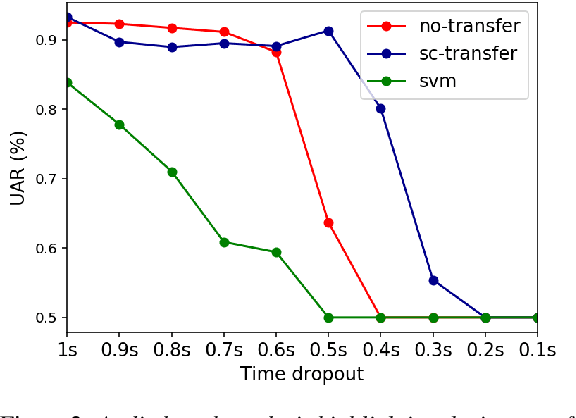

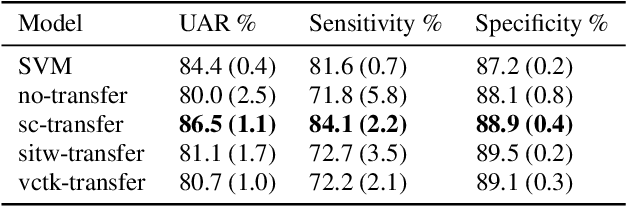

Despite continuing medical advances, the global rate of newborn morbidity and mortality remains high, with over 6 million casualties every year. Predicting pathologies that affect newborns from their cry is thus of significant clinical interest, as it would facilitate the development of accessible, low-cost diagnostic tools. However, the scarcity of clinically annotated datasets of infant cries limits progress on this task. This study explores a neural transfer learning approach to developing accurate and robust models for identifying infants who have suffered from perinatal asphyxia. In particular, we explore the hypothesis that representations learned from adult speech could inform and improve the performance of models developed on infant speech. Our experiments show that models based on such representation transfer are resilient to different types and degrees of noise, as well as to signal loss in the time and frequency domains.
