Neural Population Geometry and Optimal Coding of Tasks with Shared Latent Structure

Feb 26, 2024

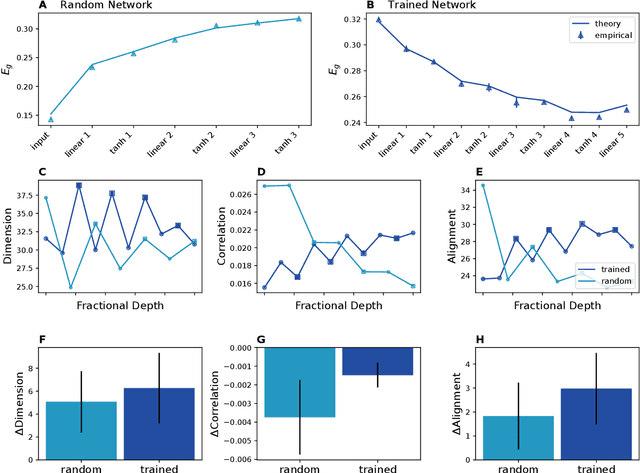

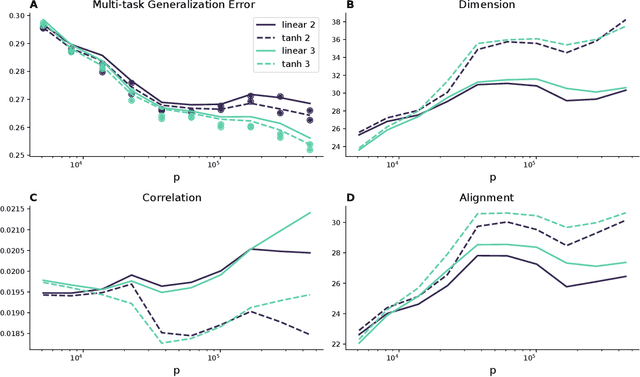

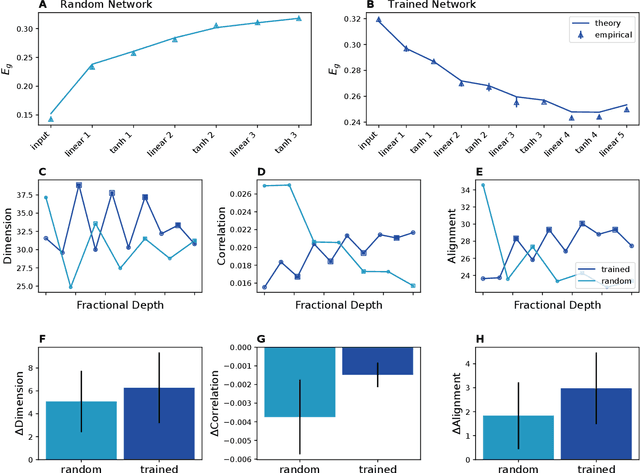

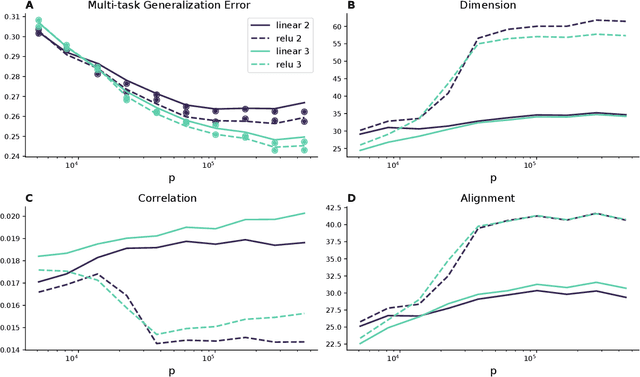

Humans and animals can recognize latent structures in their environment and apply this information to navigate the world efficiently. Several recent works argue that the brain supports these abilities by forming neural representations that encode such latent structures in flexible, generalizable ways. However, it remains unclear which aspects of neural population activity contribute to these computational capabilities. Here, we develop an analytical theory linking the mesoscopic statistics of a neural population's activity to generalization performance on a multi-task learning problem. To do this, we rely on a generative model in which different tasks depend on a common, unobserved latent structure and predictions are formed from a linear readout of a neural population's activity. We show that three geometric measures of the population activity determine generalization performance in these settings. Using this theory, we find that experimentally observed factorized (or disentangled) representations naturally emerge as an optimal solution to the multi-task learning problem. We further show that when data are scarce, optimal codes compress less informative latent variables, and when data are abundant, optimal codes expand this information in the state space. We validate predictions from our theory using biological and artificial neural network data. Our results therefore tie neural population geometry to the multi-task learning problem and make normative predictions about the structure of population activity in these settings.
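The setting described above (tasks that share an unobserved latent structure, read out linearly from population activity) can be illustrated with a small simulation. This is a minimal sketch under assumptions that are not taken from the paper: Gaussian latents, a random linear encoder with additive noise, binary tasks defined by random directions in latent space, and a least-squares readout. The function `simulate_generalization` and all of its parameters are hypothetical names chosen for illustration.

```python
import numpy as np


def simulate_generalization(n_latents=3, n_neurons=50, n_train=20,
                            n_test=2000, n_tasks=100, noise_std=0.1, seed=0):
    """Average test error of a linear readout across tasks sharing latents.

    Illustrative assumptions: Gaussian latents, a random linear encoder,
    and tasks given by the sign of a random projection of the latents.
    """
    rng = np.random.default_rng(seed)
    # Assumed encoding: each neuron is a random linear mixture of the latents.
    encoder = rng.standard_normal((n_neurons, n_latents)) / np.sqrt(n_latents)

    def population_response(latents):
        # Noisy population activity, shape (n_samples, n_neurons).
        noise = noise_std * rng.standard_normal((latents.shape[0], n_neurons))
        return latents @ encoder.T + noise

    errors = []
    for _ in range(n_tasks):
        # Every task depends on the same latent space via its own direction.
        task_vector = rng.standard_normal(n_latents)
        z_train = rng.standard_normal((n_train, n_latents))
        z_test = rng.standard_normal((n_test, n_latents))
        y_train = np.sign(z_train @ task_vector)
        y_test = np.sign(z_test @ task_vector)

        # Fit a linear readout on population activity (least squares;
        # lstsq returns the minimum-norm solution when underdetermined).
        x_train = population_response(z_train)
        readout, *_ = np.linalg.lstsq(x_train, y_train, rcond=None)

        predictions = np.sign(population_response(z_test) @ readout)
        errors.append(np.mean(predictions != y_test))
    return float(np.mean(errors))


if __name__ == "__main__":
    for p in (5, 20, 200):
        print(p, simulate_generalization(n_train=p))
```

Varying `n_train` gives a rough feel for how generalization error depends on the amount of data for a fixed code. Changing how `encoder` scales individual latents would be one way to probe compression versus expansion of particular latent variables, although this toy model does not reproduce the paper's analytical theory.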
