Neural Physicist: Learning Physical Dynamics from Image Sequences

Jun 09, 2020

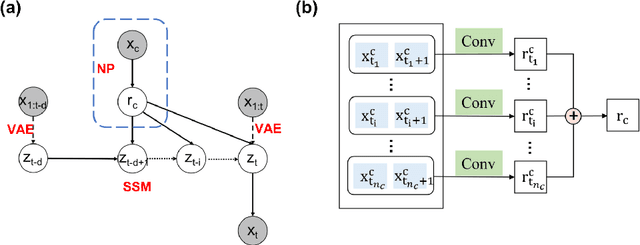

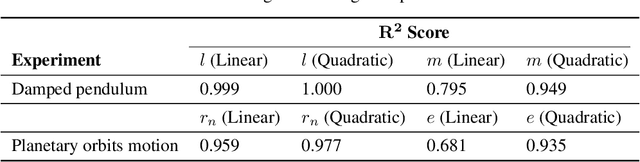

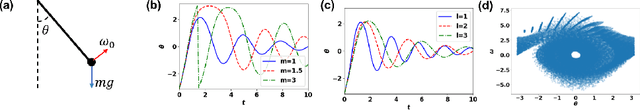

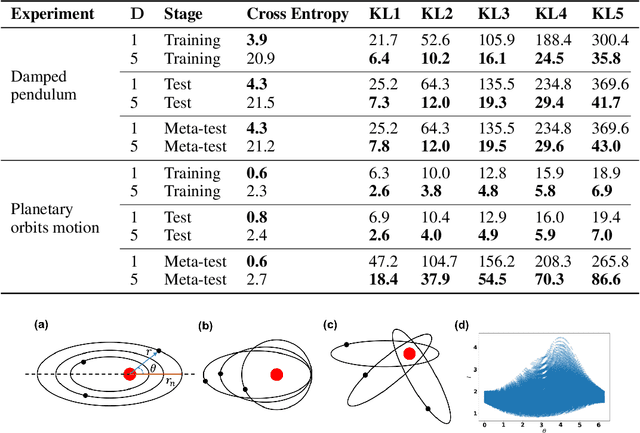

We present a novel architecture named Neural Physicist (NeurPhy) that learns physical dynamics directly from image sequences using deep neural networks. For any physical system, given the global system parameters, the time evolution of states is governed by the underlying physical laws. Learning meaningful system representations end-to-end and estimating state transition dynamics accurately enough to support long-term prediction have been long-standing challenges. In this paper, by leveraging recent progress in representation learning and state space models (SSMs), we propose NeurPhy, which uses a variational auto-encoder (VAE) to extract the underlying Markovian dynamic state at each time step, a neural process (NP) to extract the global system parameters, and a non-linear, non-recurrent stochastic state space model to learn the physical dynamic transition. We apply NeurPhy to two physical experimental environments, a damped pendulum and planetary orbital motion, and achieve promising results. Our model not only extracts physically meaningful state representations, but also learns state transition dynamics that enable long-term prediction for unseen image sequences. Furthermore, from the manifold dimension of the latent state space, we can easily identify the degrees of freedom (DoF) of the underlying physical system.
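To make the data flow in the abstract concrete, here is a minimal NumPy sketch of two of the three components it names: a global code aggregated from context states in the style of a neural process, and a non-recurrent stochastic transition that predicts the next latent state from the current state and that code alone. It is an illustration under stated assumptions, not the paper's implementation. The function names (`mlp`, `init_mlp`, `global_code`, `rollout`), the layer sizes, the Gaussian transition, and the use of state pairs as context are all hypothetical, the weights are random and untrained, and random vectors stand in for the VAE-encoded image frames.

```python
import numpy as np

def init_mlp(rng, n_in, n_hidden, n_out):
    """Random, untrained weights for a two-layer MLP (illustration only)."""
    return (rng.normal(0, 0.3, (n_in, n_hidden)), np.zeros(n_hidden),
            rng.normal(0, 0.3, (n_hidden, n_out)), np.zeros(n_out))

def mlp(params, x):
    """Two-layer tanh MLP; works on a single vector or a batch of rows."""
    W1, b1, W2, b2 = params
    return np.tanh(x @ W1 + b1) @ W2 + b2

def global_code(ctx_params, context_states):
    """NP-style aggregation (assumed form): encode each pair of consecutive
    states, then average the encodings into one global code."""
    pairs = np.concatenate([context_states[:-1], context_states[1:]], axis=1)
    return mlp(ctx_params, pairs).mean(axis=0)

def rollout(trans_params, z0, c, steps, rng):
    """Non-recurrent stochastic transition (assumed Gaussian):
    z_{t+1} ~ N(mu(z_t, c), sigma(z_t, c)^2). No hidden state is carried
    between steps; each step sees only the current state and the code c."""
    z, traj = z0, [z0]
    for _ in range(steps):
        out = mlp(trans_params, np.concatenate([z, c]))
        mu, log_sigma = np.split(out, 2)
        z = mu + np.exp(log_sigma) * rng.standard_normal(mu.shape)
        traj.append(z)
    return np.stack(traj)

rng = np.random.default_rng(0)
STATE_DIM, CODE_DIM = 2, 3  # hypothetical sizes

ctx_params = init_mlp(rng, 2 * STATE_DIM, 16, CODE_DIM)
trans_params = init_mlp(rng, STATE_DIM + CODE_DIM, 16, 2 * STATE_DIM)

# Stand-in for latent states that a VAE encoder would extract from frames.
context_states = rng.normal(size=(10, STATE_DIM))

c = global_code(ctx_params, context_states)
traj = rollout(trans_params, context_states[-1], c, steps=20, rng=rng)
print(traj.shape)  # (21, 2)
```

Because the transition depends only on the current state and the global code, a long-horizon prediction is just repeated application of the same function. In a trained model of this kind, the rolled-out latent states would then be decoded back to images.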
