Neural Networks-based Equalizers for Coherent Optical Transmission: Caveats and Pitfalls

Sep 30, 2021

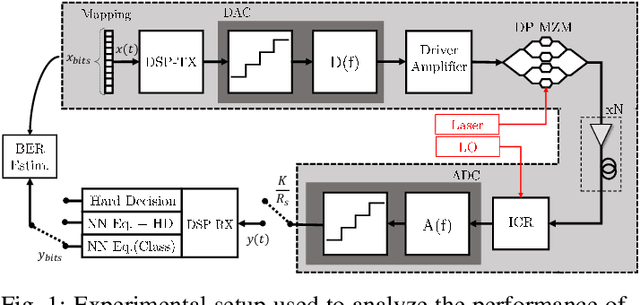

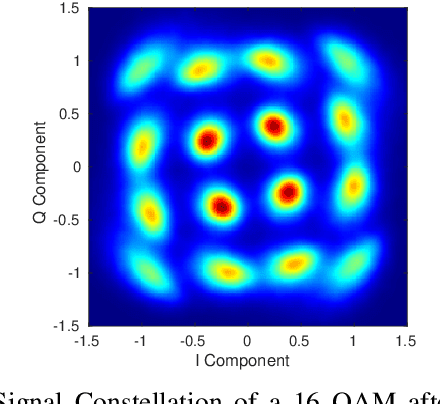

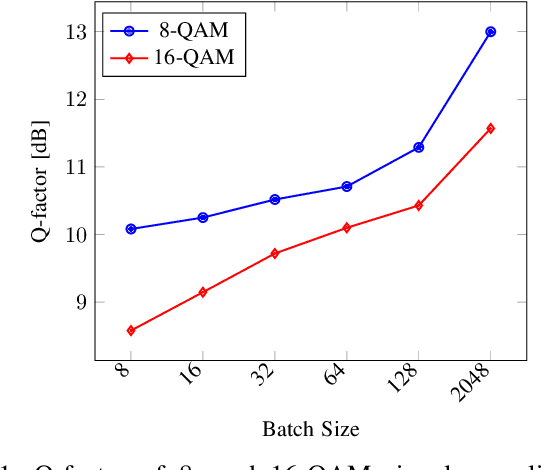

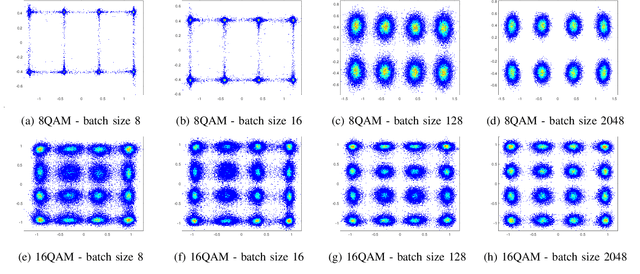

This paper presents a detailed, multi-faceted analysis of the key challenges and common design caveats in developing efficient neural network (NN)-based nonlinear channel equalizers for coherent optical communication systems. Our study aims to guide researchers and engineers working in this field. We start by clarifying the metrics used to evaluate the equalizers' performance, relating them to the loss functions employed in training the NN equalizers. The relationship between the accuracy of the channel propagation model and the performance of the equalizers is addressed and quantified. Next, we assess the impact of the order of the pseudo-random bit sequence (PRBS) used to generate the data, both numerical and experimental, as well as of the digital-to-analog converter (DAC) memory limitations, on the operation of the NN equalizers during both the training and validation phases. Finally, we examine the critical issues of overfitting limitations, the difference between using classification and regression, and batch-size-related peculiarities. We conclude by providing analytical expressions for evaluating the equalizers' complexity in digital signal processing (DSP) terms.
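As background for the point about the order of the pseudo-random bit sequence (PRBS): a PRBS of order n produced by a maximal-length linear feedback shift register repeats after 2^n - 1 bits, so a low-order sequence gives a dataset with a short repetition period. The following is a minimal Python sketch of that property only. The tap positions (7, 6) and (9, 5) are the commonly used PRBS7 and PRBS9 choices and are an assumption here, since the abstract does not state which generators were used; `prbs_bits` and `prbs_period` are illustrative names, not taken from the paper.

```python
def _step(state, order, taps):
    """Advance a Fibonacci LFSR by one bit; return (new_state, feedback_bit)."""
    feedback = 0
    for t in taps:  # taps are 1-indexed bit positions of the register
        feedback ^= (state >> (t - 1)) & 1
    mask = (1 << order) - 1
    return ((state << 1) | feedback) & mask, feedback


def prbs_bits(order, taps, length, seed=1):
    """Return `length` bits of the LFSR feedback sequence (assumed tap set)."""
    state = seed & ((1 << order) - 1)
    if state == 0:
        raise ValueError("seed must be non-zero, otherwise the LFSR is stuck")
    bits = []
    for _ in range(length):
        state, bit = _step(state, order, taps)
        bits.append(bit)
    return bits


def prbs_period(order, taps, seed=1):
    """Count the steps until the register returns to its initial state."""
    start = seed & ((1 << order) - 1)
    if start == 0:
        raise ValueError("seed must be non-zero, otherwise the LFSR is stuck")
    state, steps = start, 0
    while True:
        state, _ = _step(state, order, taps)
        steps += 1
        if state == start:
            return steps


if __name__ == "__main__":
    # Assumed tap sets for illustration; not specified in the abstract.
    for order, taps in [(7, (7, 6)), (9, (9, 5))]:
        print(f"order {order}: period {prbs_period(order, taps)}")
```

Comparing `prbs_bits(7, (7, 6), 254)[:127]` with the following 127 bits shows the sequence repeating exactly, which is the kind of periodicity that the choice of PRBS order controls.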
