Neural Network Pruning with Residual-Connections and Limited-Data


Dec 02, 2019

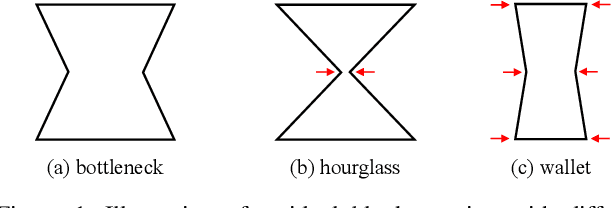

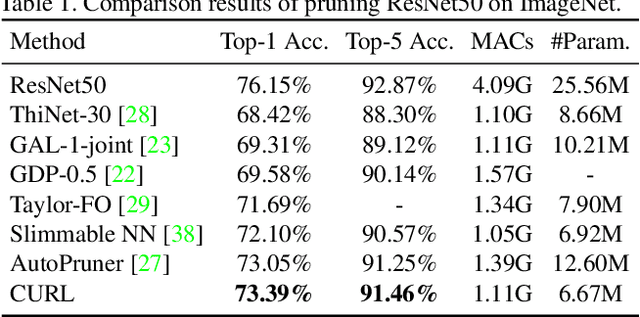

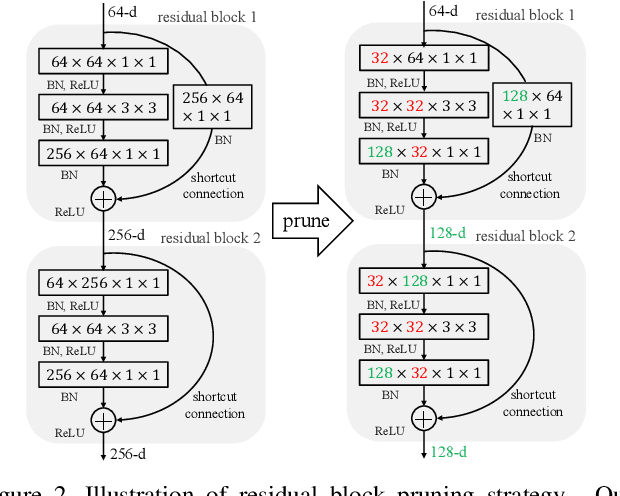

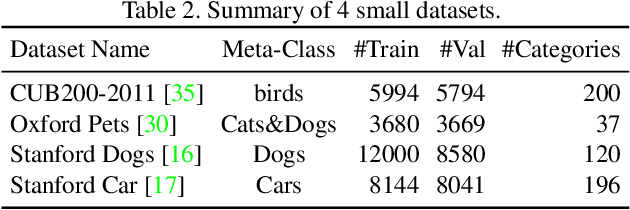

Filter-level pruning is an effective way to accelerate inference in deep CNN models. Although numerous pruning algorithms have been proposed, two issues remain open. The first is how to prune residual connections. Most previous filter-level pruning algorithms prune only the channels inside residual blocks, leaving the number of output channels unchanged. We show that pruning channels both inside and outside the residual connections is crucial for better performance. The second issue is pruning with limited data. We observe an interesting phenomenon: pruning directly on a small dataset is usually worse than fine-tuning a small model that was pruned or trained from scratch on the large dataset. In this paper, we propose a novel method, Compression Using Residual-connections and Limited-data (CURL), to tackle these two challenges. Experiments on a large-scale dataset demonstrate the effectiveness of CURL: it significantly outperforms previous state-of-the-art methods on ImageNet. More importantly, when pruning on small datasets, CURL achieves comparable or much better performance than fine-tuning a pretrained small model.
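To make the distinction between pruning "inside" and "outside" a residual connection concrete, here is a minimal sketch in plain Python. It is not the CURL algorithm: the toy block `y = x + W2 · relu(W1 · x)`, the helper names (`prune_inside`, `prune_outside`, `residual_block`), and the hand-picked channel indices are all assumptions made for illustration, and no importance criterion from the paper is implemented. The point is only the bookkeeping: dropping a hidden channel touches one block, while dropping a channel on the shortcut path changes the block's input and output width together.

```python
def shape(w):
    """Return (rows, cols) of a weight matrix stored as a list of rows."""
    return (len(w), len(w[0]))

def prune_inside(w1, w2, keep_mid):
    """Drop hidden channels: rows of w1 and the matching columns of w2."""
    new_w1 = [w1[i] for i in keep_mid]
    new_w2 = [[row[i] for i in keep_mid] for row in w2]
    return new_w1, new_w2

def prune_outside(w1, w2, keep_io):
    """Drop channels on the shortcut path: columns of w1 and rows of w2.

    The same indices must be removed from every layer that reads from or
    writes to the shortcut, because the addition x + f(x) needs equal widths.
    """
    new_w1 = [[row[j] for j in keep_io] for row in w1]
    new_w2 = [w2[j] for j in keep_io]
    return new_w1, new_w2

def residual_block(x, w1, w2):
    """Compute y = x + w2 @ relu(w1 @ x) for a single input vector x."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in w1]
    branch = [sum(w * h for w, h in zip(row, hidden)) for row in w2]
    return [xi + bi for xi, bi in zip(x, branch)]

# Toy block: 4 channels on the shortcut path, 3 hidden channels.
w1 = [[0.1 * (r + c) for c in range(4)] for r in range(3)]  # 3 x 4
w2 = [[0.1 * (r - c) for c in range(3)] for r in range(4)]  # 4 x 3

# Inside-only pruning: the block still consumes and emits 4 channels.
# The kept indices are arbitrary here, not chosen by any importance score.
in_w1, in_w2 = prune_inside(w1, w2, keep_mid=[0, 2])

# Additionally prune the shortcut path: input and output widths shrink to 3.
keep_io = [0, 1, 3]
both_w1, both_w2 = prune_outside(in_w1, in_w2, keep_io)

x = [1.0, 2.0, 3.0, 4.0]
y_inside = residual_block(x, in_w1, in_w2)
y_both = residual_block([x[j] for j in keep_io], both_w1, both_w2)
```

With inside-only pruning the block's output width stays at 4, which matches the abstract's remark that earlier methods leave the number of output channels unchanged. Pruning the shortcut path as well narrows the output to 3, and in a real network that same index set would have to be applied to every block that shares the shortcut.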
