Neural Modeling for Named Entities and Morphology (NEMO^2)

Jul 30, 2020

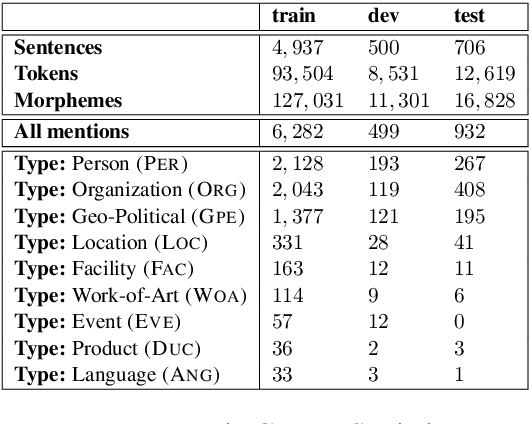

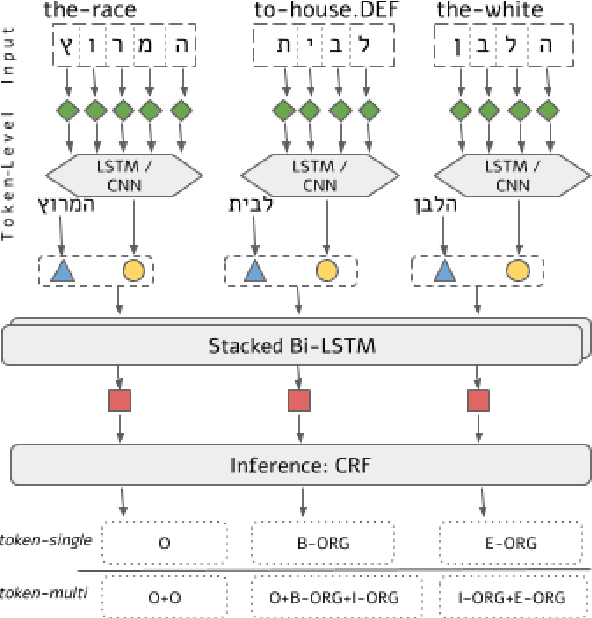

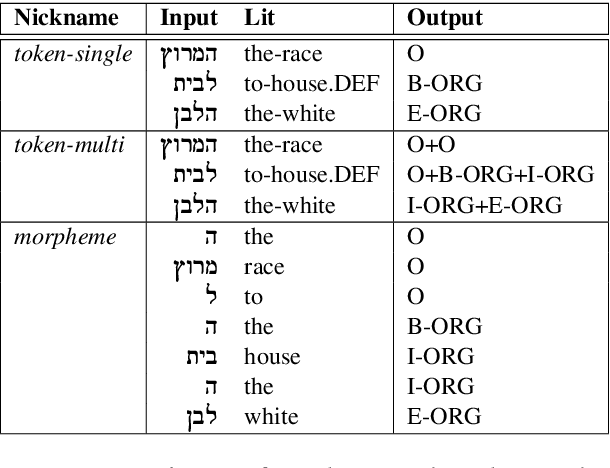

Named Entity Recognition (NER) is a fundamental NLP task, commonly formulated as classification over a sequence of tokens. Morphologically-Rich Languages (MRLs) pose a challenge to this basic formulation, as the boundaries of Named Entities do not coincide with token boundaries; rather, they respect morphological boundaries. To address NER in MRLs, we therefore need to answer two fundamental modeling questions: (i) What should be the basic units to be identified and labeled: token-based or morpheme-based units? and (ii) How can morphological units be encoded and accurately obtained in realistic (non-gold) scenarios? We empirically investigate these questions on a novel parallel NER benchmark that we deliver, with parallel token-level and morpheme-level NER annotations for Modern Hebrew, a morphologically complex language. Our results show that explicitly modeling morphological boundaries consistently leads to improved NER performance, and that a novel hybrid architecture that we propose, in which NER precedes and prunes the morphological decomposition (MD) space, greatly outperforms the standard pipeline approach on both Hebrew NER and Hebrew MD in realistic scenarios.
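
To make the token-versus-morpheme mismatch concrete, the following is a minimal sketch and not the paper's implementation. It assumes a made-up, transliterated two-token phrase in which the first token bundles a preposition with the start of an entity. The morpheme segmentation, the generic `ENT` label, and the helper `project_to_tokens` are all hypothetical and serve illustration only.

```python
from typing import List, Tuple

# Hypothetical, transliterated example: each inner list is one space-delimited
# token, split into its morphemes. The segmentation is illustrative only.
tokens: List[List[str]] = [
    ["l", "ha", "bayit"],  # token 0: preposition + article + noun
    ["ha", "lavan"],       # token 1: article + adjective
]

# Morpheme-level BIO labels: the entity starts inside token 0,
# after the preposition "l".
morpheme_labels: List[List[str]] = [
    ["O", "B-ENT", "I-ENT"],
    ["I-ENT", "I-ENT"],
]


def project_to_tokens(morph_labels: List[List[str]]) -> Tuple[List[str], List[int]]:
    """Collapse morpheme-level labels to one label per token.

    Returns the token-level labels and the indices of tokens in which an
    entity boundary falls inside the token (mixed "O" and entity morphemes).
    """
    token_labels: List[str] = []
    misaligned: List[int] = []
    for index, labels in enumerate(morph_labels):
        entity_labels = [label for label in labels if label != "O"]
        if not entity_labels:
            token_labels.append("O")
            continue
        # The token inherits the first entity label among its morphemes.
        token_labels.append(entity_labels[0])
        # Some morphemes lie outside the entity: the boundary is token-internal.
        if len(entity_labels) < len(labels):
            misaligned.append(index)
    return token_labels, misaligned


token_labels, misaligned = project_to_tokens(morpheme_labels)
print(token_labels)
print(misaligned)
```

In this toy case the token-level view marks the whole first token as part of the entity, while the morpheme-level view excludes the preposition. That difference is the kind of boundary information a token-based formulation cannot express.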