Neural Mesh: Introducing a Notion of Space and Conservation of Energy to Neural Networks

Paper and Code

Jul 29, 2018

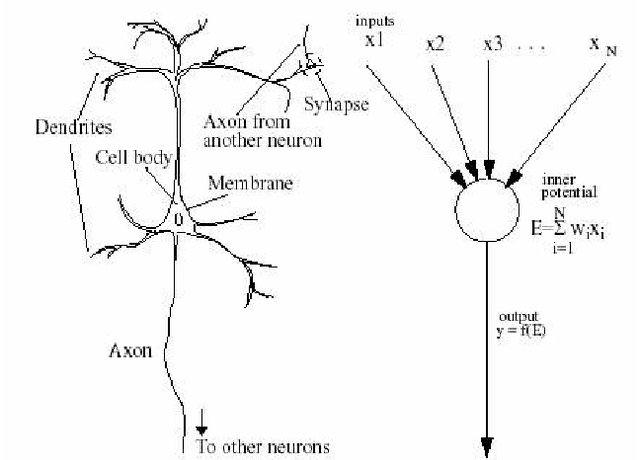

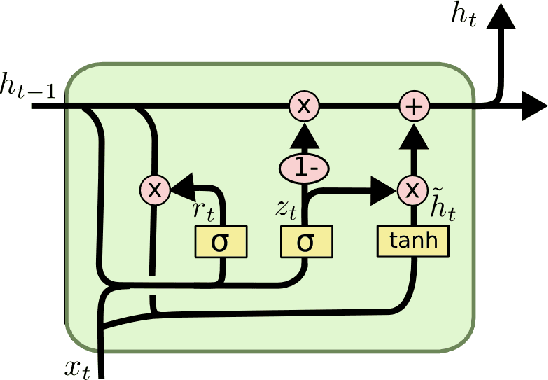

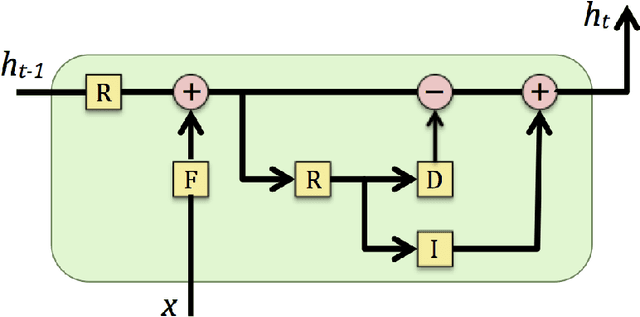

Neural networks are based on a simplified model of the brain. In this project, we wanted to relax the simplifying assumptions of a traditional neural network by building a model that more closely emulates the low-level interactions of neurons. As in an RNN, our model has a state that persists between time steps, so that the energies of neurons persist. Unlike an RNN, however, our state is a two-dimensional matrix rather than a one-dimensional vector, which introduces a concept of distance between neurons within the state. In our model, neurons can only fire to adjacent neurons, as in the brain. Also as in the brain, a neuron may fire in a time step only if it contains enough energy, or excitement. We further enforce a notion of conservation of energy, so that a neuron cannot excite its neighbors by more than the excitement it already contained at that time step. Taken together, these features allow signals, in the form of activations, to flow around our network over time, so that our neural mesh more closely models signals traveling through the brain. Our main goal is to design an architecture that more closely emulates the brain, in the hope of having a correct internal representation of information by the time we know how to properly train a general intelligence. We nevertheless benchmarked our neural mesh on a specific task and found that, by increasing the runtime of the mesh, we were able to increase its accuracy without increasing the number of parameters.
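The three rules described above (a two-dimensional state, threshold-gated firing to adjacent neurons, and conservation of energy) can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: the function name `mesh_step`, the `threshold` parameter, the equal four-way split of a firing neuron's energy, and the wrap-around (toroidal) edges are all assumptions chosen to keep the example short, and the sketch has no learned parameters.

```python
import numpy as np


def mesh_step(energy, threshold=1.0):
    """Advance a toy 2D mesh by one time step (hypothetical sketch).

    energy: 2D float array holding each neuron's current energy.
    A neuron fires only if its energy is at least `threshold`. A firing
    neuron gives away all of its energy, split equally among its four
    adjacent neurons, so it never emits more than it contained.
    """
    fires = energy >= threshold
    # Energy leaving each neuron: everything it holds if it fires, else nothing.
    outgoing = np.where(fires, energy, 0.0)
    share = outgoing / 4.0
    # Assumption: edges wrap around, so no energy leaks off the grid.
    incoming = (
        np.roll(share, 1, axis=0)
        + np.roll(share, -1, axis=0)
        + np.roll(share, 1, axis=1)
        + np.roll(share, -1, axis=1)
    )
    return energy - outgoing + incoming


# Usage: excite one neuron and let the signal spread for a few steps.
state = np.zeros((5, 5))
state[2, 2] = 4.0
for _ in range(3):
    state = mesh_step(state)
    print(state.sum())  # total energy is unchanged by each step
```

Under these assumptions the total energy in the mesh stays constant from step to step, while sub-threshold neurons simply hold their energy until neighbors push them over the threshold. Running the loop for more steps lets a signal travel farther across the grid without adding any parameters.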
