Neural Mesh-Based Graphics


Aug 23, 2022

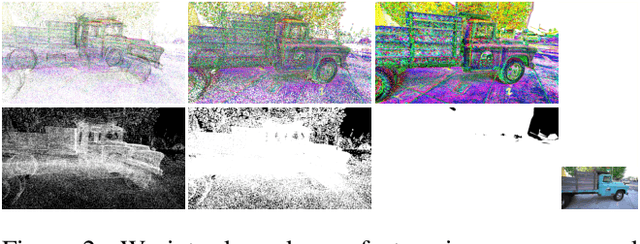

We revisit NPBG, the popular approach to novel view synthesis that introduced the ubiquitous point feature neural rendering paradigm. We focus in particular on data-efficient learning with fast view synthesis. We achieve this through view-dependent, mesh-based rasterization of denser point descriptors, together with a foreground/background scene rendering split and an improved loss. By training solely on a single scene, we outperform NPBG, which has been trained on ScanNet and then fine-tuned on the scene. We also perform competitively with the state-of-the-art method SVS, which has been trained on the full dataset (DTU and Tanks and Temples) and then fine-tuned on the scene, despite its deeper neural renderer.

* ECCV Workshop 2022 CV4Metaverse. The source code and trained models can be obtained at https://github.com/Shubhendu-Jena/Neural-Mesh-Based-Graphics
