Neural Forest Learning


Nov 18, 2019

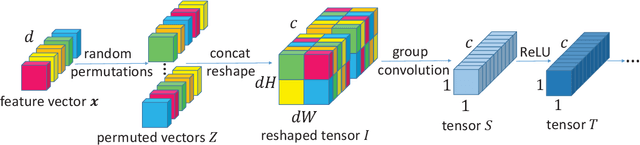

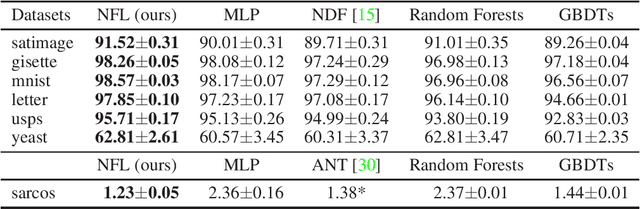

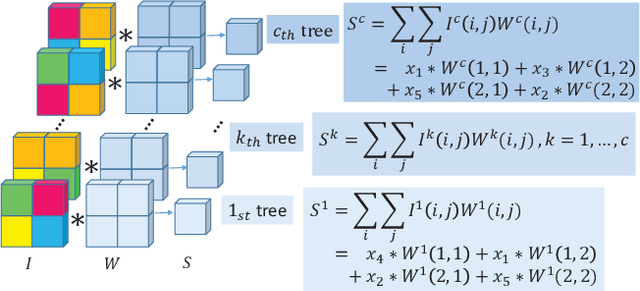

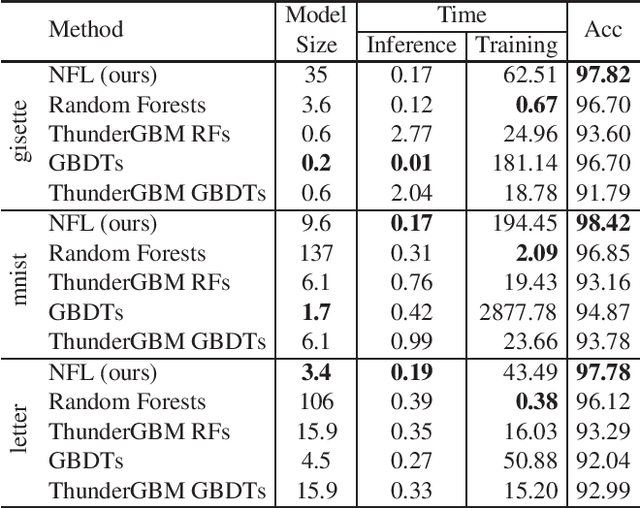

We propose Neural Forest Learning (NFL), a novel deep-learning-based, random-forest-like method. In contrast to previous forest methods, NFL benefits from end-to-end, data-driven representation learning and from the pervasive support of deep learning software and hardware platforms, and hence achieves faster inference and higher accuracy than previous forest methods. Furthermore, NFL learns non-linear feature representations in CNNs more efficiently than previous higher-order pooling methods, producing good results with a negligible increase in parameters, floating point operations (FLOPs) and real running time. We achieve superior performance on 7 machine learning datasets when compared to random forests and GBDTs. On the fine-grained benchmarks CUB-200-2011, FGVC-aircraft and Stanford Cars, we achieve gains of over 5.7%, 6.9% and 7.8%, respectively, for VGG-16. Moreover, NFL converges in far fewer epochs, further accelerating network training. On the large-scale ImageNet ILSVRC-12 validation set, integrating NFL into ResNet-18 achieves top-1/top-5 errors of 28.32%/9.77%, which outperforms ResNet-18 by 1.92%/1.15% with negligible extra cost, and the improvement is consistent across various architectures.
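The ImageNet figures above imply the ResNet-18 baseline errors that NFL is compared against. The short sketch below recovers them, assuming the reported 1.92%/1.15% improvements are absolute percentage points (the abstract does not say so explicitly). The function name `implied_baseline` is hypothetical and used only for illustration.

```python
def implied_baseline(nfl_error: float, gain: float) -> float:
    """Baseline error implied by an NFL error and an absolute gain (both in %).

    Assumption: the gain is an absolute difference in percentage points,
    not a relative reduction.
    """
    return round(nfl_error + gain, 2)


# Figures quoted in the abstract (ImageNet ILSVRC-12 validation, ResNet-18 + NFL).
nfl_top1, nfl_top5 = 28.32, 9.77
gain_top1, gain_top5 = 1.92, 1.15

baseline_top1 = implied_baseline(nfl_top1, gain_top1)
baseline_top5 = implied_baseline(nfl_top5, gain_top5)

print(f"Implied ResNet-18 top-1 error: {baseline_top1}%")
print(f"Implied ResNet-18 top-5 error: {baseline_top5}%")
```

Under that assumption, the implied baseline is 30.24% top-1 and 10.92% top-5 error for plain ResNet-18.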
