Neural Embedding for Physical Manipulations


Jul 13, 2019

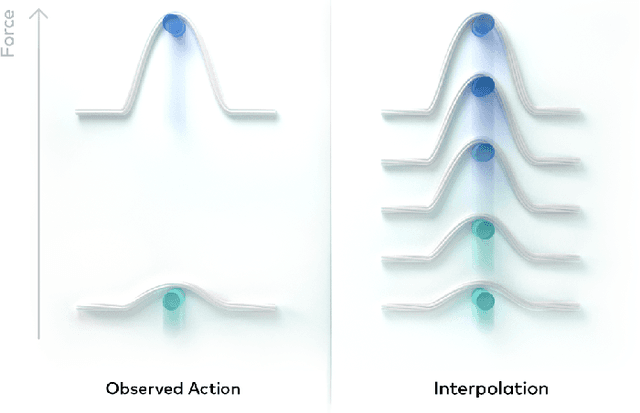

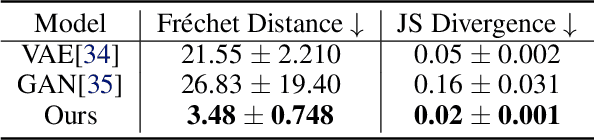

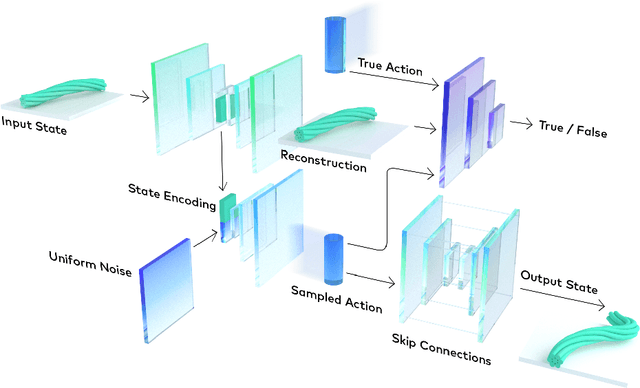

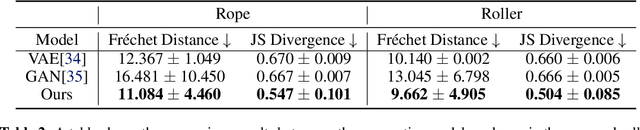

In common real-world robotic operations, action and state spaces can be vast and sometimes unknown, and observations are often relatively sparse. How do we learn the full topology of action and state spaces when given only a few sparse observations? Inspired by the properties of grid cells in mammalian brains, we build a generative model that enforces a normalized pairwise distance constraint between the latent space and the output space to achieve data-efficient discovery of output spaces. This method achieves substantially better results than prior generative models, such as Generative Adversarial Networks (GANs) and Variational Auto-Encoders (VAEs). Those models commonly suffer from mode collapse and thus fail to explore the full topology of the output space. We demonstrate the effectiveness of our model on various datasets, both qualitatively and quantitatively.
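The abstract does not spell out the exact form of the normalized pairwise distance constraint, so the following is only a minimal sketch of one plausible reading. It assumes Euclidean distances, normalization of each distance matrix by its mean, and a squared-error penalty; the function names `pairwise_distances` and `normalized_distance_loss` are hypothetical and do not come from the paper.

```python
import numpy as np


def pairwise_distances(points):
    """Euclidean distance between every pair of rows in an (n, d) array."""
    diffs = points[:, None, :] - points[None, :, :]
    return np.sqrt((diffs ** 2).sum(axis=-1))


def normalized_distance_loss(latents, outputs, eps=1e-12):
    """Penalize mismatch between normalized pairwise distances.

    Assumption (not from the paper): each distance matrix is divided by
    its own mean, so the two spaces are compared up to a global scale.
    """
    d_z = pairwise_distances(latents)
    d_x = pairwise_distances(outputs)
    d_z = d_z / (d_z.mean() + eps)
    d_x = d_x / (d_x.mean() + eps)
    return float(((d_z - d_x) ** 2).mean())


rng = np.random.default_rng(0)
z = rng.normal(size=(8, 2))          # hypothetical latent samples

# Outputs that preserve the latent geometry up to scale incur almost no penalty.
print(normalized_distance_loss(z, 3.0 * z))

# Outputs collapsed onto a single point (a caricature of mode collapse)
# are penalized, because all their pairwise distances are zero.
print(normalized_distance_loss(z, np.zeros((8, 3))))
```

Under these assumptions, a generator whose outputs collapse to a few modes cannot match the spread of distances among latent samples, which is the intuition the abstract gives for why such a constraint encourages coverage of the output space.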
