Neural Architecture Search Using Stable Rank of Convolutional Layers


Sep 19, 2020

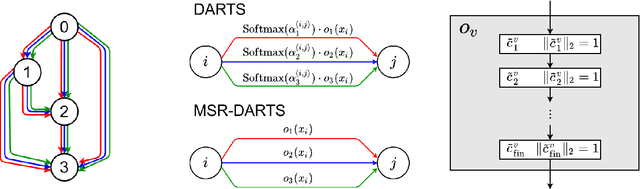

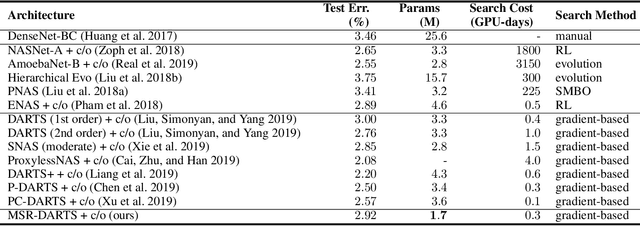

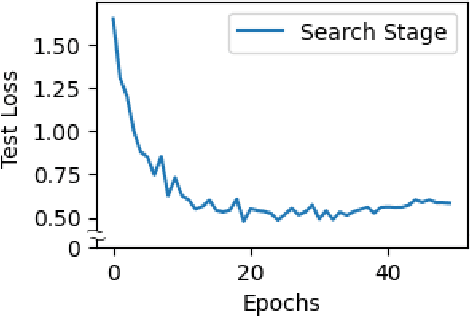

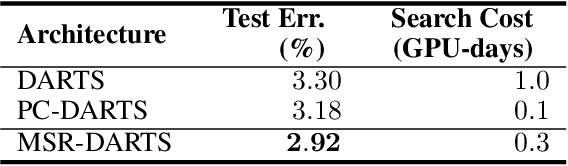

In Neural Architecture Search (NAS), Differentiable ARchiTecture Search (DARTS) has recently attracted much attention due to its high efficiency. It defines an over-parameterized network with mixed edges, each of which represents all operator candidates, and jointly optimizes the weights of the network and its architecture in an alternating way. However, this process prefers a model whose weights converge faster than the others, and the model with the fastest convergence often overfits. Accordingly, the resulting model does not always generalize well. To overcome this problem, we propose Minimum Stable Rank DARTS (MSR-DARTS), which aims to find a model with the best generalization error by replacing the architecture optimization with a selection process based on the minimum stable rank criterion. Specifically, each convolution operator is represented by a matrix, and our method chooses the operator whose stable rank is the smallest. We evaluate MSR-DARTS on the CIFAR-10 and ImageNet datasets. It achieves an error rate of 2.92% with only 1.7M parameters within 0.5 GPU-days on CIFAR-10, and a top-1 error rate of 24.0% on ImageNet. MSR-DARTS directly optimizes an ImageNet model in only 2.6 GPU-days, whereas existing NAS methods often find it impractical to directly optimize a large model such as an ImageNet model and therefore rely on a proxy dataset such as CIFAR-10.
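The selection step described in the abstract can be illustrated with a minimal sketch. It uses the standard definition of stable rank, the squared Frobenius norm of a matrix divided by its squared spectral norm. The abstract does not say how a convolution is turned into a matrix, so the flattening in `kernel_to_matrix` is an assumption made for illustration, as are the function names, the candidate names, and the random kernels that stand in for trained weights. This is not the authors' implementation.

```python
import numpy as np


def stable_rank(matrix):
    """Stable rank: squared Frobenius norm over squared spectral norm."""
    frobenius_sq = np.sum(matrix ** 2)
    spectral = np.linalg.norm(matrix, ord=2)  # largest singular value
    return float(frobenius_sq / spectral ** 2)


def kernel_to_matrix(kernel):
    # Assumption: flatten a (out_ch, in_ch, kh, kw) kernel into an
    # (out_ch, in_ch * kh * kw) matrix. The paper may use another form.
    return kernel.reshape(kernel.shape[0], -1)


def select_min_stable_rank(candidates):
    """Return the candidate name with the smallest stable rank, plus all ranks."""
    ranks = {
        name: stable_rank(kernel_to_matrix(kernel))
        for name, kernel in candidates.items()
    }
    best = min(ranks, key=ranks.get)
    return best, ranks


# Hypothetical candidates on one edge; random values replace trained weights.
rng = np.random.default_rng(0)
candidates = {
    "conv_3x3": rng.standard_normal((16, 16, 3, 3)),
    "conv_5x5": rng.standard_normal((16, 16, 5, 5)),
}
best, ranks = select_min_stable_rank(candidates)
print(best, ranks)
```

The stable rank always lies between 1 and the true rank of the matrix, and it changes smoothly with the weights, which makes it a convenient quantity to compare across candidate operators.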
