Network with Sub-Networks


Aug 02, 2019

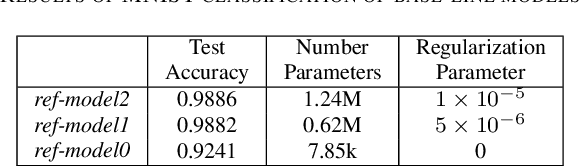

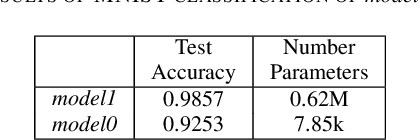

We introduce the network with sub-networks, a neural network whose weight layers can be removed on demand during inference to form smaller sub-networks. This gives the user a choice over the number of weight layers. To learn parameters that work in both the base model and its sub-models, the weights and biases are first copied from the sub-models to the base model. Each model is then forwarded separately. The gradients from both networks are averaged and used to update both networks. In our experiments, the base model achieves test accuracy comparable to that of regularly trained models while retaining the ability to have weight layers removed.
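The training procedure described above (copy, forward separately, average gradients, update both) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and not the authors' implementation: the scalar layers, the `tanh` activation, the squared-error loss, the learning rate, and the hypothetical names `forward`, `gradients`, `train_step`, and `keep` (the indices of the base layers that the sub-model retains).

```python
import math

def forward(layers, x):
    """Run x through scalar layers z = w*a + b; hidden layers use tanh, the last is linear.
    Returns the list of activations, starting with the input."""
    acts = [x]
    for i, (w, b) in enumerate(layers):
        z = w * acts[-1] + b
        acts.append(z if i == len(layers) - 1 else math.tanh(z))
    return acts

def gradients(layers, x, target):
    """Backpropagate the loss 0.5 * (y - target)**2; returns one (dw, db) pair per layer."""
    acts = forward(layers, x)
    delta = acts[-1] - target  # dL/dz at the linear output layer
    grads = [None] * len(layers)
    for i in reversed(range(len(layers))):
        w, _ = layers[i]
        grads[i] = (delta * acts[i], delta)
        if i > 0:
            # Move to the previous layer's pre-activation: through w, then tanh'.
            delta = delta * w * (1.0 - acts[i] ** 2)
    return grads

def train_step(base, sub, keep, x, target, lr=0.05):
    """One update. `keep` lists the base-layer indices that the sub-model retains (assumed)."""
    # 1. Copy weights and biases from the sub-model into the matching base layers.
    for j, i in enumerate(keep):
        base[i] = sub[j]
    # 2. Forward and backward each model separately.
    g_base = gradients(base, x, target)
    g_sub = gradients(sub, x, target)
    # 3. Average the gradients of shared layers and update both models.
    for i in range(len(base)):
        dw, db = g_base[i]
        if i in keep:
            j = keep.index(i)
            dw = 0.5 * (dw + g_sub[j][0])
            db = 0.5 * (db + g_sub[j][1])
        w, b = base[i]
        base[i] = (w - lr * dw, b - lr * db)
        if i in keep:
            sub[keep.index(i)] = base[i]

# Usage: a 3-layer base model whose middle layer can be removed.
base = [(0.5, 0.0), (1.0, 0.0), (0.8, 0.1)]
sub = [(0.4, 0.0), (0.7, 0.0)]
keep = [0, 2]
for _ in range(200):
    train_step(base, sub, keep, x=1.0, target=0.5)
print("base output:", forward(base, 1.0)[-1])
print("sub output: ", forward(sub, 1.0)[-1])
```

Because the shared layers hold the same values in both models after every step, dropping the middle layer at inference time simply means evaluating the layers listed in `keep`. How the actual method pairs base and sub-models across more than one removable layer is not specified in the abstract, so this sketch covers only a single pair.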
