Nearest neighbor density functional estimation based on inverse Laplace transform

Paper and Code

May 22, 2018

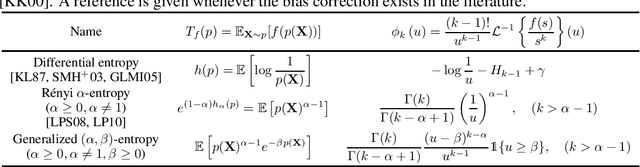

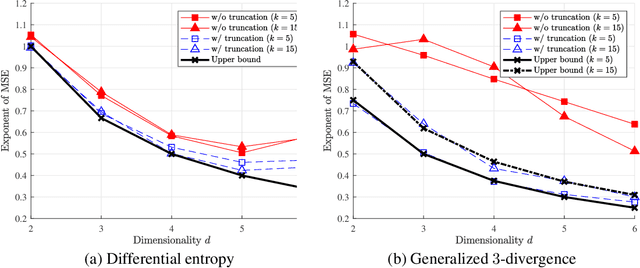

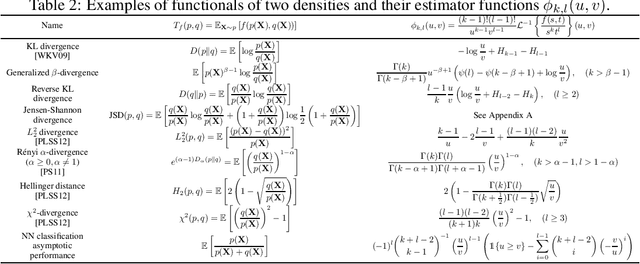

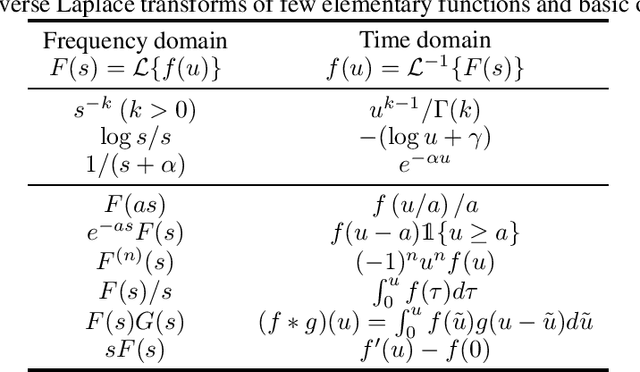

A general approach to $L_2$-consistent estimation of various density functionals using $k$-nearest neighbor distances is proposed, along with an analysis of convergence rates in mean squared error. The construction of the estimator is based on inverse Laplace transforms related to the target density functional, which arise naturally from the convergence of a normalized volume of a $k$-nearest neighbor ball to a Gamma distribution in the large-sample limit. Some instantiations of the proposed estimator recover existing $k$-nearest neighbor based estimators of Shannon and Rényi entropies and Kullback--Leibler and Rényi divergences, while others yield new consistent estimators for many other functionals, such as Jensen--Shannon divergence and generalized entropies and divergences. A unified finite-sample analysis of the proposed estimator is presented that builds on a recent result by Gao, Oh, and Viswanath (2017) on the finite-sample behavior of the Kozachenko--Leonenko estimator of entropy.
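To make the $k$-nearest neighbor idea concrete, the following is a minimal sketch of the classical Kozachenko--Leonenko estimator of Shannon entropy, which the abstract names as one of the existing estimators recovered by the general construction. It is not the paper's code and does not implement the inverse Laplace transform machinery. The function names `digamma_int` and `kl_entropy` are hypothetical, the neighbor search is brute force, and the sample points are assumed to be distinct.

```python
import math
import random


def digamma_int(m):
    """Digamma function at a positive integer m: psi(m) = -gamma + sum_{j<m} 1/j."""
    euler_gamma = 0.5772156649015329
    return -euler_gamma + sum(1.0 / j for j in range(1, m))


def kl_entropy(points, k=1):
    """Kozachenko--Leonenko entropy estimate (in nats) from a list of d-tuples.

    Uses psi(n) - psi(k) + log(c_d) + (d / n) * sum_i log(r_i), where r_i is the
    Euclidean distance from point i to its k-th nearest neighbor and c_d is the
    volume of the unit ball in d dimensions. Assumes no duplicate points.
    """
    n = len(points)
    d = len(points[0])
    # log volume of the d-dimensional unit ball: pi^(d/2) / Gamma(d/2 + 1)
    log_unit_ball = (d / 2) * math.log(math.pi) - math.lgamma(d / 2 + 1)
    total_log_radius = 0.0
    for i, x in enumerate(points):
        # Brute-force O(n) distances per point; fine for a small illustration.
        dists = sorted(math.dist(x, y) for j, y in enumerate(points) if j != i)
        total_log_radius += math.log(dists[k - 1])
    return digamma_int(n) - digamma_int(k) + log_unit_ball + d * total_log_radius / n


# Usage: compare the estimate with the known entropy of a standard normal.
random.seed(0)
sample = [(random.gauss(0.0, 1.0),) for _ in range(400)]
estimate = kl_entropy(sample, k=3)
true_entropy = 0.5 * math.log(2 * math.pi * math.e)
print(f"estimate = {estimate:.3f}, true value = {true_entropy:.3f}")
```

The brute-force search keeps the sketch dependency-free; for larger samples a spatial index such as a k-d tree would be the usual choice.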
