Navigating Demand Uncertainty in Container Shipping: Deep Reinforcement Learning for Enabling Adaptive and Feasible Master Stowage Planning

Paper and Code

Feb 19, 2025

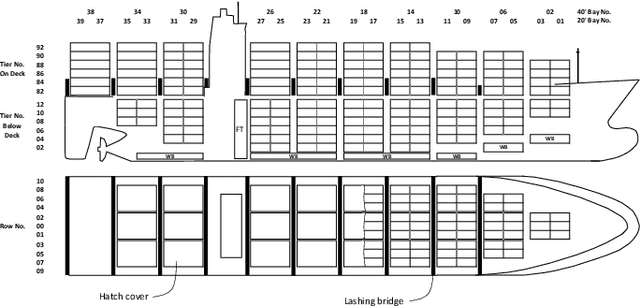

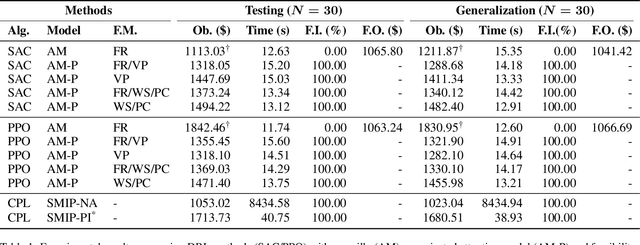

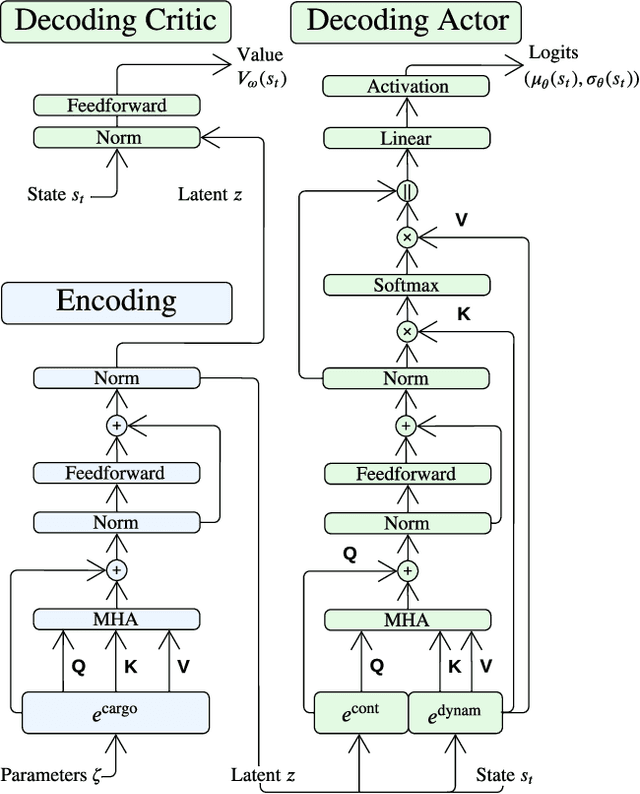

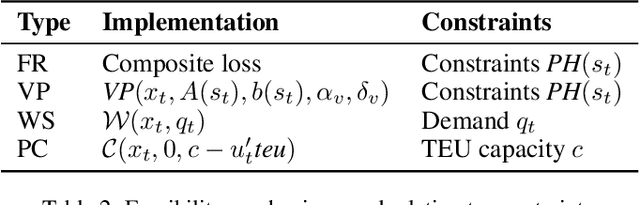

Reinforcement learning (RL) has shown promise in solving various combinatorial optimization problems. However, conventional RL faces challenges when dealing with real-world constraints, especially when action space feasibility is explicit and dependent on the corresponding state or trajectory. In this work, we focus on using RL in container shipping, often considered the cornerstone of global trade, by dealing with the critical challenge of master stowage planning. The main objective is to maximize cargo revenue and minimize operational costs while navigating demand uncertainty and various complex operational constraints, namely vessel capacity and stability, which must be dynamically updated along the vessel's voyage. To address this problem, we implement a deep reinforcement learning framework with feasibility projection to solve the master stowage planning problem (MPP) under demand uncertainty. The experimental results show that our architecture efficiently finds adaptive, feasible solutions for this multi-stage stochastic optimization problem, outperforming traditional mixed-integer programming and RL with feasibility regularization. Our AI-driven decision-support policy enables adaptive and feasible planning under uncertainty, optimizing operational efficiency and capacity utilization while contributing to sustainable and resilient global supply chains.
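To make the idea of feasibility projection concrete, the following is a minimal sketch, not the paper's implementation. It assumes a single linear capacity constraint and a Euclidean projection; the paper's projection and its stability constraints may differ. The function name `project_to_capacity` and its parameters are hypothetical. A raw policy action (a proposed load per cargo class) is mapped to the nearest point that is non-negative and within the weighted capacity.

```python
def project_to_capacity(action, weights, capacity, iters=60):
    """Euclidean projection of `action` onto {x >= 0, sum(w_i * x_i) <= capacity}.

    Illustrative assumptions: all weights are strictly positive and
    capacity is non-negative. Only a capacity constraint is modelled here.
    """
    # Negative loads are never feasible, so clip them first.
    clipped = [max(0.0, a) for a in action]
    if sum(w * x for w, x in zip(weights, clipped)) <= capacity:
        return clipped  # already feasible, nothing more to do

    # The projection has the form x_i = max(0, a_i - lam * w_i) for some
    # multiplier lam >= 0. The resulting load decreases as lam grows,
    # so lam can be found by bisection.
    def load(lam):
        return sum(w * max(0.0, a - lam * w) for a, w in zip(action, weights))

    lo, hi = 0.0, max(a / w for a, w in zip(action, weights))  # load(hi) == 0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if load(mid) > capacity:
            lo = mid
        else:
            hi = mid  # keep hi on the feasible side

    # Use hi so the returned point never exceeds the capacity.
    return [max(0.0, a - hi * w) for a, w in zip(action, weights)]
```

In an RL loop, a step like this would sit between the policy output and the environment, so that only feasible actions are executed. Whether gradients flow through the projection is a separate design choice that this sketch does not address.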
