MvSR-NAT: Multi-view Subset Regularization for Non-Autoregressive Machine Translation


Aug 19, 2021

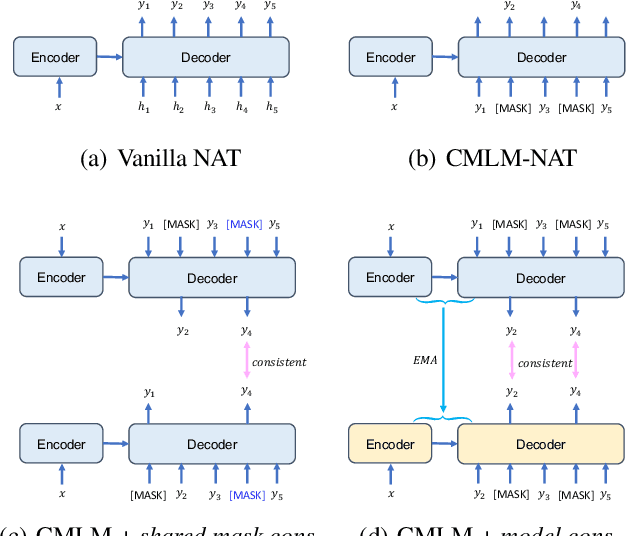

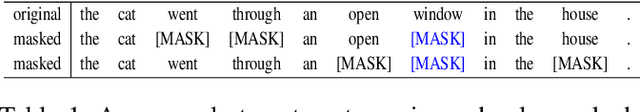

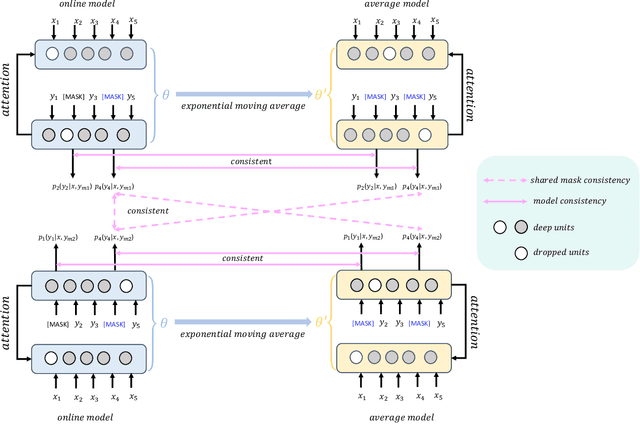

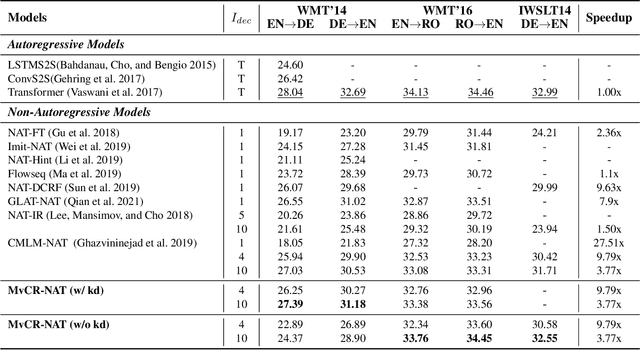

Conditional masked language models (CMLM) have shown impressive progress in non-autoregressive machine translation (NAT). They learn the conditional translation model by predicting a randomly masked subset of the target sentence. Based on the CMLM framework, we introduce Multi-view Subset Regularization (MvSR), a novel regularization method to improve the performance of the NAT model. Specifically, MvSR consists of two parts: (1) *shared mask consistency*: we forward the same target with different mask strategies and encourage the predictions at the shared mask positions to be consistent with each other; (2) *model consistency*: we maintain an exponential moving average of the model weights and enforce the predictions to be consistent between the average model and the online model. Without changing the CMLM-based architecture, our approach achieves remarkable performance on three public benchmarks, with gains of 0.36-1.14 BLEU over previous NAT models. Moreover, compared with the stronger Transformer baseline, we narrow the gap to 0.01-0.44 BLEU on small datasets (WMT16 RO$\leftrightarrow$EN and IWSLT DE$\rightarrow$EN).
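The two consistency terms described in the abstract can be illustrated with a small, framework-free sketch. This is not the authors' implementation: the abstract does not state which divergence is used or how the moving average is parameterized, so the symmetric KL divergence, the `decay` value, and every function name below are assumptions chosen for illustration. Predictions are represented as plain per-position probability lists rather than model outputs.

```python
import math
import random


def sample_mask(length, rng):
    """Pick a random non-empty subset of target positions to mask (CMLM-style)."""
    k = rng.randint(1, length)
    return set(rng.sample(range(length), k))


def kl(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as equal-length lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def symmetric_kl(p, q):
    """Assumed consistency measure; the abstract does not name the divergence."""
    return 0.5 * (kl(p, q) + kl(q, p))


def consistency(probs_a, probs_b, positions):
    """Average divergence between two sets of predictions over given positions."""
    positions = sorted(positions)
    if not positions:
        return 0.0
    total = sum(symmetric_kl(probs_a[i], probs_b[i]) for i in positions)
    return total / len(positions)


def shared_mask_consistency(probs_a, probs_b, mask_a, mask_b):
    """Compare two masked views of the same target on positions masked in both."""
    return consistency(probs_a, probs_b, mask_a & mask_b)


def model_consistency(probs_online, probs_average, mask):
    """Compare online-model and averaged-model predictions on masked positions."""
    return consistency(probs_online, probs_average, mask)


def ema_update(avg_weights, online_weights, decay=0.999):
    """Exponential moving average of weights; the decay value is an assumption."""
    return [decay * a + (1.0 - decay) * w
            for a, w in zip(avg_weights, online_weights)]


if __name__ == "__main__":
    rng = random.Random(0)
    length, vocab = 6, 4

    def fake_probs():
        # Stand-in for model output: one random distribution per target position.
        rows = []
        for _ in range(length):
            raw = [rng.random() + 1e-3 for _ in range(vocab)]
            s = sum(raw)
            rows.append([r / s for r in raw])
        return rows

    mask_a, mask_b = sample_mask(length, rng), sample_mask(length, rng)
    view_a, view_b = fake_probs(), fake_probs()
    print("shared positions:", sorted(mask_a & mask_b))
    print("shared mask consistency:",
          shared_mask_consistency(view_a, view_b, mask_a, mask_b))
    print("model consistency:", model_consistency(view_a, view_b, mask_a))
    print("EMA step:", ema_update([1.0, 2.0], [0.0, 4.0], decay=0.9))
```

In a real training loop these terms would presumably be added to the standard CMLM masked-prediction loss with some weighting, and `ema_update` would be applied to the model parameters after each optimizer step; the weighting and schedule are not given in the abstract.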
