Music Transcription Based on Bayesian Piece-Specific Score Models Capturing Repetitions

Aug 18, 2019

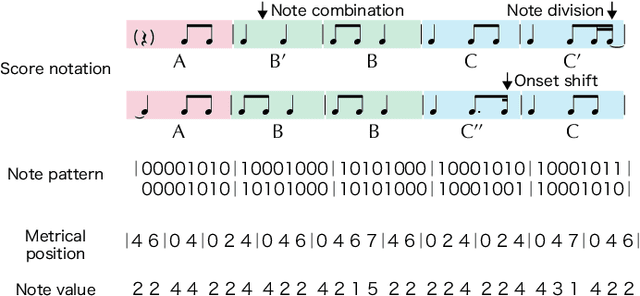

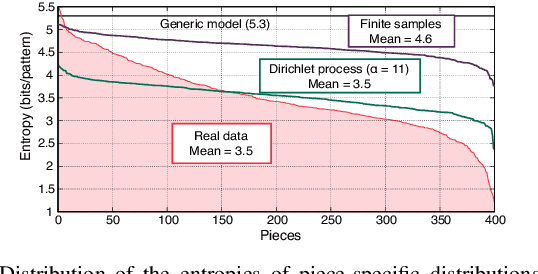

Most work on models for music transcription has focused on describing the local sequential dependence of notes in musical scores and has failed to capture their global repetitive structure, which can be a useful guide for transcription. Focusing on rhythm, we formulate several classes of Bayesian Markov models of musical scores that describe repetitions indirectly through sparse transition probabilities of notes or note patterns. This enables us to construct piece-specific models for unseen scores whose repetitive structure is not fixed in advance, and to derive tractable inference algorithms. Moreover, to describe approximate repetitions, we explicitly incorporate a process that modifies the repeated notes or note patterns. We apply these models as prior music language models for rhythm transcription, where piece-specific score models are inferred from performed MIDI data by unsupervised learning, in contrast to the conventional supervised construction of score models. Evaluations using vocal melodies of popular music showed that the Bayesian models improved transcription accuracy for most of the tested model types, indicating that the proposed approach is effective across model types.
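
The link between sparse transition probabilities and repetition can be illustrated with a small sketch. It is not the paper's model: the abstract does not give the exact formulation, so the state set `NOTE_VALUES`, the symmetric Dirichlet prior, and the concentration values 0.05 and 10.0 are all assumptions chosen for illustration. The idea shown is only that a Dirichlet prior with a small concentration parameter puts most of each row's probability mass on a few successors, so sequences drawn from the chain tend to revisit the same note patterns.

```python
import random

# Hypothetical state set: note values as fractions of a whole note (an assumption).
NOTE_VALUES = ["1/16", "1/8", "3/16", "1/4", "3/8", "1/2", "3/4", "1"]


def sample_dirichlet(alpha, k, rng):
    """Draw one probability vector from a symmetric Dirichlet(alpha) of size k."""
    while True:
        draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
        total = sum(draws)
        if total > 0.0:  # guard against numerical underflow for tiny alpha
            return [d / total for d in draws]


def sample_transition_matrix(alpha, k, rng):
    """Draw each row of a k-by-k transition matrix independently from the prior."""
    return [sample_dirichlet(alpha, k, rng) for _ in range(k)]


def generate_sequence(matrix, length, rng):
    """Sample a state sequence of the given length from the Markov chain."""
    k = len(matrix)
    state = rng.randrange(k)
    sequence = [state]
    for _ in range(length - 1):
        state = rng.choices(range(k), weights=matrix[state])[0]
        sequence.append(state)
    return sequence


def mean_max_prob(matrix):
    """Average of the largest entry in each row; close to 1 means sparse rows."""
    return sum(max(row) for row in matrix) / len(matrix)


rng = random.Random(0)
k = len(NOTE_VALUES)
sparse = sample_transition_matrix(0.05, k, rng)  # small alpha: sparse rows
dense = sample_transition_matrix(10.0, k, rng)   # large alpha: near-uniform rows

for name, matrix in (("sparse", sparse), ("dense", dense)):
    rhythm = [NOTE_VALUES[s] for s in generate_sequence(matrix, 16, rng)]
    print(name, round(mean_max_prob(matrix), 2), " ".join(rhythm))
```

In a Bayesian treatment along these lines, the transition matrix would be inferred per piece from the performance data rather than sampled once from the prior as done here; the sketch only shows why a sparsity-inducing prior favors repetitive sequences.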
